
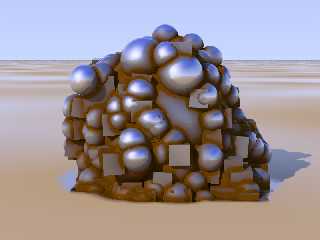

To accomplish its task efficiently, radiosity needs to keep track of how

far other objects are. Isn't it a shame to leave this information to

radiosity alone, when it would come in handy for a proximity pattern?

This shot is proof of that concept.
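As a rough illustration of the idea (not clipka's actual code), this sketch assumes the radiosity data works like Ward-style irradiance caching. In that scheme each sample shoots rays over the hemisphere and keeps the harmonic mean of the hit distances, a value dominated by the nearest geometry. The sketch reads a proximity value straight off that mean. The single-wall scene, the `falloff` scale and all function names are hypothetical.

```python
import math

def harmonic_mean_distance(distances):
    """Harmonic mean of hemisphere ray-hit distances.

    `None` marks a ray that hit nothing; it counts as infinitely far away and
    so adds nothing to the sum of reciprocals. Nearby geometry dominates.
    """
    reciprocal_sum = sum(1.0 / d for d in distances if d is not None)
    if reciprocal_sum == 0.0:
        return math.inf  # nothing in sight: no proximity at all
    return len(distances) / reciprocal_sum

def hemisphere_directions(n_theta=8, n_phi=16):
    """Evenly spaced (deterministic) unit directions over the +y hemisphere."""
    dirs = []
    for i in range(n_theta):
        theta = (i + 0.5) / n_theta * (math.pi / 2)  # angle away from the +y normal
        for j in range(n_phi):
            phi = (j + 0.5) / n_phi * 2.0 * math.pi
            dirs.append((math.sin(theta) * math.cos(phi),
                         math.cos(theta),
                         math.sin(theta) * math.sin(phi)))
    return dirs

def wall_hit_distance(px, direction, wall_x):
    """Distance from (px, 0, 0) to the plane x = wall_x along `direction`, or None."""
    dx = direction[0]
    if dx <= 0.0:
        return None  # ray runs away from (or parallel to) the wall
    return (wall_x - px) / dx

def proximity(px, wall_x, falloff=1.0):
    """Hypothetical proximity value in [0, 1] for a floor point left of the wall
    (px < wall_x): near 0 close to the wall, 1 once the wall is far away."""
    dists = [wall_hit_distance(px, d, wall_x) for d in hemisphere_directions()]
    return min(1.0, harmonic_mean_distance(dists) / falloff)
```

For example, `proximity(0.0, 0.05)` comes out lower than `proximity(0.0, 0.1)`, and a wall ten units away saturates at 1.0.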

Attachments: proximity.png (276 KB)

---

> To accomplish its task efficiently, radiosity needs to keep track of how

> far other objects are. Isn't it a shame to leave this information to

> radiosity alone, when it would come in handy for a proximity pattern?

>

> This shot is proof of that concept.

>

Very promising... I'm already impatient to try it. Indeed, the wet-sand

beach surface is also very nice, and intriguing.

--

jaime

---

Jaime Vives Piqueres wrote:

> Very promising... I'm already impatient to try it. Indeed, the wet-sand

> beach surface is also very nice, and intriguing.

I'm quite confident I can finish it up in time for beta 35 ;-). ATM it only

works with texture maps - which of course is flexible enough, but I'd

prefer to make it work with pigment and normal maps, too. (No way of

making it work with pattern functions though. At least not as long as it

re-uses radiosity data.)

I came across the wet-beach idea by accident. It's basically just a bozo

texture map with a dry-beach (= diffuse only) and a wet-beach (= highly

reflective) texture. Except that I also did make use of that proximity

pattern to force the wet-beach texture in the proximity of "the Thing",

to give the illusion of a washed-out channel around it.
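Here is a minimal sketch of the wet-beach logic described above. It assumes the proximity value was combined with the bozo value by taking the larger of the two; the post doesn't say how they were combined. `noise_value` stands in for bozo. The entry positions 0.5 and 0.7 are made-up texture_map entries, and the two textures are assumed to blend linearly between them.

```python
def wet_weight(noise_value, proximity_value, dry_until=0.5, wet_from=0.7):
    """Weight of the wet texture (0 = fully dry, 1 = fully wet) at one point.

    noise_value:     stand-in for the bozo pattern, assumed to be in [0, 1]
    proximity_value: 0 right next to the object, 1 far from it (assumed scale)
    dry_until, wet_from: hypothetical texture_map entry positions
    """
    # Close to the object, 1 - proximity approaches 1 and overrides the noise,
    # which forces the wet texture into a channel around the object.
    v = max(noise_value, 1.0 - proximity_value)
    if v <= dry_until:
        return 0.0
    if v >= wet_from:
        return 1.0
    # Between the two entries, blend linearly.
    return (v - dry_until) / (wet_from - dry_until)
```

A point the noise would leave dry (`wet_weight(0.2, 1.0)` is 0.0) turns fully wet right next to the object (`wet_weight(0.2, 0.0)` is 1.0).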

---

clipka <ano### [at] anonymous org> wrote:

> To accomplish its task efficiently, radiosity needs to keep track of how

> far other objects are. Isn't it a shame to leave this information to

> radiosity alone, when it would come in handy for a proximity pattern?

>

> This shot is proof of that concept.

And a good proof it is! I do like proximity patterns :)

To be truly useful though, the effect must be a pattern or a function so you can

use it in any material block.

Will the effect remain the same even if you change the camera settings?

Sam

---

Samuel Benge wrote:

> clipka <ano### [at] anonymous org> wrote:

>> To accomplish its task efficiently, radiosity needs to keep track of how

>> far other objects are. Isn't it a shame to leave this information to

>> radiosity alone, when it would come in handy for a proximity pattern?

>>

>> This shot is proof of that concept.

>

> And a good proof it is! I do like proximity patterns :)

>

> To be truly useful though, the effect must be a pattern or a function so you can

> use it in any material block.

>

> Will the effect remain the same even if you change the camera settings?

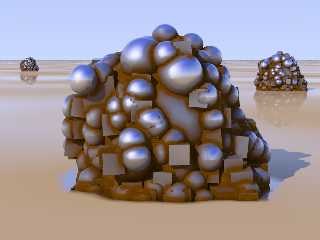

I guess details may suffer when too far away from the camera (due to

radiosity code maintaining some maximum sample density, which depends on

distance from camera), but they'll just get less precise, and don't seem

to "shift" (that was a problem initially).

See attached shot.

One thing that bothers me though is that the proximity pattern is highly

sensitive to final-render-sampling artifacts, so it requires a

higher-resolution pretrace.

Attachments: proximity.png (215 KB)

---

"clipka" <ano### [at] anonymous org> wrote in message

news:4a8fc8e7@news.povray.org...

> To accomplish its task efficiently, radiosity needs to keep track of how

> far other objects are. Isn't it a shame to leave this information to

> radiosity alone, when it would come in handy for a proximity pattern?

>

> This shot is proof of that concept.

>

>

Indeed it is.

Looks a lot like Sam's proximity pattern... :-)

Thomas

---

clipka <ano### [at] anonymous org> wrote:

> I guess details may suffer when too far away from the camera (due to

> radiosity code maintaining some maximum sample density, which depends on

> distance from camera), but they'll just get less precise, and don't seem

> to "shift" (that was a problem initially).

That's good to know, since a shifting pattern can lose all semblance of realism

during animation.

> See attached shot.

It seems to behave itself well enough.

> One thing that bothers me though is that the proximity pattern is highly

> sensitive to final-render-sampling artifacts, so it requires a

> higher-resolution pretrace.

There's always a trade-off somewhere :(

Sam

---

"Thomas de Groot" <tDOTdegroot@interDOTnlANOTHERDOTnet> wrote:

> "clipka" <ano### [at] anonymous org> wrote in message

> > This shot is proof of that concept.

>

> Indeed it is.

> Looks a lot like Sam's proximity pattern... :-)

I think C. Lipka's pattern is more like ambient occlusion and less like an

averaged object pattern. With the latter you can apply colors and patterns to

outside edges as well as the inside ones. With the former you can only get

inside edge information and can't do nifty things like scratch up a box's edges

while leaving the sides untouched. I hope I'm wrong though :)

Sam

---

Samuel Benge wrote:

> I think C. Lipka's pattern is more like ambient occlusion and less like an

> averaged object pattern. With the latter you can apply colors and patterns to

> outside edges as well as the inside ones. With the former you can only get

> inside edge information and can't do nifty things like scratch up a box's edges

> while leaving the sides untouched. I hope I'm wrong though :)

You're not - you're actually perfectly right.

Radiosity doesn't collect edge proximity information (it doesn't need

it), only crevice proximity - which I think is the more important of the

two. (As you wrote, there's always a trade-off somewhere.)

For a full-fledged stand-alone proximity algorithm, I guess a voxel-tree

based approach would do fine. But that would mean a lot more coding

effort - and computational overhead for data that can't be shared with

any other features.
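To make the trade-off concrete, here is a deliberately simple stand-in for the voxel approach. It uses a flat uniform grid rather than a voxel tree, with a distance field precomputed by a multi-source breadth-first search. The names `voxel_distance_field` and `proximity_at` and the chessboard distance metric are assumptions for illustration only. Lookups are cheap, but the grid has to be built and stored for this one purpose, which is the overhead mentioned above.

```python
from collections import deque

# 26-connected neighbourhood: every voxel touching a face, edge or corner.
NEIGHBOURS = [(dx, dy, dz)
              for dx in (-1, 0, 1) for dy in (-1, 0, 1) for dz in (-1, 0, 1)
              if (dx, dy, dz) != (0, 0, 0)]

def voxel_distance_field(occupied, size):
    """Chessboard distance, in voxels, from each cell of a size^3 grid to the
    nearest occupied cell (multi-source breadth-first search)."""
    dist = {cell: 0 for cell in occupied}
    queue = deque(occupied)
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in NEIGHBOURS:
            n = (x + dx, y + dy, z + dz)
            if n not in dist and all(0 <= c < size for c in n):
                dist[n] = dist[(x, y, z)] + 1  # first visit is the shortest path
                queue.append(n)
    return dist

def proximity_at(point, dist, voxel_size, falloff_voxels):
    """Look up a proximity value in [0, 1]: 0 inside occupied voxels, 1 at or
    beyond `falloff_voxels` voxels away (or outside the grid)."""
    cell = tuple(int(c // voxel_size) for c in point)
    return min(1.0, dist.get(cell, float("inf")) / falloff_voxels)
```

Unlike the radiosity-based pattern, a field like this is measured from the geometry rather than from visible crevices. Outside edges would therefore also get a value.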

(BTW, someone's asking in povray.newusers for advice on your fastprox

macros... and speaking of them, do you have them for download anywhere?)

---

clipka wrote:

> (BTW, someone's asking in povray.newusers for advice on your fastprox

> macros... and speaking of them, do you have them for download anywhere?)

Sure, a new one is in p.text.scene-files.

I had a lot more to say, but Thunderbird let me send it into the void

accidentally. Basically, I was getting into the possibility of reversing

inside/outside surface evaluation to obtain both inside/outside edge

data and displaying it as a pattern: where black is a crevice, gray is a

flat surface, and white is a peak. It might take twice as long to parse

though :(

Sam
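Here is a rough sketch of such a three-level pattern. It assumes a plain averaged inside test, not the actual fastprox macros. The idea is to sample a small symmetric cloud of points around a surface point and count how many fall inside the object. About half do on a flat face, more in a crevice, and fewer on an edge or peak. As a result, `1 - fraction` comes out gray, dark and light respectively. `box`, `union` and `edge_pattern` are hypothetical helpers standing in for a real inside test.

```python
def box(lo, hi):
    """Inside test for an axis-aligned box (hypothetical helper)."""
    return lambda p: all(l <= c <= h for c, l, h in zip(p, lo, hi))

def union(*shapes):
    """Inside test for a union of shapes."""
    return lambda p: any(s(p) for s in shapes)

def edge_pattern(inside, point, radius=0.4, n=4):
    """1 - (fraction of nearby samples inside the object).

    Samples lie on a symmetric grid of 2n half-step offsets per axis, so none
    lands exactly on `point`. Result: about 0.5 on a flat surface (gray),
    lower in crevices (towards black), higher on edges and peaks (towards white).
    """
    step = radius / n
    offsets = [(i + 0.5) * step for i in range(-n, n)]
    total = inside_count = 0
    for dx in offsets:
        for dy in offsets:
            for dz in offsets:
                total += 1
                if inside((point[0] + dx, point[1] + dy, point[2] + dz)):
                    inside_count += 1
    return 1.0 - inside_count / total
```

On a unit cube this gives 0.5 on a face, 0.75 on an edge and 0.875 at a corner. The inner corner of an L-shaped union gives 0.25. In this sketch a single averaged test covers both kinds of edges. Each sample is a separate inside test, so the cost grows with `(2n)^3`.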
