
"yesbird" <nomail@nomail> wrote:

> Don't you think about using some external tools, just for reference and

> experiments ? Yesterday I googled about photogrammetry and found some popular

> open-source, like: https://micmac.ensg.eu/index.php/Accueil

Well, yes. I frequently use easily accessible online tools and some simple graphics programs to help me work things out, make a rough model, or verify that what I'm doing is correct (a sanity check).

I just try not to get too sidetracked installing and learning third-party software that may or may not do what I actually want. However, if it does, and there's source code that looks like I could translate it to SDL, or it's open source and we could use the code in POV-Ray's source as an internal function, then that always seems to be the way to go.

> These clouds ?

Yep, that's them.

> Please notify me about any updates, I am impressed and very interested.

Same with POV-Lab. I'm sure there are a great many things both software packages could profit from if their user bases were brought into closer contact.

I have also been mulling over whether someone should check out this ChatGPT AI thing and ask it to write some POV-Ray scenes, or to translate known algorithms from other languages into SDL, to see what it spits out.

- BW

---

"Bald Eagle" <cre### [at] netscape net> wrote:

> I'm trying to do a sort of rough photogrammetric conversion of points on the

> screen to true 3D coordinates with the same apparent position.

>

I've been wondering if the result you're after is anything like Norbert Kern's

'position finder' code from 2006. Thomas De G and I spent time in 2018 trying to

improve it...

https://news.povray.org/povray.tools.general/thread/%3Cweb.5ba183edb47e1707a47873e10%40news.povray.org%3E/

IIRC, you first render a scene, then pick an x/y pixel position in that image

render and feed it into the code, and it gives you the actual 3D position in the

scene that the pixel corresponds to.

I have to admit that I haven't revisited that code since then, so my memory of

what it does is a bit hazy. I need to re-read that post myself! I do know that

Thomas and I also posted image examples at the time, of our work-in-progress.

---

"Kenneth" <kdw### [at] gmail com> wrote:

> I've been wondering if the result you're after is anything like Norbert Kern's

> 'position finder' code from 2006. Thomas De G and I spent time in 2018 trying to

It's a similar idea, and if you recall, I played with that code as well.

> IIRC, you first render a scene, then pick an x/y pixel position in that image

> render and feed it into the code, and it gives you the actual 3D position in the

> scene that the pixel corresponds to.

The difference here is that we don't have the underlying scene code and

therefore the "actual 3D objects" to trace back to. That code takes a pixel,

shoots a ray out from the camera position through that screen/image plane

position, and returns the result of a trace() call, which is how we get the

z-coordinate, the actual x & y coordinates at that z, and even the surface

normal vector.
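
A minimal Python sketch of that forward process, written as a plain pinhole-camera model rather than SDL and not taken from Norbert Kern's code. Assumptions: POV-Ray-style axes (x right, y up, z forward), a `look_at` camera whose `direction` has length `dir_len`, `up` length 1 and `right` length equal to the image aspect ratio (as with POV-Ray's default perspective camera), continuous pixel coordinates with y counted from the top, and a single plane standing in for whatever object `trace()` would hit. The names `pixel_ray` and `hit_plane` are hypothetical.

```python
import math

# Minimal 3-vector helpers on tuples.
def add(a, b): return (a[0] + b[0], a[1] + b[1], a[2] + b[2])
def sub(a, b): return (a[0] - b[0], a[1] - b[1], a[2] - b[2])
def scale(a, s): return (a[0] * s, a[1] * s, a[2] * s)
def dot(a, b): return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

def camera_basis(location, look_at, sky=(0.0, 1.0, 0.0)):
    """Unit forward, right and up vectors (x right, y up, z forward)."""
    fwd = normalize(sub(look_at, location))
    right = normalize(cross(sky, fwd))
    up = cross(fwd, right)
    return fwd, right, up

def pixel_ray(px, py, width, height, location, look_at, dir_len=1.0):
    """Origin and unit direction of the ray through pixel (px, py); py counts from the top."""
    fwd, right, up = camera_basis(location, look_at)
    u = px / width - 0.5           # -0.5 (left edge) .. 0.5 (right edge)
    v = 0.5 - py / height          # -0.5 (bottom edge) .. 0.5 (top edge)
    aspect = width / height        # assumed length of the camera's right vector
    d = add(add(scale(fwd, dir_len), scale(right, u * aspect)), scale(up, v))
    return location, normalize(d)

def hit_plane(origin, direction, normal, offset):
    """Stand-in for trace(): hit point on the plane dot(P, normal) == offset, plus its normal."""
    denom = dot(direction, normal)
    if abs(denom) < 1e-12:
        return None                # ray parallel to the plane
    t = (offset - dot(origin, normal)) / denom
    if t <= 0:
        return None                # plane is behind the camera
    return add(origin, scale(direction, t)), normal

# Usage: camera above a ground plane y = 0, looking at the origin, 800x600 image.
origin, direction = pixel_ray(400, 300, 800, 600, (0.0, 2.0, -5.0), (0.0, 0.0, 0.0))
print(hit_plane(origin, direction, (0.0, 1.0, 0.0), 0.0))
```

With the scene geometry available, the hit point and normal fall straight out; in the photogrammetry case only the ray from `pixel_ray` is known.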

But we don't have any of that - we only have the image.

What photogrammetry does is reason backwards through that process without any of the "cheats" like trace(): using the camera properties and knowledge of geometry, it figures out where the object would actually have been.

It's "easier" with more images of the same scene taken from different camera locations and orientations, because you can cross the rays from matching points and get the z-coordinates that way.
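
The "cross the rays" step can be sketched as a closest-approach calculation. With real, slightly noisy data two back-projected rays rarely meet exactly, so a common choice is the midpoint of their shortest connecting segment, with that segment's length as an error measure. This is a generic sketch (the name `triangulate` is hypothetical), not code from the thread; it treats the rays as infinite lines and does not check that the solution lies in front of both cameras.

```python
import math

def add(a, b): return tuple(x + y for x, y in zip(a, b))
def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))

def triangulate(o1, d1, o2, d2):
    """Midpoint of the shortest segment between lines o1 + s*d1 and o2 + t*d2.

    Returns (point, gap), where gap is the length of that segment
    (0 when the lines truly intersect), or None for parallel lines.
    """
    w0 = sub(o1, o2)
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        return None                # parallel: no unique closest pair
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    p1 = add(o1, scale(d1, s))     # closest point on line 1
    p2 = add(o2, scale(d2, t))     # closest point on line 2
    gap = math.sqrt(dot(sub(p1, p2), sub(p1, p2)))
    return scale(add(p1, p2), 0.5), gap

# Usage: two cameras 2 units apart, both seeing a feature that sits at <0, 0, 5>.
print(triangulate((-1.0, 0.0, 0.0), (1.0, 0.0, 5.0), (1.0, 0.0, 0.0), (-1.0, 0.0, 5.0)))
```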

If I understand things correctly, using a single image is like trying to look at an oncoming sine wave and determine where the curve is. There are an infinite number of sinusoidal peaks and troughs, so you just pick the one that makes the most sense in the present case. It's also like asking "which of the infinite reflections of you in the fun house is the 'first' one?"

- BW

---

Now, following along with all of that, running Norbert Kern's position finder would serve as a check on the whole photogrammetry process.

If we made a nice, simple test scene with well-defined, easily visible reference points and known 3D locations, then every step of the photogrammetric back-projection (the undoing of the projection matrix that made the image) could be matched up with planes, rays, vectors, and points, like checking the manual solution to a math problem with a calculator.
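
A sketch of that round-trip check under an assumed pinhole model (POV-Ray-style axes, a `look_at` camera with `right` length equal to the aspect ratio and `up` length 1; the class and function names are hypothetical): project known 3D reference points to pixels, back-project each pixel to a ray, and confirm that the original point lies on that ray.

```python
import math

def sub(a, b): return tuple(x - y for x, y in zip(a, b))
def add(a, b): return tuple(x + y for x, y in zip(a, b))
def scale(a, s): return tuple(x * s for x in a)
def dot(a, b): return sum(x * y for x, y in zip(a, b))
def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])
def normalize(a): return scale(a, 1.0 / math.sqrt(dot(a, a)))

class PinholeCamera:
    """Hypothetical look_at camera: right length = width/height, up length = 1."""
    def __init__(self, location, look_at, width, height, dir_len=1.0, sky=(0.0, 1.0, 0.0)):
        self.loc, self.w, self.h, self.dir_len = location, width, height, dir_len
        self.aspect = width / height
        self.fwd = normalize(sub(look_at, location))
        self.right = normalize(cross(sky, self.fwd))
        self.up = cross(self.fwd, self.right)

    def project(self, p):
        """3D point -> continuous pixel (px, py), py counted from the top."""
        rel = sub(p, self.loc)
        xc, yc, zc = dot(rel, self.right), dot(rel, self.up), dot(rel, self.fwd)
        u = xc / zc * self.dir_len / self.aspect
        v = yc / zc * self.dir_len
        return ((u + 0.5) * self.w, (0.5 - v) * self.h)

    def back_project(self, px, py):
        """Pixel -> unit ray direction from the camera location (depth unknown)."""
        u, v = px / self.w - 0.5, 0.5 - py / self.h
        d = add(add(scale(self.fwd, self.dir_len), scale(self.right, u * self.aspect)),
                scale(self.up, v))
        return normalize(d)

def distance_to_ray(p, origin, unit_dir):
    """Perpendicular distance from point p to the line origin + t * unit_dir."""
    rel = sub(p, origin)
    perp = sub(rel, scale(unit_dir, dot(rel, unit_dir)))
    return math.sqrt(dot(perp, perp))

# Usage: known reference points in a test scene; each round trip should land back on its ray.
cam = PinholeCamera((0.0, 3.0, -8.0), (0.0, 0.5, 0.0), 800, 600)
references = [(0.0, 0.5, 0.0), (-2.0, 0.0, 1.0), (1.5, 2.0, -1.0), (3.0, 0.25, 4.0)]
for p in references:
    px, py = cam.project(p)
    print(p, (round(px, 2), round(py, 2)), distance_to_ray(p, cam.loc, cam.back_project(px, py)))
```

A distance that is not essentially zero points to a mismatch between the forward and backward steps, which is exactly the kind of error such a test scene is meant to catch.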

---

On 05/02/2023 05:20, Bald Eagle wrote:

>

> I'm trying to do a sort of rough photogrammetric conversion of points on the

> screen to true 3D coordinates with the same apparent position.

>

> ...

>

>

I'm not sure I understood everything, but I thought of this:

In screen.inc, there is a macro that converts a 3D position to a 2D position.

By analyzing how this macro works, it may be possible to do the opposite, or to use a trial-and-error loop to get the 3D vector.

It's just an idea... I haven't tested it, and I don't know if it's possible.
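
One way to read the trial-and-error idea: the forward projection (3D to pixel) is easy, so run it repeatedly and bisect until the projected pixel matches the target. This sketch assumes a camera at the origin looking down +z with POV-Ray-like default vectors and, crucially, that the depth is already known from somewhere else; with depth left free, every point on the pixel's ray is an equally good answer. The function names are hypothetical, and this is not how screen.inc works internally.

```python
def project_px(p, width=800, height=600, dir_len=1.0):
    """Camera at the origin looking down +z; 3D point -> pixel (px, py), py from the top."""
    x, y, z = p
    u = x / z * dir_len / (width / height)
    v = y / z * dir_len
    return ((u + 0.5) * width, (0.5 - v) * height)

def bisect(f, target, lo, hi, iters=200):
    """Find s in [lo, hi] with f(s) close to target; f must be monotonic on the interval."""
    increasing = f(hi) > f(lo)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if (f(mid) < target) == increasing:
            lo = mid               # target lies on the 'hi' side of mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def unproject_at_depth(px, py, depth, span=1e3):
    """Trial-and-error inverse of project_px, valid only because depth is given.

    px depends only on x/z and py only on y/z, so each axis is searched separately.
    """
    x = bisect(lambda s: project_px((s, 0.0, depth))[0], px, -span, span)
    y = bisect(lambda s: project_px((0.0, s, depth))[1], py, -span, span)
    return (x, y, depth)

# Usage: project a point, then recover it from its pixel, given the right depth.
px, py = project_px((1.5, -0.75, 8.0))
print(unproject_at_depth(px, py, 8.0))
print(unproject_at_depth(px, py, 16.0))  # same pixel, twice as far along the same ray
```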

--

Kurtz le pirate

Compagnie de la Banquise

---

kurtz le pirate <kur### [at] gmail com> wrote:

> I'm not sure i understood everything, but i thought of this :

> In the "screen.inc", there is a macro that converts a 3d position to a

> 2d position.

>

> By analyzing how this macro works, it may be possible to do the opposite

> or do a try/test loop to get 3d vector.

As I (tried) to explain to Kenneth, you can't quite use that method to go

backwards, since it uses trace().

There's also the issue of the solid angle of view: if I have a sphere with radius 1 at 10 units away, I can make the same image with a sphere of radius 10 at 100 units away. So the z-coordinate is undetermined.
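
The sphere example can be checked numerically. A sphere of radius r whose center is at distance d subtends a half-angle of asin(r/d), so (1, 10) and (10, 100) look identical, and, for a camera at the origin, any point scaled by a factor k projects to the same image position. The sketch assumes a camera at the origin looking down +z with POV-Ray-like default `right`/`up` lengths; the function names are hypothetical.

```python
import math

def angular_radius(radius, distance):
    """Half-angle (radians) of the cone a sphere of this radius subtends at this center distance."""
    return math.asin(radius / distance)

def project_uv(p, aspect=4 / 3, dir_len=1.0):
    """Camera at the origin looking down +z: point -> image-plane <u, v>
    (roughly -0.5..0.5 for points inside the frame)."""
    x, y, z = p
    return (x / z * dir_len / aspect, y / z * dir_len)

# Radius 1 at 10 units and radius 10 at 100 units subtend the same angle...
print(angular_radius(1, 10), angular_radius(10, 100))
# ...and scaling any point by k away from the camera leaves its image position unchanged.
p = (0.8, -0.3, 10.0)
for k in (1, 10, 0.5):
    print(k, project_uv(tuple(c * k for c in p)))
```

Recovering the true depth from one view therefore needs some extra piece of information, such as a known object size or a known ground plane.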

I suppose I'm trying to use relative distances and sensible geometric

transformations which would give me a reasonable starting point for further

refinement.

I went through the first few pages of one of the matrix-transform methods, and it was mostly a lot of unproductive academic blathering that either went around in circles or just puffed up a lot and went nowhere. Lots more to read, though.

---

"Bald Eagle" <cre### [at] netscape net> wrote:

> kurtz le pirate <kur### [at] gmail com> wrote:

>

> > I'm not sure i understood everything, but i thought of this :

> > In the "screen.inc", there is a macro that converts a 3d position to a

> > 2d position.

[snip]

>

> As I (tried) to explain to Kenneth, you can't quite use that method to go

> backwards, since it uses trace().

Nope, no trace() in Screen.inc, just some math wizardry and a matrix. It might

be worth a look, just to see if something there could be reverse-engineered.

---

Photogrammetry is indeed an interesting subject. Over the years(!), I've tried to imagine how it could be done in SDL (using two stereo photos of a scene, not one, egads!)

My fuzzy idea would use eval_pigment to compare pixels, or blocks of them, then find what the horizontal/vertical pixel-distance *differences* are at various points in the photos, then somehow use that info to work out where in 3D space those 'matching' pixels correspond to, with some arbitrary scaling in z involved. Of course, I'm assuming that the original camera is looking straight ahead, not up or down. That might complicate things.(?)

A very basic idea, I admit, and without any fancy 'edge-finding' stuff.
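
For the simplest version of this idea (two identical cameras side by side, looking straight ahead with parallel view directions), the depth of a matched pixel pair follows from the horizontal disparity: Z = f * B / (x_left - x_right), where B is the camera separation and f is the focal length in pixels. For a POV-Ray-style perspective camera whose `right` length equals the aspect ratio and whose `up` length is 1, f works out to the `direction` length times the image height in pixels. Assumed here, not taken from the post: the right camera sits at +B along x, both images share the pixel center (cx, cy), y counts from the top, and the matching itself (for example by eval_pigment block comparison) has already been done. The function name is hypothetical.

```python
def stereo_point(xl, yl, xr, baseline, focal_px, cx, cy):
    """3D point (in the left camera's frame) from a matched pixel pair in a rectified stereo pair.

    xl, yl: pixel in the left image; xr: matching column in the right image
    (same row, since the cameras are side by side and parallel). y counts from the top.
    """
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("matched point must shift left in the right image")
    z = focal_px * baseline / disparity
    x = (xl - cx) * z / focal_px
    y = (cy - yl) * z / focal_px
    return (x, y, z)

# Usage: 800x600 renders, default-style camera (focal length 600 px), cameras 0.5 units apart.
print(stereo_point(500.0, 250.0, 450.0, baseline=0.5, focal_px=600.0, cx=400.0, cy=300.0))
```

If both cameras are tilted up or down by the same amount, the formula still holds in the cameras' own coordinate frame; the result then only needs rotating back into world coordinates.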

---

"Kenneth" <kdw### [at] gmail com> wrote:

> Nope, no trace() in Screen.inc, just some math wizardry and a matrix. It might

> be worth a look, just to see if something there could be reverse-engineered.

Right, sorry - I still had the "screen position finder" thing in my head...

screen.inc doesn't do anything except organize things into a well-defined box

that exactly matches the view frustum of the camera. The box is squished very

thin, and positioned so that it fills the entire camera from edge to edge.

So no, it still doesn't "convert 3D coordinates to 2D coordinates". What it does allow you to do is use <u, v> values of the image plane to place 3D objects at that location. That's why those values are in the -0.5 to 0.5 range. But all that does is define the image plane as a constant z-value (or some distance along whatever vector the view direction is defined as).

The matrix just rotates all of that into position when the camera isn't in the

default position, so that it all lines up.

// Note that even though objects aligned using screen.inc follow the

// camera, they are still part of the scene. That means that they will be

// affected by perspective, lighting, the surroundings etc.

So, it's related to the solution, in that one can correlate the <u, v> values with a RAY that extends through an infinite number of z-values, but the trick is: which z-value?

---

On 11/02/2023 18:48, Bald Eagle wrote:

>

> screen.inc doesn't do anything except organize things into a well-defined box

> that exactly matches the view frustum of the camera. The box is squished very

> thin, and positioned so that it fills the entire camera from edge to edge.

>

> So no, it still doesn't "convert 3D coordinates to 2D coordinates". What it

> does allow you to do is use <u, v> values of the image plane to place 3D objects

> at that location. That's why those values are in the -0.5 to 0.5 range But all

> that does is define the image plane as a constant z-value (or some distance

> along whatever vector the view the direction is defined as).

>

Sorry Bald, but I don't agree with you.

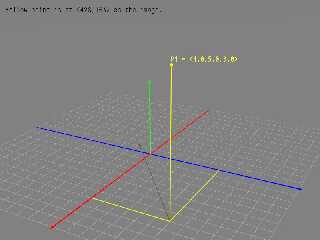

Look at the image in this message, rendered at 800x600.

The yellow point is at <4,5,3>. Those are indeed 3D coordinates.

Now the black text says that this same point has the coordinates x=428 (from left to right) and y=163 (from top to bottom). This is a 2D coordinate in the image.

Of course, if the image is rendered at 1024x768, the 2D coordinates are 548 and 208.

So the macro Get_Screen_XY(Loc) in screen.inc does convert 3D coordinates to 2D coordinates.

After that, it may not meet your needs, I agree ;)
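
The two sets of numbers are consistent with a resolution-independent image position: dividing by the image size gives about (0.535, 0.272) in both cases, and rescaling the 800x600 values gives 547.84 and 208.64, within a pixel of the reported 548 and 208 (the reported values were presumably already rounded). A quick check, assuming both renders share the same 4:3 aspect ratio, as 800x600 and 1024x768 do; the helper name is hypothetical.

```python
def rescale_pixel(px, py, from_size, to_size):
    """Map a pixel position between two render sizes with the same aspect ratio."""
    (w0, h0), (w1, h1) = from_size, to_size
    return (px / w0 * w1, py / h0 * h1)

# Normalized position of the yellow point, then the same point at 1024x768.
print(428 / 800, 163 / 600)
print(rescale_pixel(428, 163, (800, 600), (1024, 768)))
```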

--

Kurtz le pirate

Compagnie de la Banquise

Attachment: 'getscreen.jpg' (84 KB)