
Hi,

There is a post with the same subject in the general discussion. And

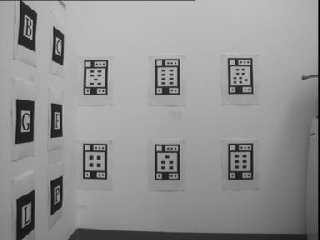

here is the real snap shot and the image generated by povray.

By looking at these two images, it seems that the marker is distorted,

which is probably the reason why the tracking is not accurate.

As I've mentioned in the general discussion, I have texture mapped the

marker into the scene. And here is the texture map statment:

```povray
box
{
  <0, 0, 0>, <21, 29.7, 0.01>
  pigment
  {
    image_map { png target_file once interpolate 2 map_type 0 }
    scale <21, 29.7, 0.01>
  }
}
```

The marker is actually printed on an A4 sheet of paper (21 x 29.7 cm), so I use the same dimensions in my code.

Is this code incorrect, or do I have to use some other options on the texture map?

regards

Colin


Attachments: 'us-ascii' (113 KB), 'us-ascii' (112 KB)


Colin wrote:

> Hi,

>

> here is the real snap shot and the image generated by povray.

Sorry, I can't read those files.

Could you post them in another format?

Regis.


I don't think many of us can view the images. Could you post them as JPG or PNG, please?

BTW, what format is it?

--

~Mike

Things! Billions of them!


Here are JPEG versions of the images.

regdo wrote:

> Colin wrote:

>

>> Hi,

>>

>

>> here is the real snap shot and the image generated by povray.

>

>

> Sorry, can't read that files.

> Could you post them in another format ?

>

> Regis.


Attachments: povray-gen.jpg (8 KB), real-snap.jpg (10 KB)


After reading both this post and the post in povray.general, I don't understand what problem you're having; otherwise I might have tried to help. From what I do understand, you're generating images with POV-Ray and then feeding these images into some sort of tracking system, and it's giving incorrect results. If that is the case, I would expect the tracking system to be doing something wrong, because the image you showed here looks fine. But the fact that you're asking your question on the POV-Ray newsgroups suggests you think your POV-Ray script is doing something wrong, not the tracking system... so I'm just confused. Are you trying to make an image with POV-Ray that looks the same as the real-world image? Is POV-Ray using the *output* of your tracking system, or is it an input?

> Is this code incorrect? Or I have to use some other options on the

> texture map?

Your code correctly maps an image onto the box.
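One way to rule the texture out, sketched here under some assumptions (the file name `marker.png` and the render size are placeholders), is to render the marker on its own, face-on, through an orthographic camera whose view covers exactly the 21 x 29.7 box. If that render is undistorted, the problem lies elsewhere. One thing worth knowing: `image_map` always stretches the whole image over the unit square, so a PNG whose pixel dimensions are not in the A4 ratio (about 1:1.414) will be distorted by the mapping itself.

```povray
// Hypothetical check scene: the marker alone, seen face-on, with an
// orthographic view that covers exactly the 21 x 29.7 box.
#declare target_file = "marker.png";   // placeholder file name

camera {
  orthographic
  location <10.5, 14.85, -10>   // centred on the box, in front of it
  right    x*21                 // visible width  = paper width
  up       y*29.7               // visible height = paper height
  look_at  <10.5, 14.85, 0>
}

box {
  <0, 0, 0>, <21, 29.7, 0.01>
  pigment {
    image_map { png target_file once interpolate 2 map_type 0 }
    scale <21, 29.7, 0.01>       // unit-square image stretched to A4
  }
  finish { ambient 1 diffuse 0 } // show image colours without any lights
}
```

Rendering with, for example, `+W420 +H594` keeps the pixel grid in the paper's 21:29.7 proportions, so the output can be compared directly with the source PNG.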

- Slime

[ http://www.slimeland.com/ ]


"Colin" <kla### [at] essex ac ac uk> wrote in message news:420bb2e2@news.povray.org...

> Hi,

>

> There is a post with the same subject in the general discussion. And

> here is the real snap shot and the image generated by povray.

Hi Colin, I think we need some more detail about what you are doing before we can help.

Presumably you have the tracking working correctly with the image from the camera, and the tracking software gives you a camera position as its output? Are you then attempting to use that position as a camera location in POV-Ray, to produce an image that will have the targets in the same positions as seen by the real camera?

If that is what you are trying to do, then the discrepancy is probably caused by your camera definition in POV-Ray. You will need to check the specifications of your camera's lens so that you can determine which settings to use in POV-Ray to simulate your real camera's field of view and focal length.
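As a hedged sketch of that step: for a pinhole model, the horizontal field of view follows from the sensor width w and focal length f as 2·atan(w / 2f), and POV-Ray's `angle` keyword takes that horizontal angle in degrees. The sensor and focal-length values below are placeholders, not the real camera's specifications.

```povray
// Hypothetical lens data: replace with the values from the camera's datasheet.
#declare SensorWidth = 4.8;   // mm, e.g. a 1/3" CCD (4.8 x 3.6 mm)
#declare FocalLength = 6.0;   // mm, placeholder

// Horizontal field of view of a pinhole camera, in degrees.
#declare HFov = 2*degrees(atan(SensorWidth/(2*FocalLength)));
#debug concat("Horizontal field of view: ", str(HFov, 0, 2), " degrees\n")

camera {
  location <61.5, 74.55, -200>
  right    x*4/3              // 384x288 output => 4:3 image
  angle    HFov               // horizontal viewing angle; sets direction length
  look_at  <61.5, 74.55, 0>
}
```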

It also appears that your POV-Ray camera is looking directly forward; are you sure that this is correct? What output does the tracking software provide: a camera position and a look-at position, a camera position and a direction, or something else?
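If the tracker reports a position and a viewing direction rather than a look-at point, one way to turn that into a POV-Ray camera is to look at position + direction. This is a sketch with made-up values; it also assumes the tracker already uses POV-Ray's left-handed, y-up axes, which is not guaranteed (a right-handed convention would need one axis, often z, flipped).

```povray
// Hypothetical tracker output (replace with the real values).
#declare CamPos = <61.5, 74.55, -200>;  // camera position in scene units
#declare CamDir = <0, 0, 1>;            // viewing direction, any length

camera {
  location CamPos
  right    x*4/3              // 4:3 output image
  angle    45                 // placeholder: the real lens's horizontal angle
  sky      y                  // camera "up"; change it to model camera roll
  look_at  CamPos + CamDir    // look along CamDir from CamPos
}
```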

Lance.

thezone - thezone.firewave.com.au

thehandle - www.thehandle.com


Hi all,

Oh well... I know it is confusing. I'm not really sure how to present the whole thing clearly, as the software is quite complicated.

Let's start from the beginning. I'm going to test a vision-based positioning system both in simulation and in a real-world environment. In past experience this system has been quite accurate. For now, I have to develop an augmented reality application that uses this positioning system as the basis for getting the user's location in the room.

The simulation is meant to test the accuracy of this system in an "ideal" environment, because the system hasn't been tested properly before. But surprisingly, the results of the simulation are very inaccurate.

Before doing the test, the camera has to be calibrated so that the software knows the lens properties of the camera. These properties are used in the complicated matrix transformations that calculate the camera position from the markers. The coordinates of each marker (the 4 centroid coordinates of the 4 regions in the corners of each target) are also supplied to the software so that it can calculate those transformations.

Therefore (as I am the one who knows the system best), I think the camera definition is not the major problem (maybe I'm wrong). Having posted (and looked at) these two images, I think the problem is the markers' aspect ratio. As Tim Nikias suggested, POV-Ray units shouldn't be the problem. With the markers placed at the same "distance" from the camera, the one generated by POV-Ray looks really distorted (taller and thinner). Since the target dimensions have to be supplied to the software, I think this is the major problem.

Here is the camera definition:

```povray
camera
{
  location <61.5, 74.55, -200>
  direction <0, 0, 1.7526>
  look_at <61.5, 74.55, 0>
}
```

The output image is rendered at 384x288 (the same aspect ratio as the default POV-Ray camera). And here is the texture-mapping code, which simulates a marker printed on A4 paper:

```povray
box
{
  <0, 0, 0>, <21, 29.7, 0.01>
  pigment
  {
    image_map { png target_file once interpolate 2 map_type 0 }
    scale <21, 29.7, 0.01>
  }
}
```

Setting the complicated positioning software aside, did I do anything wrong in simulating an A4 sheet of paper or in the texture mapping?
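To separate the modelling from the camera, it can help to work out where the marker should land in the render. A hedged sketch using the camera and resolution given above (the identifier names are illustrative): a face-on marker lies in a plane parallel to the image plane, and perspective projection maps such a plane by a uniform scale, so the rendered marker keeps the paper's 29.7:21 ratio as long as the right/up lengths match the pixel grid.

```povray
// Expected on-screen size of one face-on A4 marker for the camera above.
#declare ImgW     = 384;     // output width  in pixels
#declare ImgH     = 288;     // output height in pixels
#declare DirLen   = 1.7526;  // length of the camera's direction vector
#declare RightLen = 1.33;    // POV-Ray's default right vector length
#declare UpLen    = 1;       // POV-Ray's default up vector length
#declare Dist     = 200;     // camera at z = -200, marker face at z = 0

// Perspective projection scales a plane at depth Dist by DirLen/Dist;
// the right/up lengths then map view-plane units to pixels.
#declare PxW = 21   * DirLen/Dist / RightLen * ImgW;
#declare PxH = 29.7 * DirLen/Dist / UpLen    * ImgH;

#debug concat("Marker: ", str(PxW, 0, 1), " x ", str(PxH, 0, 1),
              " px, height/width = ", str(PxH/PxW, 0, 3), "\n")
```

Because the default right length is 1.33 rather than exactly 384/288, the printed ratio comes out about 0.25% below 29.7/21, which is too small to see by eye; `right x*384/288` in the camera would remove even that.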

Thanks for your attention, and sorry for the confusing post before. I hope I have made the issue clearer now.

Regards

Colin


"Lance Birch" <-> wrote:

> If that is what you are trying to do, then the discrepancy probably is caused by

> your camera definition in POV-Ray. You will need to check the specifications

> for the lens of your camera so that you can determine what settings should be

> used in POV-Ray to simulate your real camera's field of view and focal length.

It also seems that the "real" camera produces a slightly spherical projection, something between POV-Ray's normal and spherical camera types. It will probably be rather hard to reconstruct this kind of view. But if you want to go further, I suggest starting by matching the two cameras exactly. You can either add a converter that removes the spherical distortion from the real image, or experiment with the transformation matrix of the POV-Ray camera. It looks like the camera projects onto a spherical surface, but with the focus not at the centre of the sphere; it is closer to the surface.

I'm not sure I've made myself clear, but look at your picture taken with the camera and you will see that the edges are not straight. Obviously they can't match an image rendered with POV-Ray's normal (perspective) camera type.

Przemek


Ah - now I understand what you mean.

You're saying that you're trying to test the software's accuracy by having it track a POV-Ray-generated "perfect" image/environment, and the results should match the test with real camera images.

There are two possible reasons why you're getting strange results:

1. The POV-Ray camera is of a projection type or angle of view that the software can't easily calibrate to.
2. The tracking software is having a difficult time finding the targets because of the black background of the image.

I believe your problem is your camera's "direction" vector. Setting it to <0,0,1.7526> increases POV-Ray's viewing plane distance, which can be thought of as similar to a real camera lens's focal length: the longer the "direction" vector, the longer the focal length, and the more "zoomed in" your image will be. The longer the focal length, the smaller the angle of view, and this reduced viewing angle could be causing the tracking software to believe, incorrectly, that the camera is closer to the tracking targets than it really is, or cause it to calibrate incorrectly because it is expecting an angle of view within a certain range.
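To put numbers on this, here is a small sketch of the horizontal angle of view implied by a given direction length, assuming POV-Ray's default right vector length of 1.33: the view plane is 1.33 units wide at a distance of |direction| from the eye, so the half-angle is atan(0.665 / |direction|). With the default direction of length 1 this comes to about 67 degrees; with 1.7526 it drops to roughly 42 degrees.

```povray
// Horizontal viewing angle implied by the direction vector's length,
// with the default right vector <1.33, 0, 0>.
#declare RightLen   = 1.33;
#declare FovDefault = 2*degrees(atan(RightLen/(2*1.0)));     // direction <0,0,1>
#declare FovColin   = 2*degrees(atan(RightLen/(2*1.7526)));  // direction <0,0,1.7526>

#debug concat("default camera: ", str(FovDefault, 0, 1), " degrees\n")
#debug concat("Colin's camera: ", str(FovColin, 0, 1), " degrees\n")
```

If the real camera's angle is known, the `angle` keyword sets it directly and POV-Ray adjusts the direction length to match.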

Alternatively, maybe the tracking software is getting confused because the background colour of your POV-Ray image is black, and the tracking software is looking for a series of black targets. It might be a good idea to place a white plane behind the target objects so that the image has a white background (like the real camera image), which should make it easier for the software to find the targets.
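A minimal sketch of that white backdrop, assuming the marker boxes occupy the z = 0 to 0.01 slab as in Colin's code and the camera looks along +z: a plane slightly behind them, lit independently of any light sources so it renders as plain white.

```povray
// White backdrop just behind the markers: the plane z = 0.02.
plane {
  z, 0.02
  pigment { color rgb 1 }           // white
  finish { ambient 1 diffuse 0 }    // full brightness regardless of lights
}
```

If nothing else in the scene needs to sit behind the markers, `background { color rgb 1 }` gives a similar white backdrop with no extra geometry.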

So I'd suggest making the background of your POV-Ray image white and removing the "direction" from your camera, using only location and look_at to begin with, and seeing if this helps. If it doesn't, use the "angle" keyword (it's easier than the "direction" keyword) to adjust the viewing angle of POV-Ray's camera to match that of your real camera, then see whether the tracking software's results are more accurate.

Lance.

thezone - thezone.firewave.com.au

thehandle - www.thehandle.com


Colin <kla### [at] essex ac ac uk> wrote:

> The output image is rendered to 384x288 (which is the same aspect ratio

> as the default povray camera ratio). And here is the texture mapping

> code, which simulate a marker that has printed on an A4 size paper:

I have an idea about what could cause this problem. The aspect ratio of your image is exactly 4:3, counting in pixels, and it is also displayed on screen at 4:3. This causes the distortion, because typical PAL devices use pixels that are not square. Your image should be 352 pixels wide, which is half of the PAL resolution and covers the whole visible area on a 4:3 monitor. The rest of the pixels are margins, which are usually hidden.

PAL resolution = 704 x 576, displayed on a 4:3 screen

Half of PAL = 352 x 288, also displayed at 4:3

Pixel aspect = (704/4) / (576/3) = 0.9167 (pixels per unit width / pixels per unit height)

Your capture device gives you the whole image, without clipping the margins, so you have to clip them yourself to a width of 352 and then stretch the image to a 4:3 aspect.

The other, and maybe simpler, solution is to add `right x*(4/3)/0.9167` to your camera definition.

However, this has a disadvantage: both images remain distorted, and if your software uses sizes measured on the image, it may cause an error.
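A sketch of the second suggestion, assuming the captured frames really do have PAL-style non-square pixels (this is Przemek's hypothesis, not something confirmed in the thread). `right` expects a vector, so the correction is applied along x; a bare number there would be promoted to <n, n, n>, which is not what is wanted.

```povray
// Pixel aspect factor from Przemek's PAL numbers: 704x576 shown at 4:3.
#declare PixelAspect = (704/4)/(576/3);   // 176/192, about 0.9167
#debug concat("pixel aspect factor: ", str(PixelAspect, 0, 4), "\n")

camera {
  location  <61.5, 74.55, -200>
  direction <0, 0, 1.7526>
  right     x*(4/3)/PixelAspect   // about 1.4545 instead of the default 1.33
  look_at   <61.5, 74.55, 0>
}
```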

Greetings!

Przemek
