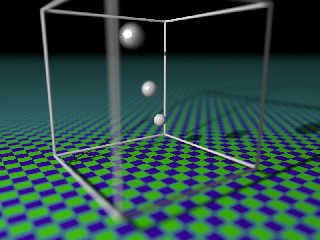

I'd had a version of depth of field blur in my stochastic render rig for some
time, but there was something not quite right with it.

After some time, and after reading Paul Bourke's website
(http://local.wasp.uwa.edu.au/~pbourke/modelling_rendering/povcameras/ and
http://local.wasp.uwa.edu.au/~pbourke/projection/stereorender/ helped the most),
I realised that I had made the classic blunder: I was moving the camera to
achieve focal blur, which gave me a focal point and not a focal plane.

The correct solution, as Paul and others have pointed out, is to use a shear
operation, which POV-Ray supports via a transform { matrix <...> } line in the
camera { ... } declaration.

If you are looking down the z axis, then all you need is

    camera {
        transform { matrix <1, 0, 0, 0, 1, 0, rx, ry, 1, 0, 0, 0> }
        ...
    }

where rx and ry are the amount of shearing that occurs, and therefore the amount
of depth of field blur.

If you are not looking along the z axis, then you need to transform the camera
so it is along the z axis, do the shear, then transform it back.

The results are exactly what I was after to start with, but had gotten wrong: a
nice clear focal plane, passing through the look_at point, and parallel to the
image plane.
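
For anyone wondering why a shear yields a plane rather than a point, here is a short worked form of the matrix above. It assumes POV-Ray's documented convention that the twelve values are read as three rows of a 3x3 matrix plus a translation row, applied to a row vector; the distance d is a symbol introduced here for illustration.

```latex
\begin{pmatrix} x' & y' & z' \end{pmatrix}
  = \begin{pmatrix} x & y & z \end{pmatrix}
    \begin{pmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ r_x & r_y & 1 \end{pmatrix}
  = \begin{pmatrix} x + r_x z & y + r_y z & z \end{pmatrix}

% Every point with z = 0 is left where it is:
(x,\; y,\; 0) \mapsto (x,\; y,\; 0)

% A camera sitting at distance d behind that plane slides sideways:
(0,\; 0,\; -d) \mapsto (-r_x d,\; -r_y d,\; -d)
```

So the whole plane z = 0 stays fixed while the camera is displaced in proportion to its distance from that plane, which would explain why everything in that plane stays sharp when differently sheared frames are averaged.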

Cheers,

Edouard.

Attachments:

Download 'dof-shear-fixed.jpg' (153 KB)

Source code to the above post.

(Also - I know this has been solved a bunch of times before, but this code
should also be useful to anyone wanting to create stereo pairs - just cut out
the random iris sampling stuff, set rx to +some_value and then -some_value
(with ry at zero), and you've got the two renders of the stereo pair.)

    #include "transforms.inc"

    // A different seed per frame gives each frame its own random lens sample
    #declare s = seed( frame_number );

    // The iris image wrapped as a function, so it can be sampled at any point
    #declare lens_shape =
    function {
        pigment {
            image_map {
                png "pentagon-outlined.png"
                once
            }
        }
    }

    // Rejection sampling: try random points in the unit square until one
    // passes the brightness test against the iris image
    #declare found = false;
    #while ( found = false )
        #declare test_pos = <rand(s), rand(s), 0>;
        #declare test_value = lens_shape( test_pos.x, test_pos.y, test_pos.z ).red;
        #if ( pow( rand(s), .333 ) <= test_value )
            // Re-centre the accepted sample on the origin
            #declare lens_offset = test_pos - <0.5,0.5,0>;
            #declare found = true;
        #end
    #end

    // Shear amounts; the 0.05 factor sets the strength of the blur
    #declare rx = lens_offset.x * 0.05;
    #declare ry = lens_offset.y * 0.05;

    #declare cam_location = <-10, 8, -15>;
    #declare look_location = <3, 3, 3>;
    #declare eye_direction = look_location - cam_location;

    camera {
        perspective
        transform {
            // Move look_at to the origin and turn the view direction onto z,
            // shear, then undo the rotation and the translation
            translate look_location * -1
            Reorient_Trans( eye_direction, z )
            matrix <1, 0, 0, 0, 1, 0, rx, ry, 1, 0, 0, 0>
            Reorient_Trans( z, eye_direction )
            translate look_location
        }
        location cam_location
        look_at look_location
        angle 60
        right x * 1
        up y * image_height/image_width
    }

    light_source {
        < -20, 15, -5>
        rgb 1
    }

    plane {
        <0.0, 1.0, 0.0>, 0.0
        material {
            texture {
                pigment {
                    checker
                    rgb <0.123476, 0.000000, 0.998413>,
                    rgb <0.107591, 0.993164, 0.000000>
                }
                finish {
                    diffuse 0.6
                    brilliance 1.0
                }
            }
        }
    }

    // Three small shiny spheres at different depths; the last one sits
    // at the look_at point
    sphere {
        <0, 6, 0>, 0.5
        pigment { rgb 1 }
        finish { specular 50 roughness 0.001 }
    }

    sphere {
        <-3, 9, -3>, 0.5
        pigment { rgb 1 }
        finish { specular 50 roughness 0.001 }
    }

    sphere {
        <3, 3, 3>, 0.5
        pigment { rgb 1 }
        finish { specular 50 roughness 0.001 }
    }

    // Wireframe cube built from its twelve edges
    union {
        cylinder { <-5, -5, -5>, <-5, -5, 5>, 0.1 }
        cylinder { <5, -5, -5>, <5, -5, 5>, 0.1 }
        cylinder { <-5, 5, -5>, <-5, 5, 5>, 0.1 }
        cylinder { <5, 5, -5>, <5, 5, 5>, 0.1 }
        cylinder { <-5, -5, -5>, <-5, 5, -5>, 0.1 }
        cylinder { <-5, -5, 5>, <-5, 5, 5>, 0.1 }
        cylinder { <5, -5, -5>, <5, 5, -5>, 0.1 }
        cylinder { <5, -5, 5>, <5, 5, 5>, 0.1 }
        cylinder { <-5, -5, -5>, <5, -5, -5>, 0.1 }
        cylinder { <-5, 5, -5>, <5, 5, -5>, 0.1 }
        cylinder { <-5, -5, 5>, <5, -5, 5>, 0.1 }
        cylinder { <-5, 5, 5>, <5, 5, 5>, 0.1 }
        translate y*6
        pigment { rgb 1 }
    }
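
The iris sampling loop in the scene above is a form of rejection sampling. As a rough illustration outside POV-Ray, the C++ sketch below does the same thing against a hypothetical `disc_mask` function standing in for the pentagon image; `disc_mask` and `sample_lens_offset` are names made up for this example. One difference to be aware of: the scene compares `pow(rand(s), .333)` with the pixel value, which accepts a point with a probability of roughly the cube of its brightness, whereas the sketch accepts with probability equal to the brightness itself.

```cpp
#include <cassert>
#include <functional>
#include <random>
#include <utility>

// Hypothetical stand-in for the iris image: returns a brightness in [0, 1]
// for a point (u, v) in the unit square. Here: a disc of radius 0.5.
inline double disc_mask(double u, double v) {
    const double dx = u - 0.5, dy = v - 0.5;
    return (dx * dx + dy * dy <= 0.25) ? 1.0 : 0.0;
}

// Draw one lens offset in [-0.5, 0.5]^2 by rejection sampling: propose a
// uniform point, accept it with probability mask(u, v), otherwise retry.
inline std::pair<double, double> sample_lens_offset(
    std::mt19937& rng, const std::function<double(double, double)>& mask) {
    std::uniform_real_distribution<double> uni(0.0, 1.0);
    for (;;) {
        const double u = uni(rng), v = uni(rng);
        const double brightness = mask(u, v);
        assert(brightness >= 0.0 && brightness <= 1.0);
        if (uni(rng) < brightness) {
            return {u - 0.5, v - 0.5};  // re-centre on the origin
        }
    }
}
```

Multiplying the returned pair by a small factor (0.05 in the scene) would give the rx and ry shear amounts for one frame.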

And the iris image I used

Cheers,

Edouard.

Attachments:

Download 'pentagon-outlined.png' (5 KB)

"Edouard Poor" <pov### [at] edouard info> wrote:

> I realised that I had made the classic blunder, and was moving the camera to

> achieve focal blur, which gave me a focal point, and not a focal plane.

This is useful information! Thanks! I occasionally mess around with averaged

frames, and I can get a certain kind of focal_blur by moving the camera

around... but this looks even better :)

Sam

"Samuel Benge" <stb### [at] hotmail com> wrote:

> "Edouard Poor" <pov### [at] edouard info> wrote:

> > I realised that I had made the classic blunder, and was moving the camera to

> > achieve focal blur, which gave me a focal point, and not a focal plane.

>

> This is useful information! Thanks! I occasionally mess around with averaged

> frames, and I can get a certain kind of focal_blur by moving the camera

> around... but this looks even better :)

Thank you! I'm glad someone found it useful.

Attached is my test object with focal blur using the technique above - I'd
posted one a few days ago, but this one has the corrected focal blur, and does
look a little better for it.

This image also has anti-aliasing done by moving both the camera and look_at
points by a sub-pixel distance in x and y, drawn from a random Gaussian function.
Not anti-aliasing each individual frame speeds up rendering quite noticeably.

The code adds the following over what I posted above:

    // This macro created by Rico Reusser <reu### [at] chorus net>
    // Box-Muller transform. log() is base 10 in POV-Ray, so dividing by
    // log(e) turns it into the natural logarithm.
    #declare e = 2.718281828459;
    #macro Gauss(RSR)
        sqrt(-2*log(rand(RSR))/log(e))*cos(2*pi*rand(RSR))
    #end

    #declare cam_location = <-14, 16, -20>;
    #declare look_location = < 0, 2.8, 0>;
    #declare eye_direction = look_location - cam_location;
    #declare rot_angle = VAngleD(z, eye_direction);

    // -- Anti-aliasing emulation ---------------------------
    #declare aa_scale = 1/ ( image_width / 6 );
    #declare rot = rand(s) * 360;
    #declare g = Gauss( s );
    // rot is in degrees, but sin() and cos() expect radians
    #declare offset_vec = < sin( radians(rot) ) * g, cos( radians(rot) ) * g, 0 >;
    // Rotate the offset out of the x-y plane into the camera's image plane
    #if ( rot_angle = 0 )
        #declare aa_offset = offset_vec;
    #else
        #declare aa_offset = vaxis_rotate(offset_vec, vcross(eye_direction, z), -rot_angle);
    #end

    // -- Camera declaration (already using focal_blur calculations) --------
    // s, rx and ry come from the scene posted earlier
    camera {
        perspective
        transform {
            translate look_location * -1
            Reorient_Trans( eye_direction, z )
            matrix <1, 0, 0, 0, 1, 0, rx, ry, 1, 0, 0, 0>
            Reorient_Trans( z, eye_direction )
            translate look_location
        }
        location cam_location + (aa_offset * aa_scale)
        look_at look_location + (aa_offset * aa_scale)
        angle 20
        right x * 1
        up y * image_height/image_width
    }
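
For readers who want to try the Gauss() formula outside POV-Ray, here is a minimal C++ sketch of the same Box-Muller expression. The function name `gauss` and the use of `std::mt19937` are choices made for this example, not part of the posted code. It also sidesteps one edge case: a uniform sample of exactly zero would make the logarithm blow up, so the sketch takes the log of 1 - u instead.

```cpp
#include <cassert>
#include <cmath>
#include <random>

// One standard-normal sample via the Box-Muller transform, the same
// formula as the Gauss() macro: sqrt(-2 ln u1) * cos(2 pi u2).
inline double gauss(std::mt19937& rng) {
    std::uniform_real_distribution<double> uni(0.0, 1.0);
    const double two_pi = 6.283185307179586;
    // uni() returns values in [0, 1); using 1 - u keeps the argument of
    // log() in (0, 1], so it is never zero.
    const double u1 = 1.0 - uni(rng);
    const double u2 = uni(rng);
    assert(u1 > 0.0);
    return std::sqrt(-2.0 * std::log(u1)) * std::cos(two_pi * u2);
}
```

Averaged over many calls the samples should have a mean near 0 and a variance near 1, which is the property the sub-pixel jitter relies on.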

The next big challenges (and I'm not sure I'm quite up to the task - maths is
not my strong point) are to define the focal blur in terms of the aperture for
the angle of the camera (i.e. the "lens"), and to automatically set the
aa_scale value based on the camera angle, camera to look_at distance, and image
size being rendered.
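
One possible starting point for the aa_scale part, offered as a sketch rather than a tested answer: if `angle` is taken as the horizontal field of view, the view at distance d is 2 d tan(angle / 2) wide, and one pixel covers that width divided by image_width. The helper name `pixel_size_at` is invented for this example.

```cpp
#include <cassert>
#include <cmath>

// World-space width of one pixel on a plane at distance `dist` in front of
// a perspective camera whose horizontal field of view is `angle_deg`.
inline double pixel_size_at(double angle_deg, double dist, int image_width) {
    assert(angle_deg > 0.0 && angle_deg < 180.0);
    assert(dist > 0.0 && image_width > 0);
    const double pi = 3.141592653589793;
    // Half the view width is dist * tan(angle / 2).
    const double plane_width = 2.0 * dist * std::tan(angle_deg * pi / 360.0);
    return plane_width / image_width;
}
```

With the values posted above (angle 20, camera at <-14, 16, -20>, look_at at <0, 2.8, 0>, so a distance of about 27.75), this gives roughly 9.8 / image_width per pixel at the look_at distance. Under that assumption the hand-picked 6 / image_width corresponds to a jitter spread of about 0.6 of a pixel.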

> Sam

Cheers,

Edouard.

Attachments:

Download 'dof-shear-test-object.jpg' (54 KB)

"Edouard Poor" <pov### [at] edouard info> wrote:

>

> Thank-you! I'm glad someone found it useful.

>

> Attached is my test object with focal blur using the technique above - I'd

> posted one a few days ago, but this one has the corrected focal blur, and does

> look a little better for it.

It looks good!

I tried rendering a 255-frame hdr version of your previously released code, and

of course I got the frames rendered just fine. The trouble came when I

attempted to average all 800x600p hdr images. I ran out of memory at one point,

I'm estimating at the 120th frame. I would have to average the whole sequence in

three parts, and then average the three parts together...

It would be nice to get the camera-shearing technique working with Rune's

illusion.inc, since I figured out a way to have each succeeding frame blend

proportionately with the previous ones. I can do motion blur that way, and see

the results accumulate. I don't think illusion.inc can take camera

transformations into account, and the result is a terrible "walking" of the

frames.
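
Blending each new frame proportionately with the previous ones, as described above, is presumably a running average. A minimal C++ sketch of that idea follows; the `RunningMean` type is hypothetical and is not taken from illusion.inc or from any code in this thread. Only one accumulator is held in memory, however many frames go in.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Running average of frames: after k calls to add(), `mean` holds the
// average of the k frames seen so far.
struct RunningMean {
    std::vector<double> mean;
    std::size_t count = 0;

    void add(const std::vector<float>& frame) {
        if (count == 0) mean.assign(frame.size(), 0.0);
        assert(frame.size() == mean.size());
        ++count;
        for (std::size_t i = 0; i < frame.size(); ++i) {
            // The new frame gets weight 1/k, the old mean weight (k-1)/k.
            mean[i] += (frame[i] - mean[i]) / static_cast<double>(count);
        }
    }
};
```

Keeping the accumulator in double precision while the frames are float is a deliberate choice here, to limit rounding drift over long sequences.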

Good work, at any rate. Have you tried moving the camera in a circular fashion

to produce an even result? I've got an array of 227 points distributed evenly

within a circle, if you are interested.

Sam

"Samuel Benge" <stb### [at] hotmail com> wrote:

> "Edouard Poor" <pov### [at] edouard info> wrote:

> >

> > Thank-you! I'm glad someone found it useful.

> >

> > Attached is my test object with focal blur using the technique above - I'd

> > posted one a few days ago, but this one has the corrected focal blur, and does

> > look a little better for it.

>

> It looks good!

>

> I tried rendering a 255-frame hdr version of your previously released code, and

> of course I got the frames rendered just fine. The trouble came when I

> attempted to average all 800x600p hdr images. I ran out of memory at one point,

> I'm estimating at the 120th frame. I would have to average the whole sequence in

> three parts, and then average the three parts together...

I wrote a simple command-line program to merge the HDR images - I'll try and

post the source somewhere soonish, but if you were on Mac OS X, I could just

post that executable straight away.

I've merged 10000 images with it, and doing it all at the end eliminates any
accumulation errors that might otherwise build up.

> Good work, at any rate. Have you tried moving the camera in a circular fashion

> to produce an even result? I've got an array of 227 points distributed evenly

> within a circle, if you are interested.

Usually a random distribution seems to work well, and different scenes require a

different number of frames to look good.

Actually, one issue I've noticed is that the random number generator in POV-Ray
doesn't seem to be quite as randomly distributed as I would like. Especially
when averaging deep DoF with specular highlights (or HDR reflections of point
light sources), I notice a discernible pattern appearing in the bokeh. I guess I
could test it by loading a random array produced externally (with some high
quality RNG) and comparing the results.

> Sam

Cheers,

Edouard.

Edouard Poor wrote:

> I wrote a simple command-line program to merge the HDR images - I'll try and

> post the source somewhere soonish, but if you were on Mac OS X, I could just

> post that executable straight away.

I'm running WinXP here. If your code uses SDL, then I might be able to

compile it myself.

> Usually a random distribution seems to work well, and different scenes require a

> different number of frames to look good.

>

> Actually one issue I've noticed is that I think that the random number generator

> in POV-Ray isn't quite as randomly distributed as I would like - esp with

> averaging deep DoF and specular highlights (or HDR reflections of point light

> sources) I notice a discernible pattern appearing in the bokeh. I guess I could

> test it by loading a random array produced externally (with some high quality

> RNG) and comparing the results.

What's RNG? Is that some sort of even distribution routine? I've been

able to make evenly-distributed random point arrays in the past by

testing each spot with a circle routine. It can be slow to compute

sometimes, but it's always nice to have such a point array handy.
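
The post doesn't spell out how the circle routine works. One common way to get such an array is "dart throwing": propose random points and keep only those far enough from every point already kept. The C++ sketch below assumes that reading; `scatter_in_disc`, `min_dist` and `max_tries` are names chosen for this example.

```cpp
#include <cassert>
#include <cmath>
#include <random>
#include <vector>

struct Point { double x, y; };

// Dart throwing: propose uniform points in the unit disc and keep a point
// only if it is at least `min_dist` away from every point kept so far.
inline std::vector<Point> scatter_in_disc(std::mt19937& rng, double min_dist,
                                          int max_tries) {
    assert(min_dist > 0.0 && max_tries > 0);
    std::uniform_real_distribution<double> uni(-1.0, 1.0);
    std::vector<Point> kept;
    for (int t = 0; t < max_tries; ++t) {
        const Point p{uni(rng), uni(rng)};
        if (p.x * p.x + p.y * p.y > 1.0) continue;  // outside the disc
        bool clear = true;
        for (const Point& q : kept) {
            if (std::hypot(p.x - q.x, p.y - q.y) < min_dist) {
                clear = false;
                break;
            }
        }
        if (clear) kept.push_back(p);
    }
    return kept;
}
```

Each proposal is checked against all kept points, so the cost grows quickly with the number of points, which fits the remark that the routine can be slow to compute.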

Sam

stbenge <THI### [at] hotmail com> wrote:

> Edouard Poor wrote:

> > I wrote a simple command-line program to merge the HDR images - I'll try and

> > post the source somewhere soonish, but if you were on Mac OS X, I could just

> > post that executable straight away.

>

> I'm running WinXP here. If your code uses SDL, then I might be able to

> compile it myself.

The source is very simple - if you've got a C++ compiler of some sort (e.g.
gcc/g++ or the free Visual Studio) you should be able to get something going
from this. Linux and Mac OS X will handle very long command lines (e.g. 1000
image names); I'm not sure about Windows though.

You'll need the rgbe files from

http://www.graphics.cornell.edu/online/formats/rgbe/ as well.

The output is "x.hdr".

    /*
     * join.cc
     *
     * g++ -g -o join join.cc rgbe.o
     *
     */

    // -- Include Files -----------------------------------------------------------

    #include <cstdio>
        // For: fopen(), FILE etc
    #include <cstdlib>
        // For: exit()
    extern "C"
    {
    #include "rgbe.h"
        // For: Radiance File Format Reading/Writing
    }
    #include <iostream>
        // For: cout, etc
    #include <string>
        // For: string

    // -- Namespace Directives ----------------------------------------------------

    using namespace std;

    // -- Classes -----------------------------------------------------------------

    class image
    {
    public:
        // Members are declared in the order the constructors initialise them
        int width;
        int height;
        double *pixel;      // width * height RGB triples, row by row

        image( int width, int height )
            : width( width ), height( height ),
              pixel( new double[ width * height * 3 ] )
        {
            for( int i = 0; i < width * height * 3; i++ )
                pixel[ i ] = 0.0;
        }

        ~image()
        {
            delete [] pixel;
        }

        // Channel p (0..2) of the pixel at column x, row y
        double& operator()( int x, int y, int p )
        {
            return pixel[ y * width * 3 + x * 3 + p ];
        }

        image& operator/=( const double d )
        {
            for( int i = 0; i < width * height * 3; i++ )
                pixel[ i ] /= d;
            return *this;
        }

        // Assumes both images have the same size; main() checks this
        image& operator+=( const image& other )
        {
            for( int i = 0; i < width * height * 3; i++ )
                pixel[ i ] += other.pixel[ i ];
            return *this;
        }

        image( const image& other )
            : width( other.width ), height( other.height ),
              pixel( new double[ other.width * other.height * 3 ] )
        {
            for( int i = 0; i < width * height * 3; i++ )
                pixel[ i ] = other.pixel[ i ];
        }

        image& operator=( const image& other )
        {
            if( this == &other ) return *this;
            delete [] pixel;
            width = other.width;
            height = other.height;
            pixel = new double[ width * height * 3 ];
            for( int i = 0; i < width * height * 3; i++ )
                pixel[ i ] = other.pixel[ i ];
            return *this;
        }
    };

    // -- Utility Functions -------------------------------------------------------

    image loadHDR( string filename )
    {
        int width, height;
        FILE *f = fopen( filename.c_str(), "rb" );
        if( f == NULL )
        {
            cerr << "Cannot open " << filename << endl;
            exit( 1 );
        }
        RGBE_ReadHeader( f, &width, &height, NULL );
        float *fim = new float[ width * height * 3 ];
        RGBE_ReadPixels_RLE( f, fim, width, height );
        fclose( f );

        // Copy the float pixels into a double-precision image
        image tmpImage( width, height );
        for( int y = 0; y < height; y++ )
            for( int x = 0; x < width; x++ )
                for( int p = 0; p < 3; p++ )
                    tmpImage( x, y, p ) = fim[ y * width * 3 + x * 3 + p ];
        delete [] fim;
        return tmpImage;
    }

    void saveHDR( image& im, string filename )
    {
        float *fim = new float[ im.width * im.height * 3 ];
        for( int y = 0; y < im.height; y++ )
            for( int x = 0; x < im.width; x++ )
                for( int p = 0; p < 3; p++ )
                    fim[ y * im.width * 3 + x * 3 + p ] = im( x, y, p );
        FILE *f = fopen( filename.c_str(), "wb" );
        if( f == NULL )
        {
            cerr << "Cannot write " << filename << endl;
            exit( 1 );
        }
        RGBE_WriteHeader( f, im.width, im.height, NULL );
        RGBE_WritePixels( f, fim, im.width * im.height );
        fclose( f );
        delete [] fim;
    }

    // -- Program Entry Point -----------------------------------------------------

    int main( int argc, char *argv[] )
    {
        if( argc < 2 )
        {
            cerr << "Usage: " << argv[ 0 ] << " first.hdr [more.hdr ...]" << endl;
            return 1;
        }
        cout << "Joining " << argc-1 << " images" << endl;
        cout << " " << argv[ 1 ] << endl;
        image base = loadHDR( argv[ 1 ] );
        for( int i = 2; i < argc; i++ )
        {
            cout << " " << argv[ i ] << endl;
            image im = loadHDR( argv[ i ] );
            if( im.width != base.width || im.height != base.height )
            {
                cerr << "Size mismatch: " << argv[ i ] << endl;
                return 1;
            }
            base += im;
        }
        // Divide the per-pixel sums by the number of images to get the mean
        base /= argc-1;
        saveHDR( base, "x.hdr" );
        cout << "Done." << endl;
        return 0;
    }

> > Usually a random distribution seems to work well, and different scenes require a

> > different number of frames to look good.

> >

> > Actually one issue I've noticed is that I think that the random number generator

> > in POV-Ray isn't quite as randomly distributed as I would like - esp with

> > averaging deep DoF and specular highlights (or HDR reflections of point light

> > sources) I notice a discernible pattern appearing in the bokeh. I guess I could

> > test it by loading a random array produced externally (with some high quality

> > RNG) and comparing the results.

>

> What's RNG? Is that some sort of even distribution routine? I've been

> able to make evenly-distributed random point arrays in the past by

> testing each spot with a circle routine. It can be slow to compute

> sometimes, but it's always nice to have such a point array handy.

Random Number Generator - POV-Ray's "rand()" (and "seed()"). POV's one is fast,
but I suspect it may show patterns when you use it in particular ways. An
interesting example of another RNG that was used in the past, but had problems
when used for 3D data, is at
http://www.cs.pitt.edu/~kirk/cs1501/animations/Random.html - POV's RNG is better
than that one I think, but might still show some non-randomness.
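
The linked page isn't identified here, but the best-known historical generator with exactly this 3D problem is RANDU, so the sketch below uses it as an assumed example. It says nothing about how POV-Ray's own rand() is implemented. The function names are invented for illustration.

```cpp
#include <cassert>
#include <cstdint>

// RANDU: x_{n+1} = 65539 * x_n mod 2^31, seeded with an odd number.
inline std::uint32_t randu_next(std::uint32_t x) {
    assert(x % 2 == 1);
    return static_cast<std::uint32_t>((65539ull * x) % 2147483648ull);
}

// Every three consecutive outputs satisfy
//   x_{n+2} = 6 * x_{n+1} - 9 * x_n  (mod 2^31),
// which is why triples used as 3D points fall onto a small set of planes.
inline bool triple_is_correlated(std::uint32_t x0) {
    const std::uint64_t m = 2147483648ull;  // 2^31
    const std::uint64_t a = x0;
    const std::uint64_t x1 = randu_next(x0);
    const std::uint64_t x2 = randu_next(static_cast<std::uint32_t>(x1));
    // Add 9 * m before subtracting so the unsigned value never underflows.
    return x2 == (6 * x1 + 9 * m - 9 * a) % m;
}
```

A similar check, plotting successive triples from rand() as points and looking for visible structure, might be one way to test the suspicion about patterns in the bokeh.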

> Sam

Cheers,

Edouard.

I'd like to know, what is the difference between this and POV-Ray's inbuilt

focal blur?

Shouldn't the POV blur be faster, since it can use the adaptive level to skip

some samples?

And if this yields a better result than the inbuilt, how much work would it be

to implement this version instead of POV's current version?

....Chambers
