From: Alain Martel

Subject: Re: Denoising POV-Ray images in Blender

Date: 15 Jan 2023 12:12:42

Message: <63c4340a@news.povray.org>

On 2023-01-13 at 17:33, Samuel B. wrote:

> Hi everyone,

>

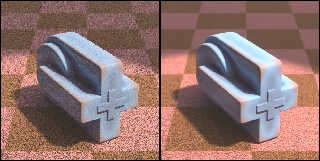

> On the left is a noisy image produced with POV-Ray, and on the right is the same

> image, but denoised in Blender. It was super easy to set up.

>

> The scene was rendered in UberPOV using no_cache radiosity and a count of 1, and

> 8 blur_samples for the camera. No AA. (It rendered in only 14 seconds. The

> denoising in Blender took a little over a second.)

>

> Works nicely, eh? I don't know yet if it will handle media, transparent objects

> and other such things, but this initial result is promising!

>

> Sam

Looks like a simple blur operation using a 3x3 block size.
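For comparison, here is a minimal Python sketch of what a plain 3x3 box blur does: every pixel becomes the mean of its 3x3 neighbourhood. The function name `box_blur_3x3` and the 5x5 test image are made up for illustration and are not taken from Blender or POV-Ray.

```python
def box_blur_3x3(img):
    """Return a copy of a 2D grayscale image (list of rows) in which each
    pixel is the mean of its 3x3 neighbourhood. Edge pixels average only
    the neighbours that actually exist."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        total += img[ny][nx]
                        count += 1
            out[y][x] = total / count
    return out

# A single bright pixel on a dark 5x5 background (hypothetical test data).
noisy = [[0.0] * 5 for _ in range(5)]
noisy[2][2] = 9.0
blurred = box_blur_3x3(noisy)
```

A filter like this spreads a stray bright pixel over its neighbours, but it softens every real edge by the same amount.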

Alain Martel <kua### [at] videotron ca> wrote:

> > Hi everyone,

> >

> > On the left is a noisy image produced with POV-Ray, and on the right is the same

> > image, but denoised in Blender. It was super easy to set up.

> > (...)

>

> Looks like a simple blur operation using a 3x3 block size.

Hi Alain,

I don't think it's that simple... Here's an article about it:

https://www.intel.com/content/www/us/en/developer/articles/technical/intel-employs-ml-to-create-graphics.html#gs.mshzmp

I haven't read through the whole thing, but they used machine learning somehow.

My guess is that they fed a neural network image pairs: one completely converged

(noise-free) image, and the same scene, but really noisy. The system eventually

learned that when the noise looks a certain way, it's meant to turn out a

certain way.
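The guess above can be illustrated with a toy experiment in Python. This is only a sketch under loud assumptions: the "network" is a single 3-tap linear filter, the "images" are 1D sine waves with Gaussian noise, and all names (`make_pair`, `apply_filter`, `train`) are hypothetical. It says nothing about how Intel's denoiser is actually built or trained; it only shows the idea of learning from noisy/converged pairs.

```python
import math
import random

random.seed(0)  # fixed seed so the run is repeatable

def make_pair(n=64, sigma=0.2):
    """One training pair: a smooth 'converged' signal and a noisy copy."""
    phase = random.uniform(0.0, 2.0 * math.pi)
    clean = [math.sin(2.0 * math.pi * i / n + phase) for i in range(n)]
    noisy = [c + random.gauss(0.0, sigma) for c in clean]
    return noisy, clean

def apply_filter(w, noisy):
    """Apply a 3-tap linear filter to the interior samples."""
    return [w[0] * noisy[i - 1] + w[1] * noisy[i] + w[2] * noisy[i + 1]
            for i in range(1, len(noisy) - 1)]

def mse(w, pairs):
    """Mean squared error between filtered noisy signals and clean targets."""
    total, count = 0.0, 0
    for noisy, clean in pairs:
        for pred, target in zip(apply_filter(w, noisy), clean[1:-1]):
            total += (pred - target) ** 2
            count += 1
    return total / count

def train(pairs, steps=200, lr=0.1):
    """Full-batch gradient descent on the three filter weights."""
    w = [0.0, 1.0, 0.0]  # start as the identity filter (no denoising)
    for _ in range(steps):
        grad, count = [0.0, 0.0, 0.0], 0
        for noisy, clean in pairs:
            for i in range(1, len(noisy) - 1):
                window = noisy[i - 1:i + 2]
                err = sum(wk * xk for wk, xk in zip(w, window)) - clean[i]
                for k in range(3):
                    grad[k] += 2.0 * err * window[k]
                count += 1
        w = [wk - lr * gk / count for wk, gk in zip(w, grad)]
    return w

pairs = [make_pair() for _ in range(10)]
learned = train(pairs)
loss_before = mse([0.0, 1.0, 0.0], pairs)  # error of the untouched noisy input
loss_after = mse(learned, pairs)           # error after learning from the pairs
```

Comparing `loss_before` with `loss_after` shows how much closer the learned filter gets to the clean targets than the raw noisy input does.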

Sam

Here's a striking example of how well Intel's denoiser works. The plastic

material uses backside illumination, reflection, refraction, and a tiny granite

normal for roughness. This kind of material needs at least two radiosity bounces

to look decent, so render times can really go through the roof under normal

circumstances. Observe the reflection highlights. The denoiser was able to make

sense of the clusters of white pixels.

Attachment: 'blender-uberpov-ducting-13m_51s.jpg' (332 KB)

On 16-1-2023 at 16:22, Samuel B. wrote:

> Here's a striking example of how well Intel's denoiser works. The plastic

> material uses backside illumination, reflection, refraction, and a tiny granite

> normal for roughness. This kind of material needs at least two radiosity bounces

> to look decent, so render times can really go through the roof under normal

> circumstances. Observe the reflection highlights. The denoiser was able to make

> sense of the clusters of white pixels.

This one is particularly impressive, Sam.

--

Thomas

A proximity pattern test. This type of proximity method can be quite noisy at

times, depending on the number of samples used. This object got 12 samples and

the scene took 35 seconds to render using low radiosity and focal blur settings.

Just in case anyone wants it, here's the proximity macro:

    /* Proximity pattern macro
         ProxObject = input object
         Radius     = proximity radius
         Samples    = number of proximity samples
         TurbScale  = scale of turbulence
         TurbAmt    = amount of turbulence
       Example: Object_Prox(MyObject, .3, 20, 1000, 100)
    */
    #macro Object_Prox(ProxObject, Radius, Samples, TurbScale, TurbAmt)
      pigment_pattern{
        average
        pigment_map{
          // fermat spiral-sphere distribution
          #local Inc = pi * (3 - sqrt(5));
          #local Off = 2 / Samples;
          #for(K, 0, Samples-1)
            #local Y = K * Off - 1 + (Off / 2);
            #local R = sqrt(1 - Y*Y);
            #local Phi = K * Inc;
            [1
              // object pattern with small-scale turbulence
              pigment_pattern{
                object{ProxObject}
                scale TurbScale
                warp{turbulence TurbAmt lambda 1}
                scale 1/TurbScale
                translate <cos(Phi)*R, Y, sin(Phi)*R>*Radius
              }
            ]
          #end
        }
      }
    #end
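The sample placement in the macro can be checked outside POV-Ray. The Python sketch below mirrors the `Inc`, `Off`, `Y`, `R` and `Phi` calculations from `Object_Prox`; the function name `fermat_sphere_offsets` is an assumption made for illustration and is not part of the macro.

```python
import math

def fermat_sphere_offsets(samples, radius=1.0):
    """Return the translate offsets that the macro's #for loop would
    generate, as (x, y, z) tuples."""
    inc = math.pi * (3.0 - math.sqrt(5.0))  # golden angle in radians
    off = 2.0 / samples                     # spacing between sample heights
    points = []
    for k in range(samples):
        y = k * off - 1.0 + off / 2.0       # evenly spaced heights in (-1, 1)
        r = math.sqrt(1.0 - y * y)          # circle radius at that height
        phi = k * inc                       # rotate by the golden angle each step
        points.append((math.cos(phi) * r * radius,
                       y * radius,
                       math.sin(phi) * r * radius))
    return points

# Same values as the 12-sample test render, with a hypothetical radius of 0.3.
offsets = fermat_sphere_offsets(12, radius=0.3)
```

Every offset lies at distance `Radius` from the origin and the heights are evenly spaced, so the translated copies of the object pattern sample the surrounding sphere in all directions rather than clustering at the poles.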

Attachment: 'denoise-prox-test3.jpg' (338 KB)

Thomas de Groot <tho### [at] degroot org> wrote:

> On 16-1-2023 at 16:22, Samuel B. wrote:

> > Here's a striking example of how well Intel's denoiser works. The plastic

> > material uses backside illumination, reflection, refraction, and a tiny granite

> > normal for roughness. (...)

> This one is particularly impressive, Sam.

> --

> Thomas

Hi Thomas,

Yeah, it's amazing how effective it can be. But writing POV script to output

three different images is a bit of a chore (for a few reasons). It would be cool

if a future version of POV-Ray supported denoising natively, as a plugin or

something, so that all the difficult stuff was done behind the scenes.

Sam

"Samuel B." <stb### [at] hotmail com> wrote:

> A proximity pattern test. This type of proximity method can be quite noisy at

> times, depending on the number of samples used. This object got 12 samples and

> the scene took 35 seconds to render using low radiosity and focal blur settings.

>

This is the best example of the denoiser technique that you've posted so far,

IMO. I am amazed that it can discern true noise from your applied ground pattern

that looks *almost like* noise. The foreground detail looks nice and sharp.

It would be interesting to see this same scene denoised but without the original

focal blur-- to see at what point (if any!) in the receding distance the

denoiser might mistake the smaller and smaller actual ground pattern detail for

what it 'perceives' as noise. To see if the denoiser tries to blur it there when

it should not(?)

----

BTW: A few days ago, I downloaded your 2013 file "ToVolume: Object-To-Volume

Conversion and Rendering Process". It also has an interesting and much more

complex 'proximity' file included. I'm sorry to admit that I haven't yet played

around with your amazing proximity pattern :-( I'm still going through that file

to try and understand its workings; much of it is beyond my brain-power, ha.

"Kenneth" <kdw### [at] gmail com> wrote:

>

> It would be interesting to see this same scene denoised but without the original

> focal blur--

Oops, what I meant to say was, *with* focal blur (and the inherent noise of low

blur samples) but so that the depth-of-field is much wider and not soft-focus

(if that makes sense.) In other words, noisy but not actually blurry-looking.

I'm wondering if the denoiser can distinguish the ground pattern in the

foreground from the same un-blurred smaller pattern in the receding distance.

"Kenneth" <kdw### [at] gmail com> wrote:

> "Samuel B." <stb### [at] hotmail com> wrote:

> > A proximity pattern test. (...)

>

> This is the best example of the denoiser technique that you've posted so far,

> IMO. I am amazed that it can discern true noise from your applied ground pattern

> that looks *almost like* noise. The foreground detail looks nice and sharp.

>

> It would be interesting to see this same scene denoised but without the original

> focal blur-- to see at what point (if any!) in the receding distance the

> denoiser might mistake the smaller and smaller actual ground pattern detail for

> what it 'perceives' as noise. To see if the denoiser tries to blur it there when

> it should not(?)

>

> ----

> BTW: A few days ago, I downloaded your 2013 file "ToVolume: Object-To-Volume

> Conversion and Rendering Process". It also has an interesting and much more

> complex 'proximity' file included. I'm sorry to admit that I haven't yet played

> around with your amazing proximity pattern :-( I'm still going through that file

> to try and understand its workings; much of it is beyond my brain-power, ha.

Hi Kenneth,

The denoiser was able to preserve the ground bump largely thanks to the normal

pass I included. It also helps that the normal and albedo passes can take more

camera blur samples than the final pass, since they are faster to render.
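One way to picture why a clean auxiliary pass helps is a hand-written edge-stopping filter. The Python sketch below is an assumption-laden stand-in, not what Intel's denoiser does internally: it averages each noisy sample only with neighbours whose guide value (standing in for a normal or albedo pass) is similar. The names `guided_average`, `color` and `guide` and the sample values are hypothetical.

```python
def guided_average(color, guide, radius=2, tolerance=0.1):
    """Average each sample with nearby samples whose guide value is within
    `tolerance` of its own, so smoothing stops at edges in the guide."""
    out = []
    for i in range(len(color)):
        total, count = 0.0, 0
        lo = max(0, i - radius)
        hi = min(len(color), i + radius + 1)
        for j in range(lo, hi):
            if abs(guide[j] - guide[i]) <= tolerance:
                total += color[j]
                count += 1
        out.append(total / count)  # count >= 1: sample i always matches itself
    return out

# A noisy 1D "beauty pass" across an edge, plus a clean guide marking the edge.
color = [0.2, -0.1, 0.1, -0.2, 1.1, 0.9, 1.2, 0.8]
guide = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]

smoothed = guided_average(color, guide)          # edge kept sharp
plain = guided_average(color, [0.0] * len(color))  # flat guide: edge smeared
```

With the guide, the step between samples 3 and 4 survives the smoothing; with a flat guide the same filter blurs straight across it.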

Regarding what it would look like with no obvious focal blur, I'm /guessing/

that the denoiser would smooth out everything under a certain size and color

threshold.

Re: ToVolume. I can't remember which type of proximity technique I used for

that. And I would probably be a bit lost myself, opening up that file after all

these years... that tends to happen with old projects ;)

Sam

On 20/01/2023 at 23:56, Samuel B. wrote:

> "Kenneth" <kdw### [at] gmail com> wrote:

>> "Samuel B." <stb### [at] hotmail com> wrote:

>>> A proximity pattern test. (...)

>>

>> This is the best example of the denoiser technique that you've posted so far,

>> IMO. I am amazed that it can discern true noise from your applied ground pattern

>> that looks *almost like* noise. The foreground detail looks nice and sharp.

>>

>> It would be interesting to see this same scene denoised but without the original

>> focal blur-- to see at what point (if any!) in the receding distance the

>> denoiser might mistake the smaller and smaller actual ground pattern detail for

>> what it 'perceives' as noise. To see if the denoiser tries to blur it there when

>> it should not(?)

>>

>> ----

>> BTW: A few days ago, I downloaded your 2013 file "ToVolume: Object-To-Volume

>> Conversion and Rendering Process". It also has an interesting and much more

>> complex 'proximity' file included. I'm sorry to admit that I haven't yet played

>> around with your amazing proximity pattern :-( I'm still going through that file

>> to try and understand its workings; much of it is beyond my brain-power, ha.

>

> Hi Kenneth,

>

> The denoiser was able to preserve the ground bump largely thanks to the normal

> pass I included. It also helps that the normal and albedo passes can take more

> camera blur samples than the final pass, since they are faster to render.

>

> Regarding what it would look like with no obvious focal blur, I'm /guessing/

> that the denoiser would smooth out everything under a certain size and color

> threshold.

>

> Re: ToVolume. I can't remember which type of proximity technique I used for

> that. And I would probably be a bit lost myself, opening up that file after all

> these years... that tends to happen with old projects ;)

>

> Sam

>

There are/were also your "fastProx" and "nestProx" includes for doing

proximity patterns. It has been a while since I last used them. They

tended to be /superseded/ by Edouard Poor's "df3prox-0.95" utility in my

(slight) personal choice/preference ;-)

However, they are a notable part of my large collection of POV-Ray

utilities created by the user community. Good opportunity to say a warm

Thank You.

--

Thomas