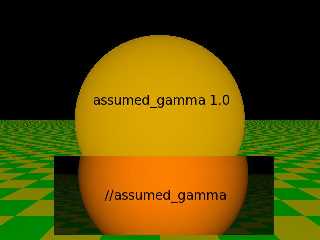

The Gods of non-linearity are having fun with me today. No wonder the 19th century was so good for art: they didn't have gamma to contend with.

Attached is my render of the following scene, with an inset of what happens when I remove the assumed_gamma. The scene is so simple that I'm sure I haven't done anything stupid. Haven't I? Surely, an orange sphere should be orange?

I get similar results with no .ini file, and an .ini file containing "display_gamma 2.5" (which is what, in theory, I should be using). Is something broken in my install?

Please help.

#include "colors.inc"

global_settings {

assumed_gamma 1

}

plane { y, -2 texture { pigment { checker colour Green colour Yellow} }}

light_source { -10*z colour White}

camera { location -3*z look_at 0 }

sphere {0, 1

texture {pigment {Orange}} // Did I say "banana"? Nooooooooo.

}

Sorry, I omitted the useful details: Official povray 3.6.1 binary on i686-pc-linux-gnu, LibPNG 1.2.5. There are no gamma settings in povray.conf.

Thanks again.

You'll see exactly the same thing if you take a photo of an orange, then load it into your favourite paint program and gamma correct it.

Gamma effectively adjusts the brightness of the midtones and leaves 0 and 1 in the same place, so if your orange colour is <1,0.5,0> then with assumed_gamma 1 it will look more like <1,.73,0>, which is <1,pow(0.5,1/2.2),0>, because your monitor gamma is probably 2.2ish.

If you want to pick a colour in a paint program and have it look the same in pov then you need to gamma correct it from your monitor's gamma space (the space you picked it in) into the assumed_gamma space you've told pov to work in. Usually that means applying pow(<colour>, 2.2) to each channel.
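A minimal sketch of that conversion, assuming a display gamma of 2.2 and a colour picked by eye as <1, 0.5, 0>. In POV-Ray 3.6 `pow()` works on floats, so the exponent is applied to each channel separately. The names `Monitor_Gamma`, `Picked_To_Linear` and `Picked_Orange` are made up for this example; they are not part of colors.inc or any standard include.

```povray
// Hypothetical helper, not part of any standard include file.
// Converts a colour picked on a gamma-encoded display into the linear
// (assumed_gamma 1) space by raising each channel to the display gamma.
#declare Monitor_Gamma = 2.2;  // assumption: a typical PC display

#macro Picked_To_Linear(C)
  rgb <pow(C.red,   Monitor_Gamma),
       pow(C.green, Monitor_Gamma),
       pow(C.blue,  Monitor_Gamma)>
#end

global_settings { assumed_gamma 1 }

camera { location -3*z look_at 0 }
light_source { -10*z color rgb 1 }

// The value chosen by eye in a paint program.
#declare Picked_Orange = rgb <1, 0.5, 0>;

sphere { 0, 1
  // Expands to roughly rgb <1, 0.22, 0>, which the display then
  // brightens back towards the orange that was picked.
  pigment { Picked_To_Linear(Picked_Orange) }
}
```

The green channel is the only one that moves, because 0 and 1 are fixed points of the power function.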

Personally I find it's just easier to always work with assumed_gamma 1 turned on, and pick numbers in pov that look right, and forget about gamma space altogether.

--

Tek

http://evilsuperbrain.com

"Ard" <ard### [at] waikato ac ac nz> wrote:

> The Gods of non-linearity are having fun with me today. No wonder the 19th
> century was so good for art: they didn't have gamma to contend with.
>

Since I like to send my POV images to friends who use both PCs and Macs, I decided to strike a "compromise" with the gamma settings.

I decided on 2.0 (the average between the Mac's 1.8 and the PC's 2.2), both as an assumed_gamma AND in all of my QUICKRES.INI entries. This way, I can be pretty sure my images will look *almost* the way they should, no matter who gets 'em.

Of course, with modern computers and monitors, anyone can now fiddle around with the gammas to suit themselves, which sounds like a recipe for...chaos!!! :-)

Ken

In article <437420f7$1@news.povray.org>, tek### [at] evilsuperbrain com says...

> You'll see exactly the same thing if you take a photo of an orange, then
> load it into your favourite paint program and gamma correct it.
>
> Gamma effectively adjusts the brightness of the midtones and leaves 0 and 1
> in the same place, so if your orange colour is <1,0.5,0> then with
> assumed_gamma it will look more like <1,.73,0>, which is
> <1,pow(0.5,1/2.2),0> because your monitor gamma is probably 2.2ish.
>
> If you want to pick a colour in a paint program and have it look the same in
> pov then you need to gamma correct it from your monitor's gamma space (the
> space you picked it in) into the assumed_gamma space you've told pov to work
> in. Usually that means applying pow(<colour>, 2.2) to it.
>
> Personally I find it's just easier to always work with assumed_gamma 1
> turned on, and pick numbers in pov that look right, and forget about gamma
> space altogether.
>

The assumption (or at least how I assume it's supposed to work) is that they set your assumed gamma to what your display actually is, so that if someone looks at it on one with a 1.0, it looks the same as on a 2.2, or the same on a 2.2 as it did on your 1.0 display, etc. What people are screwing up is that they are setting the gamma to something that has *nothing* to do with their display's actual gamma, so when they load it on something else, it looks wrong. In the case of programs that correctly handle the setting in the file, *they* are assuming the 'real' gamma is different from your monitor's and are dropping or increasing the image colors to 'match' what they 'think' your display uses, which is by default 1.0, not 2.2. In other words, those programs are doing exactly what they are told and compensating for *their* assumed gamma of 1.0, by shifting everything to 2.2, when in fact the display is the same one as used to produce the image, so 'should' have the same 'real' gamma.

Basically, if you use the damn thing wrong, it's your own fault when it doesn't work, which is imho a real good reason to leave it at 1.0, then let those applications, if they are set up correctly, adjust things to the gamma of the display, not the other way around. It's like manufacturing something that uses AA batteries, then 'suggesting' in the manual that they try to cram a 9V battery into it. Of course it's going to go wrong.

--

void main () {

call functional_code()

else

call crash_windows();

}

I didn't understand all of that, but I'll try to explain my understanding of gamma:

1/ Your monitor & computer do not display linearly scaled brightness values, i.e. if you write a value to a pixel on the screen, then double that value, it won't get exactly twice as bright. Normally, nobody cares about this: you draw a picture using a value of 100, and that looks more or less the same on everybody's machines because a value of 100 on their screens looks roughly like a value of 100 on your screen.

2/ The first problem comes when you find someone with a computer/monitor that makes everything brighter or darker than yours. It's possible to gamma correct for the difference, but in general you should make images that will look right on most people's PCs (probably windows PCs, probably gamma around 2.2).

3/ Some image viewing/editing programs do the phenomenally irritating thing of "correcting" this, meaning that although the file says "100" they'll actually draw it as 125 or something, and then you wonder why you have uneven colour banding in the dark colours. I'm looking at you, photoshop. If you like seeing colours as numbers (and if you're using pov or picking colours for websites I think maybe you do), find the option to turn this "feature" off in that program. If you want to gamma correct an image there's another menu option to do it anyway.

4/ And finally, why is any of this relevant to povray? Well pov has 2 numbers:

(i) in the ini file, pov has a setting for your display gamma. This is NOT your display gamma! If, like all of us, you want to render pictures for everyone to see, you should pick a gamma setting that everyone else uses. Apparently (I'm told) this is about 2.2.

(ii) assumed_gamma 1 tells povray that the colour space for your scene has a gamma of 1. THIS IS IMPORTANT! When twice as much light hits something, it is twice as bright, because there's twice as much energy. Povray will always internally compute the same lighting calculations on the assumption that you're working in a linear colour space (i.e. when you shine 2 lights on something, you add them together, pov always does this). Pov will then gamma correct the final image from the assumed_gamma space to the display_gamma space.
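To see why the order matters in point 4(ii), here is a small parse-time calculation. It is only a sketch: the two light contributions of 0.25 and the display gamma of 2.2 are assumed values for illustration, and the identifiers are made up. It writes to POV-Ray's debug stream and adds nothing to the scene.

```povray
// Illustrative arithmetic only; the values are assumptions, not from a real scene.
#declare Display_G = 2.2;   // assumed display gamma
#declare Light_A   = 0.25;  // linear contribution of the first light
#declare Light_B   = 0.25;  // linear contribution of the second light

// Add the light in linear space, then encode once for the display.
#declare Linear_Sum  = Light_A + Light_B;
#declare Encoded_Sum = pow(Linear_Sum, 1/Display_G);

// For contrast: encode each contribution first, then add the encoded values.
#declare Sum_Of_Encoded = pow(Light_A, 1/Display_G) + pow(Light_B, 1/Display_G);

#debug concat("linear sum, then encoded: ", str(Encoded_Sum, 0, 3), "\n")
#debug concat("encoded, then summed:     ", str(Sum_Of_Encoded, 0, 3), "\n")
```

The first figure comes out near 0.73. The second comes out above 1.0, so the surface would clip to full brightness even though only half the maximum light is hitting it.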

IN CONCLUSION:

You want to tell pov to gamma correct the image for your monitor (or the monitor of whoever's looking at it), so you do the following:

assumed_gamma 1 - tells pov to calculate the scene in linear colour (scientifically correct)

display_gamma 2.2 - (or whatever your PC/monitor use) tells pov to take the scientifically calculated image and adjust it, like a photograph, to fit the colour-space of your screen.

I have no idea why pov has 2 numbers, because it only gamma corrects once, using the ratio of those numbers.
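A sketch of that single correction, taking the description above at face value: the linear result is raised to the power assumed_gamma / display_gamma. The identifiers are hypothetical names for this example, and the INI line in the first comment assumes the `Display_Gamma=` option spelling used in POV-Ray INI files.

```povray
// In the INI file (not in the scene):   Display_Gamma=2.2
global_settings { assumed_gamma 1 }

// Case 1: assumed_gamma 1 with a display gamma of 2.2.
// The exponent is 1/2.2, so a linear mid-grey is brightened.
#declare Stored_A = pow(0.5, 1.0/2.2);

// Case 2: both numbers set to 2.0, as in the "compromise" setup.
// The exponent is 1, so nothing is corrected at all.
#declare Stored_B = pow(0.5, 2.0/2.0);

#debug concat("assumed 1, display 2.2: ", str(Stored_A, 0, 3), "\n")
#debug concat("assumed 2, display 2:   ", str(Stored_B, 0, 3), "\n")
```

Only the ratio appears in the exponent, which is why setting both numbers to the same value behaves like no correction.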

--

Tek

http://evilsuperbrain.com

P.S. sorry for the ranting, but it's taken me 5 years to understand I should have always used assumed_gamma 1, so I kinda want to make sure everyone else knows that too.

P.P.S. to answer the original question: the colour looks different because pov is gamma-correcting, so if you want it to look the same you need to reverse gamma-correct the colour before giving it to pov. pow( colour, 2.2 ) should do it. Telling pov not to gamma correct will make the colour look right, but the lighting will become wrong.

P.P.P.S. if you build a scene without assumed_gamma 1, it will usually look fine anyway, so this is all academic. Ah the irony.

"Tek" <tek### [at] evilsuperbrain com> wrote:

> ...I'll try to explain my understanding of
> gamma:
>

THANK YOU THANK YOU THANK YOU! This is the absolute best discussion of the topic I've yet seen. Up until this moment, I've been using an assumed_gamma of 2 ...and wondering WHY so many people choose 1 instead.

Time to make the change!

So if I set assumed_gamma to be 1.0, and want my final POV images to be viewable on others' machines (both PC and Mac), should I set the display_gamma (in my QUICKRES.INI file) to a "compromise" value of 2.0 (telling my own monitor to "expand" the color space to 2...the average of the PC's 2.2 and the Mac's 1.8), so that THEIR machines display the best version of my image possible--i.e., that as nearly as possible matches what I see when I render it?

Ken

Patrick Elliott <sha### [at] hotmail com> wrote:

> >What people are
> screwing up is that they are setting the gamma to something that has
> *nothing* to do with their displays actual gamma, so when they load it
> one something else, it looks wrong. In the case of programs that
> correctly handle the setting in the file, *they* are assuming the 'real'
> gamma is different that your monitor and are dropping or increasing the
> image colors to 'match' what they 'think' your display uses, which is by
> default 1.0, not 2.2.
> --

I have to admit that I didn't know monitors (all of them?) have a default gamma of 1. This raises a basic question. What is meant by a "gamma of 1.8" for the Mac and a "gamma of 2.2" for the PC? These are values that I've always taken as gospel.

Ken

From: scott

Subject: Re: assumed_gamma makes a lemon out of U and my orange

Date: 12 Nov 2005 16:14:52

Message: <43765b4c$1@news.povray.org>

"Kenneth" <kdw### [at] earthlink net> wrote in message

news:web.437641f51f234627b3cb5aca0@news.povray.org

> Patrick Elliott <sha### [at] hotmail com> wrote:

>

>>> What people are
>> screwing up is that they are setting the gamma to something that
>> has *nothing* to do with their displays actual gamma, so when they
>> load it one something else, it looks wrong. In the case of
>> programs that correctly handle the setting in the file, *they* are
>> assuming the 'real' gamma is different that your monitor and are
>> dropping or increasing the image colors to 'match' what they
>> 'think' your display uses, which is by default 1.0, not 2.2.
>> --
>
> I have to admit that I didn't know monitors (all of them?) have a
> default gamma of 1.

They don't. Most PC monitors (the ones I've measured) have a gamma of almost exactly 2.2. This means that if you tell one to display RGB 128,128,128 and measure the brightness, it will be about 0.5^2.2 ≈ 0.22 of the brightness of RGB 255,255,255.
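As a quick check of that arithmetic, this parse-time loop prints the relative brightness for a few 8-bit grey levels. It is a sketch that assumes an ideal power-law display with gamma 2.2, which real monitors only approximate; the identifiers are made up for the example. It uses `#while` rather than `#for` so that it also parses in POV-Ray 3.6.

```povray
#declare Display_G = 2.2;  // assumed ideal display gamma

// A handful of 8-bit grey levels to test.
#declare Levels = array[5] {0, 64, 128, 192, 255}

#declare I = 0;
#while (I < 5)
  // relative brightness = (code value / 255) ^ gamma
  #declare Brightness = pow(Levels[I]/255, Display_G);
  #debug concat("grey ", str(Levels[I], 3, 0), " -> ",
                str(Brightness, 0, 3), "\n")
  #declare I = I + 1;
#end
```

Grey 128 comes out at roughly 0.22 of full brightness, in line with the figure above, while 0 and 255 stay at 0 and 1.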

"scott" <spa### [at] spam com> wrote:

> They don't. Most PC monitors (the ones I've measured) have a gamma of
> almost exactly 2.2. This means, if you tell it to display RGB 128,128,128
> and measure the brightness, it will be 0.5^(2.2)=0.2 of the brightness of
> RGB 255,255,255.

Except... (there's always those damn exceptions!) it can't be strictly a matter of your monitor, because I have a dual boot PC with Linux & Windows, yet I cannot get the two to agree on display_gamma, even though it's the same hardware. With both set the same, what looks good in Linux will be too dark in Windows.

And 2.2 is out of the question! If I set my display_gamma to 2.2, it doesn't look right on anything else, and every scene I've ever rendered comes out dark and oversaturated.

RG
