
Just some musings, with little head or tail.

For some time now I have worked, off and on, as a volunteer in a local museum. The big job to be done is digitizing the collection, mostly by means of photography. The whole process has to be reviewed every now and then.

In our workflow we convert RAW images to DNG files. Every operation on the image is stored as a kind of 'script' in the file, and no pixel is changed. From the script(s), one or more new images can be rendered: Parametric Image Editing [1]. In a way, POV-Ray is a Parametric Image Editor/Creator: an image is rendered from a set of rules.

IIRC, POV-Ray's internal resolution(?) and/or colour depth differ from what is written to file. So the ray-tracing part is one set of parameters, and the conversion to the actual image is another set of, mostly fixed, parameters.
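To make the two-stage idea concrete, here is a minimal Python sketch (Python rather than SDL, for brevity) of what such a fixed second stage might look like: linear float colour in, 8-bit values out. It assumes a plain power-law gamma of 2.2 and hard clipping. POV-Ray's actual output gamma handling and dithering may differ.

```python
def encode_8bit(linear, gamma=2.2):
    """Map one linear float channel value to an 8-bit integer.

    Values outside 0..1 are clipped, so highlight detail above 1.0 that the
    raytracer computed is lost in the file (but would survive in a float 'raw').
    Assumes a simple power-law gamma, not POV-Ray's exact output pipeline.
    """
    clipped = min(max(linear, 0.0), 1.0)
    return round(clipped ** (1.0 / gamma) * 255)


def encode_image(pixels, gamma=2.2):
    """Apply the fixed conversion to rows of (r, g, b) float tuples."""
    return [[tuple(encode_8bit(c, gamma) for c in px) for px in row]
            for row in pixels]


raw = [[(0.0, 0.18, 1.0), (2.5, 0.5, -0.1)]]   # linear floats, may exceed 0..1
png_ready = encode_image(raw)
```

Both the 1.0 and the 2.5 value end up as 255. A float "raw" output would preserve exactly this information for later processing.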

Imagine that we could use something else for the second part, let's say ImageMagick. POV-Ray's "internal image" would then be comparable to a raw file from a camera.

Now we can use ImageMagick to do the conversion to a screen-ready image. But with its power, a lot more can be done: all the processing it can do can be applied to the RAW part when rendering the final image. You could write a parametric processing script in your POV-Ray file that is applied after rendering, or you could store it in the 'RAW image'. You could write multiple processing scripts for different outputs, and use the post-processing to manipulate many aspects of the image.
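Here is a minimal sketch of the "stored script" idea. It assumes the renderer's raw output is available as linear float pixels. The operation names (exposure, saturation, clip) and the script format are hypothetical. The point is that each script is just data applied to an untouched raw buffer, so several outputs can come from one render.

```python
# Hypothetical operation set; names and parameters are illustrative only.
def exposure(px, stops):
    return tuple(c * 2.0 ** stops for c in px)


def saturation(px, amount):
    grey = sum(px) / 3.0
    return tuple(grey + (c - grey) * amount for c in px)


def clip(px):
    return tuple(min(max(c, 0.0), 1.0) for c in px)


OPERATIONS = {"exposure": exposure, "saturation": saturation, "clip": clip}


def develop(raw, script):
    """Apply a script, a list of (op_name, kwargs), to the raw pixels.

    The raw data is never modified; each call returns a new image.
    """
    out = []
    for row in raw:
        new_row = []
        for px in row:
            for name, kwargs in script:
                px = OPERATIONS[name](px, **kwargs)
            new_row.append(px)
        out.append(new_row)
    return out


raw = [[(0.2, 0.4, 0.6)]]
web_script = [("exposure", {"stops": 1.0}), ("clip", {})]
grey_script = [("saturation", {"amount": 0.0}), ("clip", {})]
web = develop(raw, web_script)     # one output...
grey = develop(raw, grey_script)   # ...and another, from the same raw data
```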

Can it be pushed even further? During rendering, POV-Ray has to know which object a ray intersects. Can this data somehow be saved? Primary intersection, secondary intersection? "RedSphere" is a list of pixels, "BlueSphere" another one. Now we can select pixels by object (intersection) instead of by colour as in the GIMP. These pixels can be fed into the post-processor, so we can apply effects to the red sphere and not to the red box. Can such data be stored in a DNG file? Or can we finally use SQLite ;) (SQLite as a project container format could be nice.) Or even store the whole POV-Ray scene in a DNG as metadata.
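Here is a sketch of selecting pixels by object rather than by colour. It assumes a hypothetical per-pixel object-ID buffer written alongside the colour image; POV-Ray does not export such a buffer today. The red box and the red sphere have identical colours here, yet only the sphere's pixels are changed.

```python
# Hypothetical data: colour per pixel plus the name of the object hit by the
# primary ray (None = background). POV-Ray does not currently export the IDs.
colour = [
    [(0.9, 0.1, 0.1), (0.9, 0.1, 0.1), (0.0, 0.0, 0.0)],
    [(0.9, 0.1, 0.1), (0.1, 0.1, 0.9), (0.9, 0.1, 0.1)],
]
object_id = [
    ["RedSphere", "RedSphere", None],
    ["RedBox", "BlueSphere", "RedSphere"],
]


def select(ids, name):
    """(row, col) of every pixel whose primary hit was object `name`."""
    return {(r, c) for r, row in enumerate(ids)
            for c, obj in enumerate(row) if obj == name}


def apply_to_selection(img, selection, effect):
    """Return a new image with `effect` applied to the selected pixels only."""
    return [[effect(px) if (r, c) in selection else px
             for c, px in enumerate(row)]
            for r, row in enumerate(img)]


def darken(px):
    return tuple(ch * 0.5 for ch in px)


mask = select(object_id, "RedSphere")
result = apply_to_selection(colour, mask, darken)   # red box stays untouched
```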

Another thought: could it be possible to attach such scripts directly to an object and modify the object/texture with them while rendering?

[1] https://dpbestflow.org/image-editing/parametric-image-editing

"ingo" <nomail@nomail> wrote:

> During rendering POV-Ray has to know with what

> object a ray intersects. Can these data somehow be saved? Primary intersection,

> secondary intersection?

Damned good idea(s), and something I've thought about and suggested in the past, though not to the extent of saving the data to a file!

So consider this:

We take my idea of having an object registry, so we can store all of the objects in an array and loop through them.

Next, we loop through all the y and x of the camera view frustum, and use trace() to cycle through every object in the registry for every pixel.

Using that <x, y, z> data, we have a map of the image with a sort of z-buffer.

Now we write the image data, perhaps using a format like:

<x, y, object1_z, ..., objectN_z>

What do you think?
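Here is a rough Python sketch of that loop. It assumes a hypothetical registry of spheres and a simple pinhole camera looking down +z. The sphere intersection stands in for one trace() call per object per pixel. Each record follows the proposed <x, y, object1_z, ..., objectN_z> layout, with None where an object is missed.

```python
import math

# Hypothetical object registry: name -> (centre, radius). Spheres keep the
# maths short; in SDL each entry would be tested with trace() instead.
REGISTRY = {
    "A": ((0.0, 0.0, 5.0), 1.0),
    "B": ((0.0, 0.0, 8.0), 2.0),
}


def hit_sphere(origin, direction, centre, radius):
    """Distance to the nearest hit in front of origin, or None.
    `direction` must be normalised."""
    oc = [o - c for o, c in zip(origin, centre)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-9:
            return t
    return None


def depth_records(width, height, fov_scale=0.5):
    """Yield (x, y, z_obj1, ..., z_objN) per pixel; None where missed."""
    origin = (0.0, 0.0, 0.0)
    for y in range(height):
        for x in range(width):
            # Pixel centre mapped onto an image plane at z = 1 (pinhole camera).
            u = ((x + 0.5) / width - 0.5) * 2 * fov_scale
            v = (0.5 - (y + 0.5) / height) * 2 * fov_scale
            n = math.sqrt(u * u + v * v + 1.0)
            d = (u / n, v / n, 1.0 / n)
            zs = [hit_sphere(origin, d, c, r) for c, r in REGISTRY.values()]
            yield (x, y, *zs)


records = list(depth_records(3, 3))
```

Testing every object for every pixel is the brute-force version. Per-object bounding boxes would cut that down.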

Also, I've wanted a custom version of trace() that would return ALL of the intersections with an object, not just the first one, so we could do something like custom inside/outside tests using crossing numbers.

Any idea how to approach that via SDL that doesn't involve testing dozens of thin slices of the object perpendicular to the camera ray?
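For reference, the crossing-number test itself is short once all intersections are available. This sketch assumes a solid bounded by closed, non-intersecting surfaces, here a hollow spherical shell built from two concentric spheres. Overlapping CSG components would break the simple parity rule.

```python
import math

# Assumption: closed, non-intersecting boundary surfaces; here a hollow shell
# made of an outer sphere (r=2) and an inner cavity sphere (r=1).
SURFACES = [((0.0, 0.0, 0.0), 2.0), ((0.0, 0.0, 0.0), 1.0)]


def all_hits_sphere(origin, direction, centre, radius):
    """Every intersection distance t > 0 of the ray with one sphere."""
    oc = [o - c for o, c in zip(origin, centre)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc <= 0:          # miss, or a grazing tangent (not a real crossing)
        return []
    root = math.sqrt(disc)
    return [t for t in (-b - root, -b + root) if t > 1e-9]


def crossing_number(point, direction=(1.0, 0.0, 0.0)):
    """Count surface crossings from `point` towards infinity."""
    return sum(len(all_hits_sphere(point, direction, c, r))
               for c, r in SURFACES)


def is_inside(point):
    """An odd number of crossings means the point is inside the solid."""
    return crossing_number(point) % 2 == 1
```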

- BE

"Bald Eagle" <cre### [at] netscape net> wrote:

> So consider this:

>

> We take my idea of having an object registry, so we can store all of the objects

> in an array, and loop through them.

> Next, we loop through all the y and x of the camera view frustum, and use trace

> () to cycle through every object in the registry for every pixel.

> Using that <x, y, z> data, we have a map of the image with a sort of z-buffer.

> Now we write image data perhaps using a format like:

> <x, y, object1_z, . . . , objectN_z>

>

> What do you think?

Go, go, go ;) Maybe also get all the bounding boxes (min_extent/max_extent) of the objects, so one does not have to test every ray against every object. But before you know it, you are writing a ray-tracer within a ray-tracer, within a ray-tracer ....
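The bounding-box shortcut is usually done with the "slab" test. Here is a minimal sketch. It assumes each registry entry carries the two corners that min_extent() and max_extent() would return. The registry names are hypothetical.

```python
def ray_hits_box(origin, direction, box_min, box_max):
    """Slab test: does the ray (t >= 0) pass through the axis-aligned box?"""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            if o < lo or o > hi:        # parallel to this slab and outside it
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        if t1 > t2:
            t1, t2 = t2, t1
        t_near, t_far = max(t_near, t1), min(t_far, t2)
        if t_near > t_far:
            return False
    return True


# Hypothetical registry: name -> (min_extent, max_extent) corners.
BOXES = {
    "Left": ((-3.0, -1.0, 4.0), (-1.0, 1.0, 6.0)),
    "Right": ((1.0, -1.0, 4.0), (3.0, 1.0, 6.0)),
}


def candidates(origin, direction):
    """Only objects whose box the ray enters need the expensive trace()."""
    return [name for name, (lo, hi) in BOXES.items()
            if ray_hits_box(origin, direction, lo, hi)]
```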

POV-Ray has so much usable internal data that is not available to the user. I've manipulated the radiosity process by rendering an image with the radiosity data from another image. What if we knew the data file format and could manipulate it? Or photon data? Normals, intersection points, mirror things: all stuff that's available in OpenGL for shaders.

> Also, I've wanted a custom version of trace () that would return ALL of the

> intersections with an object, not just the first one, so we could do something

> like custom inside/outside tests using crossing numbers.

> Any idea on how to approach that via SDL that doesn't involve testing dozens of

> thin slices of the object perpendicular to the camera ray?

That is difficult, as shapes can have all kinds of holes and protrusions. What I tried in the past was to create a "slit camera" that works like a flatbed scanner, but with the object rotating above the sensor (https://en.wikipedia.org/wiki/Slit-scan_photography). You could rotate such a camera around the object and inside the object. Or make a point-source camera and scan in a sphere shape, but there will always be "self-shadowing". Some of these should be doable with the advanced camera features.

Another way could be to "shrink-wrap" a mesh around an object and use its vertices, but how would you do that in SDL?

ingo

"ingo" <nomail@nomail> wrote:

> Go, go, go ;) Maybe also get al the bounding boxes (min- max_extent) of the

> object so one does not have to test every ray against every object. But, before

> you know you are writing a ray-tracer within a ray-tracer, within a ray-tracer

> ....

:D I've already started writing the raytracer within a raytracer to figure out

the inverse transform sampling that clipka wanted for lighting.
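The thread does not spell out what clipka asked for. Purely as a generic illustration, inverse transform sampling picks an item with probability proportional to its weight by building a cumulative distribution and inverting it. The "light intensities" below are made-up weights.

```python
import bisect
import itertools
import random


def build_cdf(weights):
    """Running totals, normalised so the last entry is 1.0."""
    total = float(sum(weights))
    return [c / total for c in itertools.accumulate(weights)]


def sample_index(cdf, u):
    """Inverse transform: smallest index i with cdf[i] > u, for u in [0, 1)."""
    return bisect.bisect_right(cdf, u)


# Hypothetical light intensities; brighter lights should be picked more often.
intensities = [1.0, 3.0, 6.0]
cdf = build_cdf(intensities)
rng = random.Random(42)
counts = [0, 0, 0]
for _ in range(100_000):
    counts[sample_index(cdf, rng.random())] += 1
```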

> POV-Ray has so many usable internal data that are not available to the user.

> I've manipulated the radiosity proces by rendering an image with the radiosity

> data from an other image. What if we knew the data file format and can

> manipulate it? Or photon data? Normals, intersection points, mirror things, all

> stuff that's available in OpenGL for shaders.

That's a good point. We have the source, and Bill Pokorny, Jerome Grimbert, and others have experience following the internal workings. We need to find some more programmers to help the rest of us get up to speed on how it all works, and how to edit the code without breaking too much / everything.

> > Also, I've wanted a custom version of trace () that would return ALL of the

> That is difficult as shapes can have all kind of holes and protrusions.

Correct, which is why I think your approach might not work: it seems to be (unless I'm missing something) just a variation on my many failed past attempts.

What I'm thinking is that you:

1. Shoot a ray with trace() from the camera to the world coordinates that are back-calculated from the screen pixel position.
2. If you get a hit, increment the position farther along the ray by epsilon and initiate a subsequent trace() call.
3. Repeat until you get <0, 0, 0> for the normal vector.

That seems like a guaranteed method for getting ALL of the surfaces, down to

pixel-level granularity.

And of course, you could go finer if you wanted / needed to.
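Here is a minimal Python sketch of the three steps. It assumes a stand-in trace() over two analytic spheres that returns a distance, or None on a miss; the real trace() would leave the normal at <0, 0, 0> in that case. EPSILON is an assumed step size. Too large a value can skip very thin features.

```python
import math

EPSILON = 1e-6

# Stand-in scene: two separate spheres on the x axis.
SCENE = [((0.0, 0.0, 0.0), 1.0), ((4.0, 0.0, 0.0), 1.0)]


def trace(start, direction):
    """Stand-in for POV-Ray's trace(): distance to the nearest hit at t > 0,
    or None for a miss. `direction` must be normalised."""
    best = None
    for centre, radius in SCENE:
        oc = [s - c for s, c in zip(start, centre)]
        b = sum(d * v for d, v in zip(direction, oc))
        c = sum(v * v for v in oc) - radius * radius
        disc = b * b - c
        if disc < 0:
            continue
        root = math.sqrt(disc)
        for t in (-b - root, -b + root):
            if t > 0 and (best is None or t < best):
                best = t
    return best


def all_hits(origin, direction):
    """Trace, step EPSILON past the hit, trace again; stop on a miss."""
    hits, travelled = [], 0.0
    while True:
        start = [o + d * travelled for o, d in zip(origin, direction)]
        t = trace(start, direction)
        if t is None:
            return hits                  # "normal is <0, 0, 0>": no more surfaces
        travelled += t
        hits.append(travelled)           # distance from the original origin
        travelled += EPSILON             # nudge past the surface before re-tracing
```

For a ray from <-5, 0, 0> along +x this returns the four surface distances, entering and leaving each sphere.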

The bounding box array is a good idea for partitioning / limiting the number of trace() tests for any given ray.

- BE

"Bald Eagle" <cre### [at] netscape net> wrote:

> What I'm thinking is that you:

> 1. shoot a ray with trace () from the camera to the World Coordinates that are

> back calculated from the screen pixel position.

> 2. in the event that you get a Hit, you increment the position farther along the

> ray by Epsilon, and initiate a subsequent trace () call.

> 3. repeat until you get <0, 0, 0> for the normal vector.

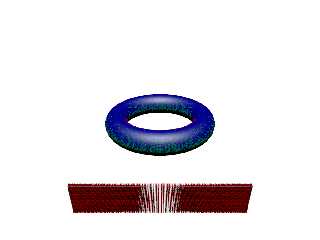

Would it detect the hole of the doughnut?

Marching cubes should find all cavities in an object.

That brings me to another line of thought: a mesh engine. With nearly the same SDL, generate meshes of all POV-Ray objects. The difference would be an added meshify keyword with an int for the base resolution of the mesh. A mesh resolution multiplier could be set in global_settings, so you can make the mesh resolution fit the image resolution.

It would make the SDL the heart of the system: from there one can ray-trace solids, or mesh them. One could push the mesh to OpenGL, or use a scanline renderer.
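Marching cubes is too long to show here, but its 2D sibling, marching squares, shows the idea, including a resolution parameter like the proposed meshify int. This sketch assumes the object is described by an implicit function (negative inside), which POV-Ray primitives do not generally expose. The annulus is a 2D stand-in for a doughnut cross-section, and both its outer edge and its hole are recovered.

```python
import math


def marching_squares(f, lo, hi, n):
    """Approximate the curve f(x, y) = 0 on [lo, hi]^2 with line segments,
    using an n x n grid of cells (n plays the role of a 'meshify' resolution).
    A point counts as inside when f < 0."""
    h = (hi - lo) / n
    pts = [[(lo + i * h, lo + j * h) for j in range(n + 1)] for i in range(n + 1)]
    val = [[f(x, y) for (x, y) in row] for row in pts]

    def crossing(a, b, fa, fb):
        # Linearly interpolate where f changes sign along one cell edge.
        t = fa / (fa - fb)
        return (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))

    segments = []
    for i in range(n):
        for j in range(n):
            # Cell corners in counter-clockwise order.
            corners = [(i, j), (i + 1, j), (i + 1, j + 1), (i, j + 1)]
            found = []
            for k in range(4):
                (ia, ja), (ib, jb) = corners[k], corners[(k + 1) % 4]
                fa, fb = val[ia][ja], val[ib][jb]
                if (fa < 0) != (fb < 0):
                    found.append(crossing(pts[ia][ja], pts[ib][jb], fa, fb))
            # 0, 2 or 4 crossings; in the 4-crossing saddle case neighbouring
            # edges are paired, which is one of the two valid choices.
            for s in range(0, len(found), 2):
                segments.append((found[s], found[s + 1]))
    return segments


# Annulus (2D doughnut cross-section): inside where 0.4 < radius < 0.8.
ring = marching_squares(lambda x, y: (math.hypot(x, y) - 0.6) ** 2 - 0.2 ** 2,
                        -1.0, 1.0, 20)
```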

ingo

"Bald Eagle" <cre### [at] netscape net> wrote:

>

> What I'm thinking is that you:

> 1. shoot a ray with trace () from the camera to the World Coordinates that are

> back calculated from the screen pixel position.

> 2. in the event that you get a Hit, you increment the position farther along the

> ray by Epsilon, and initiate a subsequent trace () call.

> 3. repeat until you get <0, 0, 0> for the normal vector.

>

Unfinished thought:

Get the min_extent and max_extent of the object.

1. trace() a corner; let's assume it's a hit.
2. At the hit point, create a virtual box with the point as its center.
3. Use the inside() function to test the four corners of the box. [*] Assume one point is inside the object.
4. Trace from the other corners to the one inside the object to find points on the surface.
5. Move the box and repeat. Scan the whole bounding box using cubes.

[*] OK, it's simplistic, and it may require some subdivision of the box before using trace(), or creating 4 boxes with the inside point as their common corner straight away.

It may be more efficient to first scan one face of the bounding box with trace() and start with the cubes from there.
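Here is a sketch of one cell of this scan, written in Python with a unit sphere as a stand-in for inside(). It tests all eight corners of the cube. As an explicit substitution, it uses bisection on the inside test instead of trace() to find the surface between each outside corner and an inside one.

```python
import itertools
import math


def inside(p):
    """Stand-in for POV-Ray's inside(): a unit sphere at the origin."""
    return math.sqrt(sum(c * c for c in p)) < 1.0


def surface_between(a, b, steps=40):
    """Bisection between outside point a and inside point b (substitute for
    tracing from a towards b). Returns a point close to the surface."""
    for _ in range(steps):
        mid = tuple((x + y) / 2 for x, y in zip(a, b))
        if inside(mid):
            b = mid
        else:
            a = mid
    return tuple((x + y) / 2 for x, y in zip(a, b))


def probe_cell(centre, size):
    """Test all 8 corners of a cube around `centre`; for every outside corner,
    locate the surface between it and the first inside corner."""
    half = size / 2
    corners = [tuple(c + s * half for c, s in zip(centre, signs))
               for signs in itertools.product((-1, 1), repeat=3)]
    ins = [p for p in corners if inside(p)]
    outs = [p for p in corners if not inside(p)]
    if not ins or not outs:
        return []      # cell fully inside or outside: nothing found here
    return [surface_between(o, ins[0]) for o in outs]


points = probe_cell((1.0, 0.0, 0.0), 0.2)
```

A cell lying entirely inside or outside reports nothing. That is where the box-size trade-off (and missed holes) comes from.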

ingo

"ingo" <nomail@nomail> wrote:

> Would it detect the hole of the doughnut?

"No," because we're only detecting surfaces, but "yes," because we're doing winding numbers, so you'd go from an odd number of crossings to an even number when you hit the hole.

Picture spearing the doughnut with a bamboo skewer, all the way through.
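The skewer picture can be sketched this way: sample an implicit torus along the ray, refine every inside/outside flip by bisection, then pair the crossings by parity into inside intervals. This is a fixed-step, pseudo-ray-marching variant, not trace(). It assumes a torus with major radius 2 and minor radius 0.5 in the x-z plane. The step count is a guess, and features thinner than one step can be missed.

```python
import math

R, r = 2.0, 0.5   # assumed major/minor radius; torus lies in the x-z plane


def torus(p):
    """Implicit torus: negative inside, positive outside."""
    x, y, z = p
    return (math.hypot(x, z) - R) ** 2 + y * y - r * r


def crossings(f, origin, direction, t_max=10.0, n=1000, iters=50):
    """Step along the ray in n samples; refine each inside/outside flip."""
    def inside_at(t):
        return f(tuple(o + t * d for o, d in zip(origin, direction))) < 0

    hits = []
    prev_t, prev_in = 0.0, inside_at(0.0)
    for i in range(1, n + 1):
        t = t_max * i / n
        now_in = inside_at(t)
        if now_in != prev_in:
            lo, hi = prev_t, t            # lo has state prev_in, hi the other
            for _ in range(iters):
                mid = (lo + hi) / 2
                if inside_at(mid) == prev_in:
                    lo = mid
                else:
                    hi = mid
            hits.append((lo + hi) / 2)
        prev_t, prev_in = t, now_in
    return hits


def inside_intervals(hits, starts_inside=False):
    """Pair sorted crossings into (enter, exit) intervals by parity."""
    h = ([0.0] if starts_inside else []) + hits
    return list(zip(h[0::2], h[1::2]))


# The skewer: along the x axis, straight through both sides of the ring.
skewer = crossings(torus, (-5.0, 0.0, 0.0), (1.0, 0.0, 0.0))
intervals = inside_intervals(skewer)
```

Along this skewer the crossings fall at 2.5, 3.5, 6.5 and 7.5. That gives two inside intervals, and the gap between them (3.5 to 6.5) is the hole.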

> Marching cubes should find all cavities in an object.

>

> That brings me to another line of thought. A mesh engine. With the nearly same

> SDL generate mesh' of al POV-Ray objects. Difference would be an added meshify

> keyword with an int for the base resolution of the mesh. A mesh resolution

> multiplier could be set in global_settings so you can make the mesh resolution

> fitting the image resolution.

> Get min-, max_extent of object.

> 1. trace() a corner, lets assume it's a hit.

> 2. At the hit point create a virtual box with the point as center.

Of what size?

> 3. Use the inside() function to test the four corners of the box.

A box has 8 corners. You now have _7_ left.

> [*]

> Assume one point is inside the object

> 4. Trace from the other corners to the one inside the object to find points on

> thesurface.

You're not going to find (all the) holes with that method. Trace suffers from an

object's (potential) self-occlusion.

> 5.Move the box and repeat. Scan the whole bounding box using cubes.

Marching cubes has a lot to offer, and is definitely something that should be

implemented in parallel to the sort of pseudo-ray-marching method that I

suggested.

Using our primitives vs SDFs necessitates different methods, or at least a conversion. I've even looked into generating an SDF from an arbitrary mesh, and apparently that too is possible with certain software.
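For comparison, with an SDF you can march along a ray by the distance value itself (sphere tracing), because the SDF guarantees that no surface is closer than that. Here is a minimal sketch using the standard torus distance function. It only finds the first hit, and POV-Ray primitives do not provide SDFs, so this is purely illustrative.

```python
import math


def sdf_torus(p, R=2.0, r=0.5):
    """Signed distance to a torus in the x-z plane (major R, minor r)."""
    x, y, z = p
    q = math.hypot(x, z) - R
    return math.hypot(q, y) - r


def sphere_trace(sdf, origin, direction, t_max=100.0, eps=1e-7, max_steps=500):
    """March by the distance bound; return the first hit distance, or None."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t
        t += dist              # safe step: nothing is closer than `dist`
        if t > t_max:
            break
    return None
```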

"Bald Eagle" <cre### [at] netscape net> wrote:

> A box has 8 corners. You now have _7_ left.

... incomplete-thought disclaimer ;) I was doing a quick 2D visualization.

>

> You're not going to find (all the) holes with that method. Trace suffers from an

> object's (potential) self-occlusion.

Yes, that's related to your other question, "what's the box size?" Too big and you miss holes; too small and you waste CPU ticks.

It would be helpful if we had Roentgen (X-ray) images. Can we create them? Maybe: fill the object with an absorbing medium and X-ray it from three sides. But how would we analyze the result? Again, it would be nice if the ray density sampling data could be written to a file (per object).
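One way to "analyze" such an X-ray image assumes a uniform absorbing medium. By the Beer-Lambert law the transmitted fraction is exp(-sigma * L), where L is the path length inside the object, so -ln(I) / sigma gives the thickness back. The sketch estimates L by sampling an inside() stand-in (a hollow sphere) along the ray. The value sigma = 1 is an assumption.

```python
import math


def inside(p):
    """Stand-in for inside(): hollow sphere, outer radius 1, cavity radius 0.5."""
    d = math.sqrt(sum(c * c for c in p))
    return 0.5 < d < 1.0


def path_length(origin, direction, t_max=4.0, n=4000):
    """Midpoint-rule estimate of the distance the ray spends inside."""
    dt = t_max / n
    return sum(dt for i in range(n)
               if inside(tuple(o + (i + 0.5) * dt * d
                               for o, d in zip(origin, direction))))


def xray(origin, direction, sigma=1.0):
    """Transmitted fraction through a uniform absorber (Beer-Lambert)."""
    return math.exp(-sigma * path_length(origin, direction))


# The central ray passes through 2 x 0.5 units of material (the cavity is
# empty); the ray at height 0.75 misses the cavity and crosses more material.
i_centre = xray((-2.0, 0.0, 0.0), (1.0, 0.0, 0.0))
i_offset = xray((-2.0, 0.75, 0.0), (1.0, 0.0, 0.0))
thickness = -math.log(i_centre) / 1.0    # invert Beer-Lambert (sigma = 1)
```

The cavity shows up as a brighter centre. One "image" per axis would give the three-sided view.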

But I think your method (trace, move by epsilon, trace) should work. You'd get all the object/empty-space transitions. Depending on the goal, instead of tracing from the camera, you could scan from one face of the bounding box to the opposite one. Store all intersections in arrays of arrays. For a test render, render each point as a small sphere.
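Here is a sketch of that scan. It assumes a unit sphere with a bounding box from <-1, -1, -1> to <1, 1, 1>, and an analytic stand-in for the repeated trace() calls. Hits are kept as an array of rows of arrays of hit lists, then written out as small SDL spheres for a test render. The radius and pigment are arbitrary.

```python
import math

BOX_MIN, BOX_MAX = (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0)   # min_/max_extent


def hits_along_z(x, y):
    """Stand-in for repeated trace() calls along +z: unit-sphere crossings."""
    rr = 1.0 - x * x - y * y
    if rr <= 0:
        return []
    s = math.sqrt(rr)
    return [-s, s]


def scan_face(n):
    """Parallel rays from the z = min face to the opposite face; returns
    rows (y) of columns (x) of hit-point lists."""
    step_x = (BOX_MAX[0] - BOX_MIN[0]) / n
    step_y = (BOX_MAX[1] - BOX_MIN[1]) / n
    grid = []
    for j in range(n):
        y = BOX_MIN[1] + (j + 0.5) * step_y
        row = []
        for i in range(n):
            x = BOX_MIN[0] + (i + 0.5) * step_x
            row.append([(x, y, z) for z in hits_along_z(x, y)])
        grid.append(row)
    return grid


def to_sdl(grid, radius=0.02):
    """One small SDL sphere per intersection, for a quick test render."""
    return "\n".join(
        f"sphere {{ <{x:.4f}, {y:.4f}, {z:.4f}>, {radius} pigment {{ rgb <1, 0, 0> }} }}"
        for row in grid for cell in row for (x, y, z) in cell)


grid = scan_face(10)
sdl = to_sdl(grid)
```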

ingo

"ingo" <nomail@nomail> wrote:

> But, I think your method, trace, move by epsilon, trace, should work. You'd get

> all the object/ empty space transitions. Depending on the goal, instead of

> tracing from the camera, you could scan from one surface of the bounding box to

> the opposite. Store all intersections in arrays of arrays. For test render each

> point as a small sphere.

>

> ingo


Attachments: 'multipletrace.png' (167 KB)

I also found this (!), but haven't had the opportunity to look it over yet.

http://news.povray.org/povray.binaries.scene-files/thread/%3C38CB12D0.732F7F15@enter.net%3E/?ttop=321382&toff=950
