
Greeting,

Continuing my experiments with Python (which continues to piss me off), I decided to write a small program to convert image heightfields into triangle meshes. My main intention was to improve quality for heightfields with both a small image size and a low bit depth - I supposed that converting them into meshes would make POVRay interpolate the nonexisting data, thus improving the visual appearance.

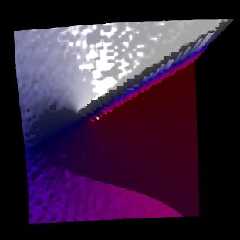

Attached is my test image 1.test grey 001.png, used as a heightfield (with "smooth" on) for rendering 2.heightfield.jpg. Since the source is only 64 * 64 pixels, the result looks... well, correspondingly.

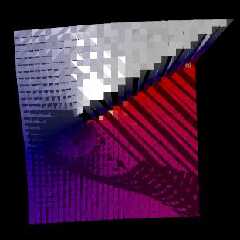

Next, I created my converter (attached as img2mesh.01.004.py.bz2) and

used it to convert the same source into triangle mesh. Rendering result

is attached as 3.img2mesh.01.004.jpg and, as you can see, it's clearer

but full of holes. After that I finally took a look at POVRay docs, that

say that POVRay internally uses 4 pixels for 2 triangles, just like my

program (never read docs before doing something yourself - it's

discouraging!), except for I didn't do force connection of square

corners, that lead to a holes. Instead of fixing it (I find fixing

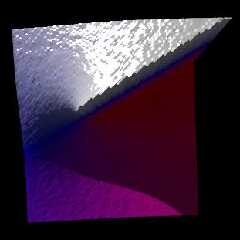

discouraging too), I simply invented different approach - using 4 pixels

for 4 triangles (don't tell me if this is described somewhere already),

that lead to a completely different program, attached as

img2mesh.02.001.py.bz2), which provides output like attached

4.img2mesh.02.001.jpg.

The latter looks to me significantly better than the POVRay heightfield rendering (not to mention my first attempt), although I plan some additional explorations (artifacts on flat surfaces are still unexplained, and I'm even starting to suspect POVRay roundoff errors).

Meanwhile, I hope someone will find this tiny utility useful, and perhaps even come up with new ideas.
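One possible reading of the two triangulations mentioned above, as a minimal Python sketch. The post does not spell out how img2mesh.02.001 builds its 4 triangles; this sketch assumes an extra vertex in the middle of each square of 4 pixels, at the mean of their heights, with four triangles fanned around it. The function names are hypothetical, and the heightfield is a plain list of rows rather than an image read with Pillow.

```python
def cell_triangles(hf, x, y, mode="four"):
    """Triangles for the grid square with corner pixels (x, y) .. (x+1, y+1).

    hf is a heightfield given as a list of rows (hf[row][col]); vertices are
    (x, height, y) tuples, so the pixel row becomes POV-Ray's z axis.
    """
    a = (x, hf[y][x], y)
    b = (x + 1, hf[y][x + 1], y)
    c = (x + 1, hf[y + 1][x + 1], y + 1)
    d = (x, hf[y + 1][x], y + 1)
    if mode == "two":
        # split the square along one diagonal: 2 triangles per 4 pixels
        return [(a, b, c), (a, c, d)]
    # assumed "4 triangles per 4 pixels": an extra centre vertex at the
    # mean of the four corner heights, with 4 triangles fanned around it
    e = (x + 0.5, (a[1] + b[1] + c[1] + d[1]) / 4, y + 0.5)
    return [(a, b, e), (b, c, e), (c, d, e), (d, a, e)]


def heightfield_to_triangles(hf, mode="four"):
    """Triangulate every square of a rows x cols heightfield."""
    triangles = []
    for y in range(len(hf) - 1):
        for x in range(len(hf[0]) - 1):
            triangles.extend(cell_triangles(hf, x, y, mode))
    return triangles


hf = [[0, 1, 0],
      [1, 2, 1],
      [0, 1, 0]]
print(len(heightfield_to_triangles(hf, "two")))   # 4 squares x 2 = 8
print(len(heightfield_to_triangles(hf, "four")))  # 4 squares x 4 = 16
print(cell_triangles(hf, 0, 0)[0][2])             # centre vertex (0.5, 1.0, 0.5)
```

Because every vertex is computed from the same pixel grid, neighbouring squares produce identical corner coordinates, which is what the 2-triangle version needs in order to avoid holes.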

Prerequisites:

1) Python (I used 3.12, but previous versions of Python 3 are supposed to be fine). It seems to be preinstalled on many computers nowadays (that's how I got it, for example). My program is equipped with a minimal GUI using tkinter, which seems to be redistributed with all standard Python distributions, so it doesn't require a separate download.

2) Pillow for reading images. As a simple image reading library, Pillow is distributed everywhere; at worst, it takes a few seconds to download and no time at all to install and configure.

So, have a day.

Ilya


Attachments:

- 1.test grey 001.png (2 KB)
- 2.heightfield.jpg (9 KB)
- img2mesh.01.004.py.bz2.zip (3 KB)
- 3.img2mesh.01.004.jpg (14 KB)
- img2mesh.02.001.py.bz2.zip (2 KB)
- 4.img2mesh.02.001.jpg (10 KB)


hi,

Ilya Razmanov <ily### [at] gmail com> wrote:

> Greeting,
>
> continuing experiments with Python (which continues to piss me off), I
> decided to write small program to convert image heightfields into
> triangle meshes. ...
> The latter looks to me significantly better than POVRay heightfield
> rendering ...

for data "extrapolated" from a 64x64 image, that last image looks really good, I think. wondering whether the calculation could be done in SDL?


regards, jr.


On 30.11.2023 16:59, jr wrote:

> for data "extrapolated" from a 64x64 image, that last image looks real good I
> think. wondering if the calculation could not be done in SDL ?

For this to be done within POVRay only, I need:

1) POVRay opening an image as some sort of array, with easy access to any pixel data I want

2) Not only math but some programming (I need loops first of all)

As for 1), surely POVRay has something to read images - after all, it uses images as heightfields, which requires reading them and representing them internally as some data structure. But I see no way for *me* to access this data directly. Well, after all, POVRay is neither a bitmap editor nor a programming environment (or, as our CC said, "neither both"). So I use an external tool, taking advantage of the fact that the POV language is simple text, easy to understand and write.

I used Python since I got my Windows notebook with Python 3.8 preinstalled, and I was curious enough to take a look at what it is and what it is good for. As far as I know, it also comes preinstalled with Linux and, in general, seems to be more widely available than POVRay itself, which makes my tiny programs cross-platform. And, since POV files are so easy to write, why not use this advantage of POVRay? ;-)
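Since POV files are plain text, writing one from Python takes only a few lines. A minimal sketch that turns a list of triangles (each a triple of `(x, y, z)` tuples) into a POV-Ray `mesh` declaration; the function names and the `HeightMesh` identifier are hypothetical, not taken from img2mesh.

```python
def pov_vector(v):
    """Format a 3-tuple as a POV-Ray vector literal such as <1, 0.5, 2>."""
    # ".10g" keeps plenty of significant digits but drops trailing zeros
    return "<" + ", ".join(format(float(c), ".10g") for c in v) + ">"


def triangles_to_pov_mesh(triangles, name="HeightMesh"):
    """Return POV-Ray SDL text declaring a mesh built from the triangles."""
    lines = [f"#declare {name} = mesh {{"]
    for tri in triangles:
        corners = ", ".join(pov_vector(v) for v in tri)
        lines.append(f"  triangle {{ {corners} }}")
    lines.append("}")
    return "\n".join(lines)


tris = [((0, 0, 0), (1, 0.5, 0), (1, 1, 1))]
print(triangles_to_pov_mesh(tris))
```

The printed text can be saved to an include file and placed in a scene with `object { HeightMesh }`.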

Ilya


hi,

Ilya Razmanov <ily### [at] gmail com> wrote:

> > for data "extrapolated" from a 64x64 image, that last image looks real good I
> > think. wondering if the calculation could not be done in SDL ?
>
> For this to be done within POVRay only, I need:
> 1) POVRay opening image as some sort of array with easy access to any
> pixel data I want
> 2) Not only math but some programming (I need cycles first of all)

aiui, POV-Ray (as of 3.7) has the "tools", and your first script (the spheres image) would, I think, be fairly straightforward to code up in SDL. hence my question about the details of the height_field processing.

> ...
> As long as I know, it also comes preinstalled with Linux, and, in
> general, looks to be more widely available than POVRay itself, that
> makes my tiny programs cross-platform. And, since POV files are so easy
> to write, why not to use this advantage of POVRay? ;-)

:-) I'm sure many/most (can) use Python, but all of us have POV-Ray to use.


regards, jr.


Ilya Razmanov <ily### [at] gmail com> wrote:

> On 30.11.2023 16:59, jr wrote:
>
> > for data "extrapolated" from a 64x64 image, that last image looks real good I
> > think. wondering if the calculation could not be done in SDL ?
>
> For this to be done within POVRay only, I need:
> 1) POVRay opening image as some sort of array with easy access to any
> pixel data I want
> 2) Not only math but some programming (I need cycles first of all)

You can use an image as a pattern, and you can use a pattern in a function. Then you can sample the "image function" to fill arrays. But the image will already be interpolated.
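A small Python sketch of what this caveat means, assuming bilinear interpolation (the method POV-Ray documents for `interpolate 2` on image maps); `sample_bilinear` is a hypothetical helper, not POV-Ray code. Sampling exactly at a pixel centre returns that pixel's value, while sampling anywhere in between returns a blend of the neighbouring pixels, so arrays filled from an interpolated image function only reproduce the source pixels if they are sampled at the centres.

```python
import math


def sample_bilinear(img, x, y):
    """Sample a 2-D list of grey values (img[row][col]) at fractional x, y.

    Pixel (col, row) is treated as centred at (col + 0.5, row + 0.5);
    coordinates outside the image are clamped to the edge pixels.
    """
    h, w = len(img), len(img[0])
    # shift so that pixel centres land on integer coordinates, then clamp
    fx = min(max(x - 0.5, 0.0), w - 1.0)
    fy = min(max(y - 0.5, 0.0), h - 1.0)
    x0, y0 = int(math.floor(fx)), int(math.floor(fy))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    tx, ty = fx - x0, fy - y0
    # blend horizontally along both rows, then vertically between them
    top = img[y0][x0] * (1 - tx) + img[y0][x1] * tx
    bottom = img[y1][x0] * (1 - tx) + img[y1][x1] * tx
    return top * (1 - ty) + bottom * ty


img = [[0.0, 1.0],
       [1.0, 0.0]]
print(sample_bilinear(img, 0.5, 0.5))  # at a pixel centre: 0.0
print(sample_bilinear(img, 1.0, 1.0))  # between all four pixels: 0.5
```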


ingo


"jr" <cre### [at] gmail com> wrote:

> Ilya Razmanov <ily### [at] gmail com> wrote:
> > ...
> > For this to be done within POVRay only, I need:
> aiui, POV-Ray (as of 3.7) has the "tools", ...

proof of concept (640x480 image source). ~20 minutes ;-)

regards, jr.

-----<snip>-----

```pov
/* cf Iyla's "spheres" script */
#version 3.8;

#include "functions.inc"  // provides the eval_pigment() macro

global_settings {assumed_gamma 1}

// the 640x480 image, scaled so that one unit equals one pixel
#declare im_ = pigment {
  image_map {
    png "s_o_l.png"
    gamma 1
  }
  scale <640,480,1>
};

#declare ext_ = max_extent(im_);

// sample every pixel once and cache the colours in an array
#declare arr_ = array [640][480];
#for (r_,0,479)
  #for (c_,0,639)
    #local arr_[c_][r_] = eval_pigment(im_,<c_,r_,.5>);
  #end
#end

// one emissive sphere per pixel, coloured from the cached array
#for (r_,0,479)
  #for (c_,0,639)
    sphere {
      <(c_+.5),(r_+.5),0>, .495
      texture {
        pigment {arr_[c_][r_]}
        finish {emission 1}
      }
    }
  #end
#end

camera {
  location <320,240,-275>
  right x * (4/3)
  up y
  angle 90
  look_at <320,240,0>
}
```

Attachments:

- 231201_ir.png (960 KB)


On 1-12-2023 at 08:03, jr wrote:

> "jr" <cre### [at] gmail com> wrote:
>> Ilya Razmanov <ily### [at] gmail com> wrote:
>> > ...
>> > For this to be done within POVRay only, I need:
>> aiui, POV-Ray (as of 3.7) has the "tools", ...
>
> proof of concept (640x480 image source). ~20 minutes ;-)
>

LOL!

--


Thomas


On 12/1/23 02:03, jr wrote:

> proof of concept (640x480 image source). ~20 minutes 😉

This is a good example to show the value of the yuqk / v4.0 idea of providing a 'munctions.inc' / macro-defined functions include.

The yuqk fork has no 'eval_pigment', but it does have a munction called M_evaluate_pigment(). The following runs in about 4.5 seconds, elapsed, on my old i3.

In this case, I think, you can mostly emulate what was done with M_evaluate_pigment() in official releases of POV-Ray - to some degree or other.

----- snip ------

```pov
#version unofficial 3.8; // yuqk
#if (file_exists("version.inc"))
  #include "version.inc"
#end
#if (!defined(Fork_yuqk))
  #error "This POV-Ray SDL code requires the yuqk fork."
#end

global_settings { assumed_gamma 1 }
#declare Grey50 = srgb <0.5,0.5,0.5>;
background { Grey50 }

#declare VarOrthoMult =
  2.0/max(image_width/image_height,image_height/image_width);
#declare Camera01z = camera {
  orthographic
  location <0,0,-2>
  direction z
  right VarOrthoMult*x*max(1,image_width/image_height)
  up VarOrthoMult*y*max(1,image_height/image_width)
}

#declare PigIm = pigment {
  image_map {
    png "Test680x480.png"
    gamma srgb
    interpolate 0
  }
  scale <640,480,1>
};

#include "munctions.inc"
// Following defines FnPg00() so it can be used directly.
#declare Vec00 =
  M_evaluate_pigment("FnPg00",PigIm,<0,0,0>,false);

//--- scene ---
union {
  #for (R,0,479)
    #for (C,0,639)
      sphere {
        <(C+.5),(R+.5),0>, .495
        texture {
          pigment {
            // Can call 'munction' repeatedly too, but it's
            // 2x slower than FnPg00() due to the macro call.
            //color
            //M_evaluate_pigment("FnPg00",PigIm,<C,R,0>,false)
            color FnPg00(C,R,0)
          }
          finish {emission 1}
        }
      }
    #end
  #end
  scale <1/640,1/480,1>
  translate <-0.5,-0.5,0>
  scale <VarOrthoMult*max(1,image_width/image_height),
         VarOrthoMult*max(1,image_height/image_width),
         1>
}
camera { Camera01z }
```

----- snip ------

Attachments:

- jr.png (33 KB)


On 12/1/23 09:13, William F Pokorny wrote:

> This a good example

Apologies for attaching the pointless image. Foggy mind / autopilot :-(

Bill P.


hi,

William F Pokorny <ano### [at] anonymous org> wrote:

> On 12/1/23 02:03, jr wrote:
> > proof of concept (640x480 image source). ~20 minutes 😉
> ...
> The yuqk fork has no 'eval_pigment', but it does have a munction called
> M_evaluate_pigment(). The following runs in about 4.5 seconds,
> elapsed, on my old i3.

that's pretty good. modifying my posted code to junk the array and call 'eval_pigment' inside the 'texture{}', I get parsing down to just under 5.5 secs (plus ~1s render) using the beta.2.

have not tried to install the renamed branch yet, will try later tonight to get your code to run with the last-but-one-before-renaming version.

> Apologies for attaching the pointless image. Foggy mind / autopilot :-(

:-)

@Ilya

sorry about misspelling your name in the posted scene code, have corrected my local copy.


regards, jr.
