From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 27 Apr 2018 09:25:43

Message: <5ae324d7$1@news.povray.org>

On 04/26/2018 10:16 AM, Bald Eagle wrote:

> William F Pokorny <ano### [at] anonymous org> wrote:

>

...

>

> Just for clarification, POV-Ray uses:

> bisection

> regula falsa

> Newton's method, and

> the Sturmian method

> ?

> Or are some of those the same?

>

> and - where is this all located in the source code?

> Because I'm a hopeless intellectual masochist like that.

>

Thanks. Suppose I've been exposed to some of the concepts in the past,

but trust me, I'm climbing the learning curve/mountain(a) with this

stuff. There is much I don't understand. My standard set of test scenes

number 195 at present, which have corrected many of my missteps.

You can find most of the code in source/core/math/polynomialsolver.cpp.

There are three custom solvers in solve_quadratic(), solve_cubic() and

solve_quartic() for orders 2 through 4. I've hardly looked at these.

In polysolve / 'sturm' - a sturm chain based bisection method is used in

combination with regula_falsa(). Value based bisection isn't used at

in the sturm chain are, in my present version, the original and its

normalized derivative. Who knows, might play with it as an alternative

or adjunct to regula_falsa(). The spin is it's really fast when it works

- but sometimes it doesn't.
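For readers unfamiliar with the method named above, here is a minimal, generic sketch of regula falsi (false position). It is not POV-Ray's regula_falsa(); the name regulaFalsi, the tolerance and the iteration cap are illustrative assumptions. It assumes the bracket [a, b] already holds a sign change, which is the job the sturm chain isolation does upstream.

```cpp
#include <cmath>

// Generic false-position root finder (illustrative, not POV-Ray code).
// Requires f(a) and f(b) to have opposite signs; returns NaN otherwise.
template <typename F>
double regulaFalsi(F f, double a, double b, double tol = 1e-12, int maxIter = 200)
{
    double fa = f(a);
    double fb = f(b);
    if (fa * fb > 0.0)
        return std::nan("");            // no sign change in the bracket

    double x = a;
    for (int i = 0; i < maxIter; ++i)
    {
        // Intersection of the chord through (a, fa) and (b, fb) with y = 0.
        x = (a * fb - b * fa) / (fb - fa);
        const double fx = f(x);
        if (std::fabs(fx) < tol)
            break;
        // Keep the sub-interval that still brackets the sign change.
        if ((fx < 0.0) == (fa < 0.0)) { a = x; fa = fx; }
        else                          { b = x; fb = fx; }
    }
    return x;
}
```

Called as `regulaFalsi([](double x) { return x * x - 2.0; }, 1.0, 2.0)` it converges toward the square root of 2. One end of the bracket can stay fixed for many iterations, which is one reason the method is usually paired with another isolation step.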

Near term looking more at the missing root issue - some(b) of which was

addressed with (9), but the rest comes to the roots having collapsed

toward each other so severely the sturm chain root counting / isolation

falls apart. Not looking like it will be easy to mitigate...
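To make "sturm chain root counting" concrete, below is a small textbook-style sketch of counting distinct real roots in an interval from sign changes in a Sturm sequence. It is an assumption-laden illustration and not the polysolve() implementation: the Poly alias, the function names and the eps trimming threshold are all made up for the example.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Coefficients in ascending order: c[0] + c[1]*x + c[2]*x^2 + ...
using Poly = std::vector<double>;

// Drop highest-order coefficients whose magnitude is below eps.
void trimLeading(Poly& p, double eps)
{
    while (p.size() > 1 && std::fabs(p.back()) < eps) p.pop_back();
}

Poly derivative(const Poly& p)
{
    Poly d;
    for (std::size_t i = 1; i < p.size(); ++i) d.push_back(p[i] * static_cast<double>(i));
    if (d.empty()) d.push_back(0.0);
    return d;
}

// Negated remainder of a / b; b must have a non-zero leading coefficient.
Poly negatedRemainder(Poly a, const Poly& b, double eps)
{
    while (a.size() >= b.size())
    {
        const double f = a.back() / b.back();
        const std::size_t shift = a.size() - b.size();
        for (std::size_t i = 0; i < b.size(); ++i) a[i + shift] -= f * b[i];
        a.pop_back();                               // leading term has cancelled
        if (a.empty()) { a.push_back(0.0); break; }
    }
    for (double& c : a) c = -c;
    trimLeading(a, eps);
    return a;
}

std::vector<Poly> sturmChain(Poly p, double eps)
{
    trimLeading(p, eps);
    std::vector<Poly> chain{p, derivative(p)};
    while (chain.back().size() > 1)
    {
        Poly r = negatedRemainder(chain[chain.size() - 2], chain.back(), eps);
        if (r.size() == 1 && std::fabs(r[0]) < eps) break;  // zero remainder
        chain.push_back(r);
    }
    return chain;
}

double eval(const Poly& p, double x)
{
    double y = 0.0;
    for (std::size_t i = p.size(); i-- > 0;) y = y * x + p[i];  // Horner
    return y;
}

int signChanges(const std::vector<Poly>& chain, double x)
{
    int changes = 0, prev = 0;
    for (const Poly& p : chain)
    {
        const double y = eval(p, x);
        const int s = (y > 0.0) - (y < 0.0);
        if (s != 0) { if (prev != 0 && s != prev) ++changes; prev = s; }
    }
    return changes;
}

// Number of distinct real roots in (lo, hi].
int countRoots(const Poly& p, double lo, double hi, double eps = 1e-10)
{
    const std::vector<Poly> chain = sturmChain(p, eps);
    return signChanges(chain, lo) - signChanges(chain, hi);
}
```

For (x-1)(x-2)(x-3), stored as {-6, 11, -6, 1}, countRoots over (0, 4] gives 3 and over (1.5, 2.5] gives 1. When roots sit very close together the remainders in the chain become tiny, so the signs they contribute are dominated by rounding error, which is the failure mode described above.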

Bill P.

(a) - Trying to get out of the media whirlpool! :-)

(b) - Where the sturm chain root counting and isolation stuff still

works despite how close the roots are.

From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 29 Apr 2018 16:52:54

Message: <5ae630a6$1@news.povray.org>

On 03/30/2018 08:21 AM, William F Pokorny wrote:

>

...

> Bill P.

While studying the missing root problem I finished off some initial

lower and upper root bound estimation code aimed at polysolve()

performance. The update is at:

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

The update is directly related to a sub-discussion of issue:

https://github.com/POV-Ray/povray/issues/236

Currently the upper bound method(d) is hard coded to run always. Longer

term, and given I've found some POV-Ray objects such as blob already

calculate a 'max_bounds' value, I'm thinking about a change to the

Solve_Polynomial(), polysolve() calls to add a parameter where:

a) if > 0 value, we'd be passing a known upper bound for all roots.

b) if == 0, we'd do what we do today.

c) if == -1, we'd run the original Cauchy's bound estimate method good

for the lowest value root. In some applications, that first root might

be all we need.

d) if == -2, we'd run an estimator based on Cauchy's method from a 2009

paper(z) found via:

https://en.wikipedia.org/wiki/Properties_of_polynomial_roots#Other_bounds

and good for the upper bound of all roots.
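For reference, the classic textbook form of Cauchy's bound says every root x of a_n x^n + ... + a_0 satisfies |x| <= 1 + max(|a_i / a_n|) over i < n. The sketch below implements only that classic form; it is not necessarily either of the two variants in the branch, and the name cauchyRootBound is an assumption for the example.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Classic Cauchy bound on the magnitude of all roots (illustrative only).
// coef is in ascending order and coef.back() must be non-zero.
double cauchyRootBound(const std::vector<double>& coef)
{
    const double lead = std::fabs(coef.back());
    double largestRatio = 0.0;
    for (std::size_t i = 0; i + 1 < coef.size(); ++i)
        largestRatio = std::max(largestRatio, std::fabs(coef[i]) / lead);
    return 1.0 + largestRatio;
}
```

For x^3 - 6x^2 + 11x - 6, whose roots are 1, 2 and 3, this returns 12. The bound is cheap but loose, which is the trade-off behind preferring a caller-supplied value such as a blob's max_bounds when one is available.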

There are better bound estimators, but all I've looked at are more

complex - and several I don't understand. In any case I doubt we'd buy

back the performance given our equation orders are tiny compared to those

aimed at by the more generic solvers for which these better estimators

were created. Many others also use complex numbers.

Bill P.

(z) - Had to tweak it slightly due to two sor fails in testing. Due to the

surprise with sturm-cubic, it's on my list to enable sturm-quadratic in

Solve_Poly() to do some more testing.

Performance info:

    ------------ /usr/bin/time povray -j -wt1 -fn -p -d -c lemonSturm.pov
     0) master   30.22user 0.04system 0:30.89elapsed
     9) 687f57b  19.44user 0.01system 0:20.06elapsed  -35.67%
    10) 0f6afcc  14.77user 0.02system 0:15.36elapsed  -24.02%  -51.13%

From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 8 May 2018 09:11:34

Message: <5af1a206$1@news.povray.org>

On 03/30/2018 08:21 AM, William F Pokorny wrote:

>....

Sturm / polysolve() investigation. Chapter ??. Corruption of the Sturm Chain.

Let me start this post with the news I found something amiss in the

bound estimate code recently added to:

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

despite having run 200 plus scenes and image compares without a difference.

Caught the issue while playing with the idea of trading off the one root

vs all roots method automatically. Not trusting the implementation;

added an auto-bound check for lost roots and an assert and BOOM. So, I

have more to do there even if at worst it's leaving a sanity check in

place that resorts to an upper bound of MAX_DISTANCE. Back to that later.

---

Today let us elsewhere ponder. The function modp() does the bulk of the

sturm chain set up. At the end of modp is this bit of code:

    k = v->ord - 1;
    while (k >= 0 && fabs(r->coef[k]) < SMALL_ENOUGH)
    {
        r->coef[k] = 0.0;
        k--;
    }
    r->ord = (k < 0) ? 0 : k;

It individually prunes equations in the sturm chain at a depth of >= 2

where the leading coefficients are small.
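The pruning step can be tried in isolation with the sketch below. The Polynomial struct, the MAX_ORDER value and the pruneLeading name are stand-ins assumed for the example; only the loop body mirrors the snippet quoted above.

```cpp
#include <cmath>

constexpr int    MAX_ORDER    = 35;      // assumed array bound for the example
constexpr double SMALL_ENOUGH = 1e-10;   // value discussed in this post

// Hypothetical stand-in for the solver's polynomial record.
struct Polynomial
{
    int    ord;                  // current order
    double coef[MAX_ORDER + 1];  // coef[i] multiplies x^i
};

// Zero out tiny leading coefficients starting at startOrder and lower the
// recorded order to the first coefficient that survives.
void pruneLeading(Polynomial& r, int startOrder)
{
    int k = startOrder;
    while (k >= 0 && std::fabs(r.coef[k]) < SMALL_ENOUGH)
    {
        r.coef[k] = 0.0;
        --k;
    }
    r.ord = (k < 0) ? 0 : k;
}
```

With coefficients {1.0, 2.0, 1e-12, 1e-15} and startOrder 3, the two highest terms are zeroed and the order drops to 1. Only leading terms are touched; a tiny coefficient sitting below a large one is left alone.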

In Mr. Enzmann's original 1.0 code, 'SMALL_ENOUGH' was 1e-20. In the

days of single floats likely meant lots of looping and looking while

doing little. As of POV-Ray v3.0 'SMALL_ENOUGH' was drastically

increased to 1e-10.

Over the past week or more I've been looking again at achieving better

accuracy. Tried quite a lot of things finally coding up a __float128

version of polysolve all aiming at more accuracy. Finally realized the

remaining issues are far from all about accuracy. Sometimes the

ray-surface equation coming in is simply messed up with respect to any

sign change based methods for isolating the roots - unless we cheat.

For best accuracy while avoiding spurious roots with ill-formed

equations, the value used should be right at the best accuracy for the

floating point type. This would be 1e-7 for floats, 1e-15 for doubles

and 1e-33 for 128 bit floats. Well behaved equations, with no zero,

near-zero or cross-term-zero terms arising during the creation of the sturm

chain, can support much smaller minimum values, but in a

raytracer we cannot count on the good behavior of the ray-surface equations.
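The order-of-magnitude figures above can be checked against what the compiler reports. This is a small sketch using std::numeric_limits; the helper name printEpsilons is an assumption, and long double is printed only for comparison since its width is platform dependent (it is not necessarily a 128 bit type).

```cpp
#include <cstdio>
#include <limits>

// Print the machine epsilon (gap between 1.0 and the next representable
// value) for the standard floating point types.
void printEpsilons()
{
    std::printf("float       epsilon: %g\n",
                static_cast<double>(std::numeric_limits<float>::epsilon()));
    std::printf("double      epsilon: %g\n",
                std::numeric_limits<double>::epsilon());
    std::printf("long double epsilon: %Lg\n",
                std::numeric_limits<long double>::epsilon());
}
```

On IEEE 754 platforms the float value is on the order of 1e-7 and the double value on the order of 1e-16, in line with the rough figures quoted above.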

So, why is that 'SMALL_ENOUGH' value sitting at a much larger 1e-10

value for doubles? My guess is someone understood or stumbled upon a

wonderful bit of serendipity in how the pruning of the sturm chain

equations effectively works. Namely, when we have ill-formed equations

with respect to sign change based root isolation, the pruning value can

be adjusted upward and by 'magic' some or all of the 'ill' in the

incoming equation is pruned and the root isolation works well enough to

hand the regula-falsi method a problem it can solve.

The downside is you cannot universally set this value above the minimum

for the float size when you have well behaved incoming equations or you

prune off sign change information you need to accurately see the

intervals in which roots exist. We are using a fixed value today.

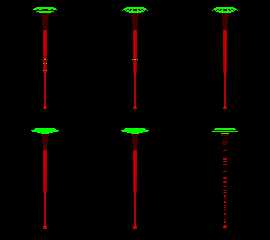

To better demonstrate, attaching two images. In both the left column is

what the current master renders. The remaining columns to the right are

rendered with a float128 version of polysolve to better drive home the

point that our primary need isn't better overall accuracy, but more

accurate root isolation.

In the latheQuadratic.png image the top row is the point set from last

fall's lathe issue used in a quadratic spline. The top row is a

perspective camera but zoomed way out and using a camera angle of 2. The

bottom row is the same input except with an orthographic camera set

up(1). In the middle column is what the current patched branch renders

using the SMALL_ENOUGH 1e-10 value. The value has been set so as to

behave well for the orthographic case, but this causes issues for the

perspective case. In the right column SMALL_ENOUGH has been set to

float128's minimum value of 1e-33. This orthographic case falls apart

showing the underlying ill-formed for sign change isolation problem -

while the perspective camera case is OK.

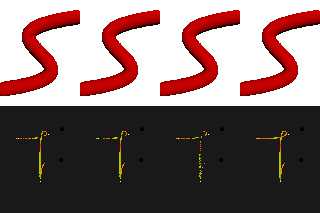

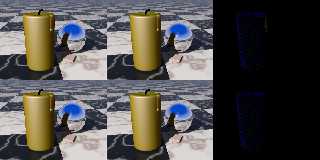

In the sphere_sweeps.png image we show the same progression(2) in

columns 1-3, but in column 4 we dial in a higher SMALL_ENOUGH value which

prunes off just the right amount of the sturm chain for the root

isolation to work well.

I'm busy with real life for a while starting later today, but I think we

likely have a path to cleaner renders in these fringe cases given our

sturm chain based method.

We're working in a ray tracer and it is the ray's dx,dy,dz going to zero

which primarily causes the ill-formed for sign based root isolation

issue. We'll need to detect problem directions in the object's code and

create some calculation(3) which we'd pass all the way into the modp()

pruning. Possible I think.

Bill P.

(1) - It's not just the orthographic camera which tends to pull out the

worst case solver issues. Shadow rays with cylindrical and parallel

lights too. There are too the occasional rays which line up orthogonally

in one or compound dimensions.

(2) - Except running with jitter off. Our AA implementations are grid

based which unfortunately tends to line up with the compound (diagonal

type) missing root issues. Thinking something like a Halton sequence

based AA never keeping the center pixel might really help in these cases

by getting the samples more often off the problem ray / surface

grid-axis alignments.

(3) - Perhaps coupled with some ability for users to apply a multiplier

from the SDL?

Attachments:

Download 'lathequadratic.png' (17 KB)

Download 'sphere_sweeps.png' (63 KB)

From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 18 May 2018 13:00:10

Message: <5aff069a@news.povray.org>

On 03/30/2018 08:21 AM, William F Pokorny wrote:

....

Sturm / polysolve() investigation. Those Difficult Coefficients.

When we have 4th order equations we are today running this piece of code:

    /* Test for difficult coeffs. */
    if (difficult_coeffs(4, c))
    {
        sturm = true;
    }

in the Solve_Polynomial wrapper for sturm/polysolve() and three specific

solvers including the one for 4th order equations in solve_quartic().

The difficult_coeffs() function scans the coefficients looking for large

differences in values. If found, users end up running sturm for some rays

no matter what was specified in the scene SDL. The code existed in the

original POV-Ray 1.0, but in a slightly modified form and used with an

older version of solve_quartic().
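The idea of scanning for "large differences in values" can be sketched as a ratio test between the largest coefficient magnitude and each non-zero coefficient. This is an illustration of the concept only: the function name hasWideCoefficientSpread and the maxRatio parameter are assumptions, and the actual difficult_coeffs() code may differ in both its test and its threshold.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Returns true when some non-zero coefficient is smaller than the largest
// coefficient by more than maxRatio (illustrative heuristic only).
bool hasWideCoefficientSpread(const std::vector<double>& coef, double maxRatio)
{
    double biggest = 0.0;
    for (double c : coef)
        biggest = std::max(biggest, std::fabs(c));
    if (biggest == 0.0)
        return false;                       // all-zero input: nothing to flag

    for (double c : coef)
        if (c != 0.0 && biggest / std::fabs(c) > maxRatio)
            return true;
    return false;
}
```

A test like this only looks at magnitudes, so it can flag equations the quartic solver handles fine and miss ones it does not, which matches the behaviour reported below.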

I've had the feeling for a while the code was probably doing nothing

useful for us today. I was almost completely right. Close enough it's

going into the bit bucket with this set of commits.

I set the code up to crash any time difficult_coeffs() returned true.

Scanned hundreds of scenes. Turned up helix.pov and a handful of scenes

with blobs. Ran those hard coded to use only solve_quartic() and not a

single difference in result. Changed the code above to return

immediately with no roots on difficult_coeffs() returning true. The

helix scene was unchanged as the 'rays' were not part of the resultant

shape.

The blobs were a different story. Many rays are being re-directed to

sturm / polysolve for no benefit given the current solve_quartic code.

To oblivion with that code you all say!

I thought the same, but remembered we have known blob accuracy issues in

https://github.com/POV-Ray/povray/issues/187, maybe half a dozen related

problem scenes in my test space. Plus to support the subsurface feature

Christoph added this bit of code in blob.cpp to avoid visible seams:

DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

personal version of POV-Ray which fixes all the blob accuracy issues

except for the subsurface one.

Given difficult_coeffs() is almost exclusively active with blobs,

updated blob.cpp to run with accuracy addressing the issues in the

previous paragraph. With this update in place, we do occasionally see

cases where the bump into the sturm / polysolve method is necessary.

However, the automatic difficult_coeffs() method doesn't currently catch

all of the fringe case rays.

It could be adjusted so as to push more solve_quartic rays into sturm /

polysolve, but it's already the case most difficult-coefficient rays are

unnecessarily run in sturm / polysolve when solve_quartic would work

just fine. The right thing to do is let users decide when to use 'sturm'

and this set of commits dumps the difficult_coeffs() code.

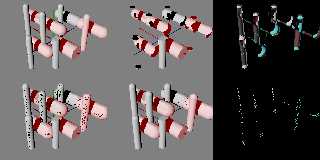

Examples.

The attached image is a scene originally created to explore default

texturing related to negative blobs, but it demonstrates nicely the

issues described above.

In the top row we have on the left the image rendered with the blob

accuracy unmodified and all the difficult_coeffs() code running as it

does today. In the middle image the 'if difficult_coeffs()' test has

been hard coded to return no roots. On the right the difference image

shows all the rays running in the sturm / polysolve method which all run

fine - and faster given the blob set up - with solve_quartic().

In the second row we are running with the new more accurate blob.cpp.

Further, the blobs in the scene have been changed to have a threshold of

0.001 instead of 0.1 - values this low are not common because you don't

get much blobbing.

The first column of the second row was rendered with a version of

POV-Ray compiled to always force the use of solve_quartic(). The middle

column restores the 'if difficult_coeffs()' test and internal swap to

sturm / polysolve. It does help as shown in column 3. However column 2

shows the already too aggressive use of sturm / polysolve with blobs is

not enough to automatically 'fix' all the solve_quartic issues. Running

with sturm is clean in all cases.

Aside: The potentials as glows image I did sometime back had what I

thought were media related speckles. I applied very aggressive and

expensive AA to hide them as I usually do with media. Turns out using

sturm would have been the better choice. I wanted no blobbing for the

blob cylinders and used a threshold of 1e-5. It was a case of outrunning

the effectiveness of the 'if difficult_coeffs() patch' with existing

blob accuracy, but I didn't realize it at the time.

Updates at:

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

Performance and specific commit details below.

Bill P.

Performance info:

    ------------ /usr/bin/time povray -j -wt1 -fn -p -d -c lemonSturm.pov
     0) master   30.22user 0.04system 0:30.89elapsed
    10) 0f6afcc  14.77user 0.02system 0:15.36elapsed           -51.13%
    11) 0f9509b  14.77user 0.01system 0:15.36elapsed
    12) c590c0e  15.08user 0.01system 0:15.71elapsed  +2.10%
    13) 4ab17df  14.88user 0.02system 0:15.51elapsed  -1.33%   -50.76%
    14) 78e664c  --- NA ---
    15) 5d8cedc  --- NA ---
    16) 21387e2  --- NA ---

    ---- povray subsurface.pov -p +wt2 -j -d -c -fn
    master   sturm on   540.89user 0.06system 4:31.15elapsed
    21387e2  sturm on   347.29user 0.07system 2:54.42elapsed  -35.79%
    master   sturm off  286.99user 0.06system 2:24.28elapsed
    21387e2  sturm off  278.54user 0.05system 2:20.12elapsed   -2.94%

    If blob container needs sturm. 278.54 -> 347.29 +24.68%

11) Changing polysolve visible_roots and numchanges zero test to <=0.

Depending upon value used for reducing and trimming the sturm chain

equations in modp, the sphere_sweep b_spline functions can return

negative root counts when there are no roots.

12) Adding sanity test of upper bound Cauchy method result.

In practice have occasionally found roots slightly above upper bound.

Perhaps related to how sturm chain is tweaked during pruning. Sort it later.

13) Removing pointless operations in polysolve and Solve_Polynomial.

visible_roots() passed a couple integer pointers which it set prior to

return but the values were never used in polysolve itself.

When fourth order equations were seen, a test call to difficult_coeffs() was

made which, if true, would set sturm true - even when sturm was already true.

14) Updating blob accuracy so better synchronized with other shapes.

Fix for GitHub issue #187

Returned minimum intersection depths now set to MIN_ISECT_DEPTH.

Internal determine_influences() intersect_<sub-element> functions now use

a >0.0 tolerance, as the subsurface feature already did. Anything more opens up gaps

or jumps where the sub-element density influences are added too late or

dropped too early. The change does create ray equations slightly more

difficult to solve, which was likely the reason for the original,

largish, v1.0 1e-2 value. The sturm / polysolve solver is sometimes

necessary over solve_quartic().

15) Removing difficult_coeffs() and related code in Solve_Polynomial().

Code not completely effective where sturm / polysolve needed and very

costly in most scenes with 4th order equations due to use of sturm over

solve_quartic() where solve_quartic() works fine and is much faster.

16) Reversing change to sor.cpp as unneeded with updated polysolve().

Not verified, but suspect bump made in commit 3f2e1a9 to get sign change

was previously needed where regula_falsa() handed single root problem

with 0 to MAX_DISTANCE range. In any case, the patch is no longer needed

with updated polysolve() code.

Attachments:

Download 'storydifficultcoeff.png' (110 KB)

From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 18 May 2018 13:10:55

Message: <5aff091f$1@news.povray.org>

On 05/18/2018 01:00 PM, William F Pokorny wrote:

> On 03/30/2018 08:21 AM, William F Pokorny wrote:

> ....

>

> Sturm / polysolve() investigation. Those Difficult Coefficients.

>

I'll add quickly this set of updates fixes the remaining media speckles

for the scene which started this thread - see attached image. The

general media speckling issue is better with more accurate roots, but

not completely fixed.

Bill P.

Attachments:

Download 'gailshawexampleclean.png' (167 KB)

William F Pokorny <ano### [at] anonymous org> wrote:

> On 03/30/2018 08:21 AM, William F Pokorny wrote:

>

[snip]

> I've had the feeling for a while the code was probably doing nothing

> useful for us today. I was almost completely right. Close enough it's

> going into the bit bucket with this set of commits.

> ...

>

> The blobs were a different story. Many rays are being re-directed to

> sturm / polysolve for no benefit given the current solve_quartic code.

> To oblivion with that code you all say!

>

[snip]

Your detailed research into these arcane issues is really appreciated. I can't

say I understand some of it (most of it!), but we are all lucky to have your

keen eye probing the code.

From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 18 May 2018 22:16:31

Message: <5aff88ff@news.povray.org>

On 18.05.2018 at 19:00, William F Pokorny wrote:

> Christoph added this bit of code in blob.cpp to avoid visible seams:

>

> DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

>

> I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

> personal version of POV-Ray which fixes all the blob accuracy issues

> except for the subsurface one.

For subsurface rays it's safer to keep it at exactly 0.

The `depthTolerance` mechanism, to all of my knowledge, is in there to

make sure that transmitted, reflected or shadow rays don't pick up the

original surface again.

This problem is absent in rays earmarked as subsurface rays (presuming

we have high enough precision) because such rays are never shot directly

from the original intersection point, but a point translated slightly

into the object. So subsurface rays already carry an implicit

depthTolerance with them.

> It could be adjusted so as to push more solve_quartic rays into sturm /

> polysolve, but it's already the case most difficult-coefficient rays are

> unnecessarily run in sturm / polysolve when solve_quartic would work

> just fine. The right thing to do is let users decide when to use 'sturm'

> and this set of commits dumps the difficult_coeffs() code.

Would it make sense to, rather than ditching the test entirely, instead

have it trigger a warning after the render (akin to the isosurfaces

max_gradient info messages) informing the user that /if/ they are seeing

artifacts with the object they may want to turn on sturm (provided of

course sturm is off)?

From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 19 May 2018 09:49:07

Message: <5b002b53@news.povray.org>

On 05/18/2018 10:16 PM, clipka wrote:

> On 18.05.2018 at 19:00, William F Pokorny wrote:

>

>> Christoph added this bit of code in blob.cpp to avoid visible seams:

>>

>> DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

>>

>> I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

>> personal version of POV-Ray which fixes all the blob accuracy issues

>> except for the subsurface one.

Formerly a blob's 'DEPTH_TOLERANCE' value was used for both the returned

from ray origin intersection tolerance and the internal

determine_influences() 'min_dist' tolerances - though they control

different things. The internal sub-element influences mechanism now uses

a >0.0 test. It should always have, I believe, except it does sometimes create

slightly more difficult ray-surface equations - which is my bet for why

the original v1.0 code didn't.

>

> For subsurface rays it's safer to keep it at exactly 0.

>

> The `depthTolerance` mechanism, to all of my knowledge, is in there to

> make sure that transmitted, reflected or shadow rays don't pick up the

> original surface again.

>

It depends on which intersection depth tolerance you mean. There are

variations of the mechanism.

a) What you say is close to my understanding for the continued and

shadow ray mechanisms which exists outside the code for each shape.

These mechanisms live in the 'ray tracing' code. I'd slightly reword

what you wrote to say these mechanisms prevent the use of ray - surface

intersections within some distance of a previous intersection. This

distance is today usually SMALL_TOLERANCE or 1e-3. SHADOW_TOLERANCE has

also been 1e-3 for more than 25 years and was only ever something

different because Enzmann's initial solvers had a hard coded shadow ray

filter at a larger than SMALL_TOLERANCE value. There is too

MIN_ISECT_DEPTH.

b) Root / intersection depth tolerance filters exist in the code for

each shape/surface too. These control only whether all roots /

intersections for the shape are greater than a minimum intersection

depth relative to the incoming ray's origin or less than MAX_DISTANCE.

They do not control how closely ray surface intersections are spaced

from each other(1)(2). True the shape depth tolerance can influence

continued or shadow ray handling as seen by being 'different' than those

used in (a). However, we should aim for (b) <= (a) depth tolerance

alignment.
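The per-shape filter described in (b) amounts to keeping only roots inside an open depth window measured from the ray origin. A minimal sketch follows, assuming the name filterRoots; MIN_ISECT_DEPTH uses the 1e-4 quoted in this thread, while the MAX_DISTANCE value here is only a placeholder assumption.

```cpp
#include <vector>

constexpr double MIN_ISECT_DEPTH = 1e-4;  // value quoted in this thread
constexpr double MAX_DISTANCE    = 1e7;   // placeholder assumed for the example

// Keep roots strictly between minDepth and maxDepth along the ray.
// Spacing between surviving roots is deliberately not examined.
std::vector<double> filterRoots(const std::vector<double>& roots,
                                double minDepth, double maxDepth)
{
    std::vector<double> kept;
    for (double t : roots)
        if (t > minDepth && t < maxDepth)
            kept.push_back(t);
    return kept;
}
```

Given roots {-1.0, 5e-5, 0.5, 2.0, 1e8}, a minDepth of MIN_ISECT_DEPTH keeps {0.5, 2.0}, while a minDepth of 0.0 also keeps the 5e-5 root, leaving it to the (a) mechanisms to reject it later if needed.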

On seeing your subsurface code running the (b) variety at >0.0, I had

the thought, why not do this with all shapes / surfaces? Let (a) filter

the roots for its need. This would get us closer to a place where we'd

be able to make SMALL_TOLERANCE & MIN_ISECT_DEPTH smaller so people

don't have to so often do the ugly scale up their scene trick. There is

no numerical reason we cannot support generally much smaller scene

dimensions than we do.

Well it turns out returning all the roots >0.0 in each shape's (b)

mechanism costs cpu time - about 1% of a 3% increase with the internal

blob influence min_dist change for a glass blob scene. Time is saved

where intersections which (a) would have to filter later are just never

returned by (b). This experiment is why I set blob.cpp's DEPTH_TOLERANCE

= MIN_ISECT_DEPTH - which just happens to also be the 1e-4 I've long been

using. I currently think the (b) depth for each shape / surface should

be migrated to MIN_ISECT_DEPTH over time.

(1) - I expect CSG generally needs all the roots regardless of spacing,

but this is code I've never reviewed.

(2) - Mostly true for what I've seen. But who knows, might be other

inbuilt shape, surface implementations do spatial root filtering for

internal reasons. The sphere_sweep's code seems to be doing a sort of a

root correction thing - with which you are far more familiar than me.

> This problem is absent in rays earmarked as subsurface rays (presuming

> we have high enough precision) because such rays are never shot directly

> from the original intersection point, but a point translated slightly

> into the object. So subsurface rays already carry an implicit

> depthTolerance with them.

>

I tested subsurface scenes with my updates and believe the changes are

compatible without the need for the returned depth to be >0.0. Thinking

about it another way, if the 'returned' min depth needed to be >0.0 for

subsurface to work, all shapes would need that conditional for >0.0 and

this is not the case. Believe we're good, but we can fix things if not.

>> It could be adjusted so as to push more solve_quartic rays into sturm /

>> polysolve, but it's already the case most difficult-coefficient rays are

>> unnecessarily run in sturm / polysolve when solve_quartic would work

>> just fine. The right thing to do is let users decide when to use 'sturm'

>> and this set of commits dumps the difficult_coeffs() code.

>

> Would it make sense to, rather than ditching the test entirely, instead

> have it trigger a warning after the render (akin to the isosurfaces

> max_gradient info messages) informing the user that /if/ they are seeing

> artifacts with the object they may want to turn on sturm (provided of

> course sturm is off)?

>

We should think about it, but currently I answer no to your specific

question. The difficult coefficient method is wildly inaccurate in

practice unlike the warning with isosurfaces.

A warning would be good if we can figure out something better... Mostly

it will be obvious - and more often obvious with this update. If running

blobs and you see speckles / noise - try sturm. Advice good today by the

way. Advice I should have taken with my own potential glow scene over

extreme AA - but the code dropped hid much of the solve_quartic issue

from me and I thought media the cause.

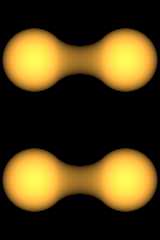

I ran test scenes where knowing you need sturm is pretty much impossible

to see without trying sturm and comparing images. For example, attached

is an image where I was aiming for a fruit looking thing with a faked

subsurface effect using multiple concentric blobs positive and negative

- taking advantage of blob's texture blending.

In the left column we see the <= master (3.8) current result where the

internal blob surfaces are not accurately resolved. The right column

shows the difference between column 1 and 2 for each row.

The top row, middle shows the new all solve_quartic() solution which in

fact has bad roots on a surface internal to the outer ones. Negative

blobs in addition to tiny thresholds tend to trip the solve_quartic

failures. We cannot 'see' we've got bad roots... The bottom row is the

accurate - though it happens less attractive - sturm result.

Reasonably accurate warnings would be good. Wonder if there is a way to

pick the issue up in solve_quartic itself or in blob.cpp knowing sturm

not set....

Bill P.

Attachments:

Download 'diffcoeffhidden.png' (160 KB)

From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 20 May 2018 12:10:08

Message: <5b019de0$1@news.povray.org>

On 05/18/2018 10:16 PM, clipka wrote:

> On 18.05.2018 at 19:00, William F Pokorny wrote:

>

>> Christoph added this bit of code in blob.cpp to avoid visible seams:

>>

>> DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

>>

>> I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

>> personal version of POV-Ray which fixes all the blob accuracy issues

>> except for the subsurface one.

>

> For subsurface rays it's safer to keep it at exactly 0.

>

...

>

> This problem is absent in rays earmarked as subsurface rays (presuming

> we have high enough precision) because such rays are never shot directly

> from the original intersection point, but a point translated slightly

> into the object. So subsurface rays already carry an implicit

> depthTolerance with them.

>

Aside: 'exactly 0' unless we change blob.cpp code means >0.0.

I woke up this morning thinking more about your description of SSLT and

though I picked up no major differences in result, results were not

identical - but I didn't expect them to be. I'd not run the returned

root depth threshold at both 0.0 and 1e-4 (MIN_ISECT_DEPTH) and compared

results.

The top row in the attached image is without sturm, the bottom with. The

differences are very subtle, but can be seen after being multiplied by 50.

The left column is less my recent changes so min_dist and the return

depth >0.0 with sturm automatically getting used often in the upper left

image.

The middle column is the updated code, so the middle top is now using

strictly solve_quartic(), making the differences on the right

greater. The bottom, sturm-always row best shows the effect of not

returning roots <= 1e-4 from the ray origin.

My position is still the difference isn't large enough to be of concern,

but if so, maybe we should more strongly consider going to >0.0 or some

very small values for all shapes. Doing it only in blob.cpp doesn't make

sense to me.

Aside: MIN_ISECT_DEPTH doesn't exist before 3.7. Is its creation

related to the SSLT implementation?

Bill P.

Attachments:

Download 'sslt_rtrnrootquestion.png' (460 KB)

From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 20 May 2018 17:09:35

Message: <5b01e40f$1@news.povray.org>

On 20.05.2018 at 18:10, William F Pokorny wrote:

> My position is still the difference isn't large enough to be of concern,

> but if so, maybe we should more strongly consider going to >0.0 or some

> very small values for all shapes. Doing it only in blob.cpp doesn't make

> sense to me.

I guess the reason I changed it only for blobs was because there I

identified it as a problem - and I might not have been familiar enough

with the shapes stuff to know that the other shapes have a similar

mechanism.

After all, why would one expect such a mechanism if there's the "global"

MIN_ISECT_DEPTH?

Technically, I'm pretty sure the mechanism should be disabled for SSLT

rays in all shapes.

> Aside: MIN_ISECT_DEPTH doesn't exist before 3.7. Is its creation

> related to the SSLT implementation?

No, it was already there before I joined the team. The only relation to

SSLT is that it gets in the way there.

My best guess would be that someone tried to pull the DEPTH_TOLERANCE

mechanism out of all the shapes, and stopped with the work half-finished.

BTW, one thing that has been bugging me all along about the

DEPTH_TOLERANCE and MIN_ISECT_DEPTH mechanisms (and other near-zero

tests in POV-Ray) is that they're using absolute values, rather than

adapting to the overall scale of stuff. That should be possible, right?
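One way to picture a scale-adaptive test is to multiply a relative tolerance by some measure of scene or object size before comparing. The sketch below is purely illustrative: the name beyondMinDepth, the relTol parameter and the choice of what "scale" means (for example a bounding box extent) are all assumptions and do not describe existing POV-Ray behaviour.

```cpp
#include <cmath>

// Accept an intersection depth only if it exceeds a tolerance that grows
// and shrinks with the supplied scale (illustrative only).
bool beyondMinDepth(double depth, double scale, double relTol = 1e-4)
{
    return depth > relTol * std::fabs(scale);
}
```

With relTol at 1e-4, a depth of 1e-5 is rejected for a scene of scale 1.0 but accepted for one of scale 0.01, so a scene modelled at small dimensions would not need the scale-up trick mentioned earlier in the thread.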
