On 05/18/2018 10:16 PM, clipka wrote:

> On 18.05.2018 at 19:00, William F Pokorny wrote:

>

>> Christoph added this bit of code in blob.cpp to avoid visible seams:

>>

>> DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

>>

>> I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

>> personal version of POV-Ray which fixes all the blob accuracy issues

>> except for the subsurface one.

Formerly a blob's 'DEPTH_TOLERANCE' value was used for both the tolerance

on intersections returned relative to the ray origin and the internal

determine_influences() 'min_dist' tolerance - though they control

different things. The internal sub-element influences mechanism now uses

a >0.0 test. It always should have, I believe, except that it does sometimes

create slightly more difficult ray-surface equations - which is my bet for

why the original v1.0 code didn't.

>

> For subsurface rays it's safer to keep it at exactly 0.

>

> The `depthTolerance` mechanism, to all of my knowledge, is in there to

> make sure that transmitted, reflected or shadow rays don't pick up the

> original surface again.

>

It depends on which intersection depth tolerance you mean. There are

variations of the mechanism.

a) What you say is close to my understanding for the continued and

shadow ray mechanisms which exist outside the code for each shape.

These mechanisms live in the 'ray tracing' code. I'd slightly reword

what you wrote to say these mechanisms prevent the use of ray - surface

intersections within some distance of a previous intersection. This

distance is today usually SMALL_TOLERANCE or 1e-3. SHADOW_TOLERANCE has

also been 1e-3 for more than 25 years and was only ever something

different because Enzmann's initial solvers had a hard coded shadow ray

filter at a value larger than SMALL_TOLERANCE. There is also

MIN_ISECT_DEPTH.

b) Root / intersection depth tolerance filters exist in the code for

each shape/surface too. These control only whether all roots /

intersections for the shape are greater than a minimum intersection

depth relative to the incoming ray's origin or less than MAX_DISTANCE.

They do not control how closely ray surface intersections are spaced

from each other(1)(2). True, the shape depth tolerance can influence

continued or shadow ray handling where it differs from the tolerances

used in (a). However, we should aim for (b) <= (a) depth tolerance

alignment.
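
To make the (a) / (b) split concrete, here is a minimal sketch of the two filters as described above. It is not POV-Ray's actual code: the function names are made up for illustration, the 1e-4 and 1e-3 values are the ones quoted in this post, and the MAX_DISTANCE value of 1e7 is an assumption.

```cpp
#include <vector>

// Values as quoted in this thread; MAX_DISTANCE = 1e7 is an assumption.
constexpr double MIN_ISECT_DEPTH = 1e-4;
constexpr double SMALL_TOLERANCE = 1e-3;
constexpr double MAX_DISTANCE    = 1e7;

// (b) Hypothetical per-shape filter: keep only roots whose depth from the
// ray origin lies strictly between minDepth and MAX_DISTANCE.
std::vector<double> shapeDepthFilter(const std::vector<double>& roots,
                                     double minDepth = MIN_ISECT_DEPTH)
{
    std::vector<double> kept;
    for (double t : roots)
        if (t > minDepth && t < MAX_DISTANCE)
            kept.push_back(t);
    return kept;
}

// (a) Hypothetical tracer-level filter for continued / shadow rays: the ray
// starts at the previous intersection, so depths within 'tolerance' of the
// origin are treated as re-hits of the original surface and dropped.
std::vector<double> tracerDepthFilter(const std::vector<double>& depths,
                                      double tolerance = SMALL_TOLERANCE)
{
    std::vector<double> kept;
    for (double t : depths)
        if (t > tolerance)
            kept.push_back(t);
    return kept;
}
```

With roots at -0.5, 5e-5, 5e-4 and 2.0, the (b) filter keeps 5e-4 and 2.0, and the (a) filter then keeps only 2.0. Because (b) <= (a) here, the shape never hides a root the tracer might have wanted.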

On seeing your subsurface code running the (b) variety at >0.0, I had

the thought, why not do this with all shapes / surfaces? Let (a) filter

the roots for its need. This would get us closer to a place where we'd

be able to make SMALL_TOLERANCE & MIN_ISECT_DEPTH smaller so people

don't so often have to resort to the ugly trick of scaling up their scene. There is

no numerical reason we cannot support generally much smaller scene

dimensions than we do.

Well, it turns out returning all the roots >0.0 in each shape's (b)

mechanism costs cpu time - about 1% of a 3% increase with the internal

blob influence min_dist change for a glass blob scene. Time is saved

where intersections which (a) would have to filter later are just never

returned by (b). This experiment is why I set blob.cpp's DEPTH_TOLERANCE

= MIN_ISECT_DEPTH - which just happens to also be the 1e-4 I've long been

using. I currently think the (b) depth for each shape / surface should

be migrated to MIN_ISECT_DEPTH over time.

(1) - I expect CSG generally needs all the roots regardless of spacing,

but this is code I've never reviewed.

(2) - Mostly true for what I've seen. But who knows, there might be other

inbuilt shape / surface implementations which do spatial root filtering for

internal reasons. The sphere_sweep's code seems to be doing a sort of a

root correction thing - with which you are far more familiar than me.

> This problem is absent in rays earmarked as subsurface rays (presuming

> we have high enough precision) because such rays are never shot directly

> from the original intersection point, but a point translated slightly

> into the object. So subsurface rays already carry an implicit

> depthTolerance with them.

>

I tested subsurface scenes with my updates and believe the changes are

compatible without the need for the returned depth to be >0.0. Thinking

about it another way, if the 'returned' min depth needed to be >0.0 for

subsurface to work, all shapes would need that conditional for >0.0 and

this is not the case. Believe we're good, but we can fix things if not.

>> It could be adjusted so as to push more solve_quartic rays into sturm /

>> polysolve, but it's already the case most difficult-coefficient rays are

>> unnecessarily run in sturm / polysolve when solve_quartic would work

>> just fine. The right thing to do is let users decide when to use 'sturm'

>> and this set of commits dumps the difficult_coeffs() code.

>

> Would it make sense to, rather than ditching the test entirely, instead

> have it trigger a warning after the render (akin to the isosurfaces

> max_gradient info messages) informing the user that /if/ they are seeing

> artifacts with the object they may want to turn on sturm (provided of

> course sturm is off)?

>

We should think about it, but currently I answer no to your specific

question. The difficult coefficient method is wildly inaccurate in

practice unlike the warning with isosurfaces.

A warning would be good if we can figure out something better... Mostly

it will be obvious - and more often obvious with this update. If running

blobs and you see speckles / noise - try sturm. That advice is good today too,

by the way. It is advice I should have taken with my own potential glow scene

instead of extreme AA - but the code now dropped hid much of the solve_quartic

issue from me and I thought media was the cause.

I ran test scenes where knowing you need sturm is pretty much impossible

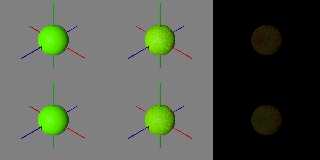

to see without trying sturm and comparing images. For example, attached

is an image where I was aiming for a fruit looking thing with a faked

subsurface effect using multiple concentric blobs positive and negative

- taking advantage of blob's texture blending.

In the left column we see the <= master (3.8) current result where the

internal blob surfaces are not accurately resolved. The right column

shows the difference between column 1 and 2 for each row.

The middle of the top row shows the new all solve_quartic() solution which in

fact has bad roots on a surface internal to the outer ones. Negative

blobs in addition to tiny thresholds tend to trip the solve_quartic

failures. We cannot 'see' we've got bad roots... The bottom row is the

accurate - though, as it happens, less attractive - sturm result.

Reasonably accurate warnings would be good. I wonder if there is a way to

pick the issue up in solve_quartic itself or in blob.cpp knowing sturm is

not set....
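
One conceivable way to pick the issue up, sketched here only as an idea and not tested against POV-Ray, is to substitute each returned root back into the quartic and count the roots whose residual is large relative to the size of the polynomial's terms. The helper name, the highest-order-first coefficient layout and the 1e-9 tolerance are all assumptions for illustration. Note that such a check can only flag inaccurate roots; it says nothing about roots the solver missed entirely.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper, not part of POV-Ray.
// c holds the quartic coefficients, highest order first (an assumption):
//   p(t) = c[0]*t^4 + c[1]*t^3 + c[2]*t^2 + c[3]*t + c[4]
// Returns how many of the given roots leave a residual |p(t)| larger than
// relTol times the sum of the term magnitudes at t.
std::size_t countSuspectRoots(const std::array<double, 5>& c,
                              const std::vector<double>& roots,
                              double relTol = 1e-9)
{
    std::size_t suspect = 0;
    for (double t : roots)
    {
        double value = 0.0;  // p(t) by Horner's rule
        double scale = 0.0;  // same recurrence on absolute values
        for (double coeff : c)
        {
            value = value * t + coeff;
            scale = scale * std::fabs(t) + std::fabs(coeff);
        }
        if (std::fabs(value) > relTol * scale)
            ++suspect;
    }
    return suspect;
}
```

A count above zero, accumulated over a render with sturm off, could be what triggers an after-render message. Whether this is cheap and reliable enough in practice would need measuring.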

Bill P.

Attachment: 'diffcoeffhidden.png' (160 KB)
