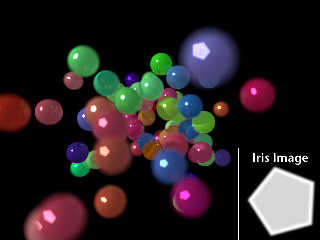

A simple image to show focal blur from my stochastic render rig. The image is composed of 10,000 renders, each taking a little under a second. The total wall time was 55 minutes across two CPUs (2.4GHz Core Duos).

All I'm doing is moving the camera to a random offset each render. The offset is chosen from the iris image I've superimposed in the bottom right. This one is a pentagram, but any bitmap can be chosen.
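A minimal sketch of drawing a camera offset from an iris bitmap, assuming the bitmap has already been loaded as a grid of 0/1 values (1 = open aperture) and that `aperture_radius` is a hypothetical scale in scene units. It picks a uniformly random open pixel, jitters inside it, and maps the result to the range [-r, r], so the offsets follow the iris shape. Typically the offset moves the camera within its own image plane while the look_at point stays on the plane of focus, so only objects away from that plane blur. This is an illustration, not the code behind the image above.

```python
import random


def sample_iris_offset(iris, aperture_radius, rng):
    """Draw an (x, y) camera offset whose distribution follows the iris bitmap.

    iris: list of equal-length rows; truthy cells are open aperture
          (a hypothetical in-memory format for the loaded bitmap).
    aperture_radius: half the aperture width in scene units (hypothetical).
    """
    open_cells = [(row, col)
                  for row, line in enumerate(iris)
                  for col, value in enumerate(line) if value]
    if not open_cells:
        raise ValueError("iris bitmap has no open pixels")
    height, width = len(iris), len(iris[0])
    row, col = rng.choice(open_cells)   # every open pixel is equally likely
    # Jitter within the chosen pixel so offsets are not snapped to the grid.
    u = (col + rng.random()) / width    # 0..1, left to right
    v = (row + rng.random()) / height   # 0..1, top to bottom
    # Map to [-r, r], flipping v so that "up" in the bitmap is +y.
    return ((2.0 * u - 1.0) * aperture_radius,
            (1.0 - 2.0 * v) * aperture_radius)


# Usage: a plus-shaped 3x3 iris; offsets never land in its closed corners.
plus_iris = [
    [0, 1, 0],
    [1, 1, 1],
    [0, 1, 0],
]
rng = random.Random(1)
offsets = [sample_iris_offset(plus_iris, 0.5, rng) for _ in range(1000)]
```

Rebuilding `open_cells` on every call keeps the sketch short; for thousands of passes it could be computed once per iris.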

The idea of the stochastic render rig is that on each render pass, a number of elements are randomly changed (camera position for focal blur and anti-aliasing, lights sampled from a high-density light dome, micro-facets for blurred reflection, jitter for area lights, etc.), so that the final composition of all the effects is faster than a single render with all the elements turned on. It also avoids the limitations of things like multi-texturing for blurry reflections (e.g. the limit on the number of samples, and the lack of reflection control).
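In standard Monte Carlo terms (the notation is an illustration, not from the post), pass $i$ renders the scene with randomly drawn settings $\theta_i$ (camera offset, chosen lights, jitter, and so on), and the composite is the per-pixel mean $I_N$ of the $N$ passes. Its expected value is the image with every effect fully integrated, and, assuming the passes are independent, its noise shrinks as $1/\sqrt{N}$, where $\sigma(x)$ is the standard deviation of a single pass's value at pixel $x$:

$$
I_N(x) = \frac{1}{N}\sum_{i=1}^{N} R(x;\theta_i), \qquad \theta_i \sim p(\theta)
$$

$$
\mathbb{E}\big[I_N(x)\big] = \int R(x;\theta)\,p(\theta)\,d\theta, \qquad
\sqrt{\operatorname{Var}\big[I_N(x)\big]} = \frac{\sigma(x)}{\sqrt{N}}
$$

So quadrupling the number of passes halves the grain.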

The picture shows that my code still has some issues: out-of-focus elements in certain areas (towards the edge of the picture near the axes) have some odd distortions. I'm going to have to track down the problem on those...

Cheers,

Edouard.

Attachments: 'focal-blur-10000-passes.jpg' (39 KB)

"Edouard Poor" <pov### [at] edouard info> wrote:

> All I'm doing is moving the camera to a random offset each render. The offset is

> chosen from the iris image I've superimposed in the bottom right. This one is a

> pentagram, but any bitmap can be chosen.

Interesting technique. My approach in the past was a physical aperture + focal blur, or an ugly patch to change the sampling. I was surprised how long it took even for simple spheres, as you have here.

> so that the final composition of all the effects is faster than a single render

> with all the elements turned on

With a single render you can use adaptive sampling much like focal blur, but then you lose out on some of the effects you've mentioned. Is it really faster? Maybe it's the *combination* of effects that slows it down in a single render. Interesting. If nothing else, this method is surely easy enough to parallelize!

> The picture shows that my code still has some issues - out of focus elements in

> certain areas (towards the edge of the picture near the axes) have some odd

> distortions. I'm going to have to track down the problem on those...

If you mean the lower left, I think it looks alright. The distortion seems natural due to the camera angle, and if the specular reflection is distorted, then a convolution with the aperture image will show the same effect.

- Ricky


> > All I'm doing is moving the camera to a random offset each render. The offset is

> > chosen from the iris image I've superimposed in the bottom right. This one is a

> > pentagram, but any bitmap can be chosen.

>

> Interesting technique. My approach in the past was a physical aperture + focal

> blur, or an ugly patch to change the sampling. I was surprised how long it

> took even for simple spheres, as you have here.

>

> > so that the final composition of all the effects is faster than a single render

> > with all the elements turned on

>

> With a single render you can use adaptive sampling much like focal blur, but

> then you lose out on some of the effects you've mentioned. Is it really

> faster? Maybe it's the *combination* of effects that slows it down in a single

> render. Interesting. If nothing else, this method is sure easy enough to

> parallelize!

I started down this path by trying to make some blurred reflections...

The advice from the POV FAQ, and also from advanced users to people on these newsgroups wanting to try rendering that kind of material, was to use multi-texturing, i.e.:

```
texture {
  average
  texture_map {
    [1 texture { ... }]
    [1 texture { ... }]
    ...
  }
}
```

(see http://tag.povray.org/povQandT/languageQandT.html#blurredreflection for the FAQ)

That worked great for a single object, but I noticed that it became really slow when I had multiple reflective objects in the scene. And even slower again when I added area lights, and again when I added focal blur, and then anti-aliasing. And much worse again when I tried it with blurry glass that was both reflecting and refracting at the same time.

I think the problem was that I'd shoot a single ray at a blurry reflector, and it would shoot 256 reflection rays into the scene, a lot of which would then hit other blurry reflectors, and suddenly I would have generated ten thousand rays. Even if those reflections landed on non-blurry reflectors, they would all still have to do area_light calculations. Then factor in depth-of-field blur and anti-aliasing, and the same pixel would get hit another 50 times. End result: really slow, but nice-looking, renders. I had one render go for a week, and it only had three spheres and a plane.
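A rough back-of-envelope model of that blow-up, under simplifying assumptions that are mine rather than the post's: every blurry-reflector hit spawns `samples` reflection rays, a made-up fraction `p_blurry` of them hit another blurry reflector, recursion stops at `max_depth`, and each pixel is traced `camera_samples` times for focal blur and anti-aliasing. The 256 and 50 echo the figures above; everything else is hypothetical.

```python
def rays_per_pixel(samples, p_blurry, max_depth, camera_samples):
    """Expected rays per pixel in a toy model of nested blurry reflections.

    All parameters are hypothetical illustration values:
      samples: reflection rays spawned at each blurry-reflector hit
      p_blurry: fraction of those rays that hit another blurry reflector
      max_depth: maximum reflection recursion depth
      camera_samples: rays per pixel from focal blur plus anti-aliasing
    Area-light shadow rays are ignored, so both figures below are underestimates.
    """
    per_primary = 1.0   # the primary ray, assumed to hit a blurry reflector
    blurry_hits = 1.0   # rays at the current depth that hit a blurry reflector
    for _ in range(max_depth):
        spawned = blurry_hits * samples    # each blurry hit fans out
        per_primary += spawned
        blurry_hits = spawned * p_blurry   # only some land on another blurry surface
    return camera_samples * per_primary


# Multi-textured scene: 256 textures, 50 camera samples for DoF + AA.
multi = rays_per_pixel(samples=256, p_blurry=0.15, max_depth=2, camera_samples=50)
# Stochastic passes: one texture (one reflection ray) and one camera ray per pass.
stochastic = 1000 * rays_per_pixel(samples=1, p_blurry=0.15, max_depth=2, camera_samples=1)
print(f"multi-textured: {multi:,.0f} rays/pixel; 1000 passes: {stochastic:,.0f} rays/pixel")
```

With these made-up numbers a single primary ray already costs about 10,000 rays, and the multi-textured pixel ends up near 504,000 rays against about 2,150 for 1000 single-texture passes. The point is the multiplicative structure, not the exact figures.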

There was also the limit of 256 textures, which limited how much blur I could use before the result got too grainy. And 256 usually became 128, as I wanted to combine blurry reflection with, say, dents.

So I thought I'd try to cure the problem with an experiment: render the same scene 100 or 1000 times with just one texture, but change the texture between each render pass, then average all the frames together. That worked pretty well. I didn't get the multiplicative effect I'd been seeing with heavy reflective multi-texturing.
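Averaging the passes is just a per-pixel mean. A minimal sketch, assuming each pass has already been loaded into memory as rows of (r, g, b) floats in linear light; the loading step is left out and the function name is hypothetical. If the passes are saved as gamma-encoded 8-bit images, they would normally be converted back to linear values before averaging, otherwise the averaged blur comes out slightly too dark.

```python
def average_frames(frames):
    """Per-pixel mean of equally sized frames.

    Each frame is a list of rows, each row a list of (r, g, b) float tuples,
    assumed to be in linear light (a hypothetical in-memory format).
    """
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames)
    height, width = len(frames[0]), len(frames[0][0])
    averaged = []
    for y in range(height):
        row = []
        for x in range(width):
            # zip(*...) regroups this pixel's values by channel: (r...), (g...), (b...)
            channels = zip(*(frame[y][x] for frame in frames))
            row.append(tuple(sum(values) / n for values in channels))
        averaged.append(row)
    return averaged


# Usage: two 1x2 frames, e.g. the same pixels under two different random textures.
pass_a = [[(1.0, 0.0, 0.0), (0.0, 0.0, 0.0)]]
pass_b = [[(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]]
composite = average_frames([pass_a, pass_b])
```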

Then, THEN, I realised that if I was rendering the same scene many times, I could simply move the camera for depth of field (and choose the pattern for the iris), and jigger it very slightly for anti-aliasing. My area_lights could be just 2x2, as long as I turned on jitter so the pattern changed with every frame. And I could have a light dome of 1000 area lights to choose from and just use a random 3 every render. Everything seemed to have a good way of doing very little, but randomised for each render (except media...).
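The per-pass choices in that paragraph can all be drawn from one random generator seeded by the pass number, so any pass can be re-rendered identically and passes with different numbers get different samples. In POV-Ray itself this would normally live in the scene file, using `seed(frame_number)` and `rand()`. The Python sketch below is hypothetical throughout: the function and field names, the defaults, and the uniform disc aperture (a stand-in for the iris bitmap) are assumptions for illustration.

```python
import math
import random


def pass_parameters(pass_index, dome_size=1000, lights_per_pass=3,
                    aperture_radius=0.05, pixel_size=1.0 / 640):
    """Random settings for one render pass (names and defaults are hypothetical)."""
    rng = random.Random(pass_index)   # same pass number -> same settings every time
    # Depth of field: uniform point on a disc aperture (stand-in for an iris bitmap).
    r = aperture_radius * math.sqrt(rng.random())   # sqrt keeps the density uniform
    angle = 2.0 * math.pi * rng.random()
    camera_offset = (r * math.cos(angle), r * math.sin(angle))
    # Anti-aliasing: shift the view by up to half a pixel in x and y.
    aa_jitter = (rng.uniform(-0.5, 0.5) * pixel_size,
                 rng.uniform(-0.5, 0.5) * pixel_size)
    # Light dome: a few distinct lights out of the whole dome.
    lights = rng.sample(range(dome_size), lights_per_pass)
    # Scale the chosen lights so that, averaged over many passes,
    # the total illumination matches the full dome.
    light_scale = dome_size / lights_per_pass
    return {"camera_offset": camera_offset, "aa_jitter": aa_jitter,
            "lights": lights, "light_scale": light_scale}


first = pass_parameters(0)
again = pass_parameters(0)
other = pass_parameters(1)
```

The `light_scale` factor matters for the light dome: if each pass lights the scene with only 3 of 1000 lights at their normal brightness, the averaged image would be far too dark, while scaling the chosen lights by 1000/3 keeps the average unbiased.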

And I got each of the effects I added above "for free". I still did 1000 renders, but the final averaged image had all the effects showing up in it. And the render time wasn't that different.

And, of course, rendering separate scenes makes, as you say, for trivial parallelisation across multiple CPUs and machines. Also, if the scene doesn't have enough passes (effects are looking a little grainy), you can just fire off another 1000 renders, and the scene improves with each new frame you add to the ones you already have.
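Because the composite is a plain mean, batches rendered on different CPUs or machines, or a later batch of extra passes, can be merged without keeping every frame, as long as each partial result remembers how many passes went into it. A minimal sketch with hypothetical names, working on flat lists of per-pixel values to keep it short:

```python
def split_passes(total_passes, workers):
    """Divide pass numbers 0..total_passes-1 into contiguous, near-equal ranges."""
    base, extra = divmod(total_passes, workers)
    ranges, start = [], 0
    for w in range(workers):
        count = base + (1 if w < extra else 0)   # spread the remainder over the first workers
        ranges.append(range(start, start + count))
        start += count
    return ranges


def merge_means(mean_a, count_a, mean_b, count_b):
    """Combine two per-pixel means into the mean over count_a + count_b passes."""
    total = count_a + count_b
    merged = [(a * count_a + b * count_b) / total for a, b in zip(mean_a, mean_b)]
    return merged, total


# Two CPUs share the first 1000 passes.
batches = split_passes(1000, 2)
# Later, a second batch of 1000 passes is folded into the existing result.
merged, count = merge_means([0.2, 0.4], 1000, [0.5, 0.1], 1000)
```

Weighting by pass count is what makes the merge exact: combining a 3-pass mean of 1.0 with a 1-pass mean of 0.0 gives 0.75, the same as averaging all four passes directly.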

Each scene, because it has been made simpler (no anti-aliasing, no DoF, very few lights, very coarse area light grids, etc.), renders pretty fast. Also, POV-Ray seems to cache things that take a long time to load (big HDRI images, for example), so you only take the hit on the first render out of your batch of 1000.

Now, I'm hardly inventing anything clever here: the technique is really, really old, and is called stochastic ray tracing. I'm just doing it a frame at a time.

Phew, that was a rather long explanation for a really simple technique. The post at http://news.povray.org/povray.binaries.images/thread/%3Cweb.488b23dba9609166666f43cd0%40news.povray.org%3E/ shows some of the individual renders and the final, averaged one.

> > The picture shows that my code still has some issues - out of focus elements in

> > certain areas (towards the edge of the picture near the axes) have some odd

> > distortions. I'm going to have to track down the problem on those...

>

> If you mean the lower left, I think it looks alright. The distortion seems

> natural due to the camera angle, and if the specular reflection is distorted,

> then a convolution with the aperture image will show the same effect.

It's the ones on the center left that are concerning me. Hopefully it should be fixable.

> - Ricky

Cheers,

Edouard.
