From: William F Pokorny

Subject: On the long unfinished normal related warps in warp.cpp.

Date: 17 May 2021 09:00:10

Message: <60a268da$1@news.povray.org>

The warp.cpp source code has long had some unfinished normal related

warp and unwarp hooks. Those of us working on the code of late haven't

really understood them - as far as I know.

Patterns today work in the normal block with all sorts of transforms and

warps, so those hooks are aimed at...?

While working of late on normal block patterns it hit me we don't warp

the incoming normal, but rather the perturbation patterns/methods via

the unwarped / warped EPoint(1). We cannot today warp the incoming raw

normals with warp{}s.

So, I started to play with an idea for a new normal pattern which

allows/enables this. It's currently working with the difference between

the intersection location and the warp{} / turbulence affected EPoint.

In other words, there isn't an actual specific perturbation method /

pattern - it's whatever your warp{}s create.
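
For readers who want something concrete, here is a minimal C++ sketch of the idea as described above: take the difference between the intersection point and its warped position, and use that difference to bend the raw normal. It is not the povr or POV-Ray implementation. The `Vec3` type, the `WarpChain` alias, the `amount` scale factor, the sign of the difference, and the "add then renormalize" step are all assumptions made for illustration; the post does not say how the difference is combined with the normal.

```cpp
#include <cmath>
#include <functional>

// Minimal 3D vector for this sketch (hypothetical, not POV-Ray's vector type).
struct Vec3 {
    double x, y, z;
};

Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, const Vec3& a) { return {s * a.x, s * a.y, s * a.z}; }

double length(const Vec3& a) { return std::sqrt(a.x * a.x + a.y * a.y + a.z * a.z); }

// Hypothetical stand-in for a chain of warp{} / turbulence modifiers:
// it takes the intersection point and returns the warped evaluation point.
using WarpChain = std::function<Vec3(const Vec3&)>;

// Bend the raw normal by the displacement the warps applied to the point.
// Assumption: perturbed = normalize(rawNormal + amount * (warped - intersection)).
Vec3 perturbNormalByWarps(const Vec3& rawNormal, const Vec3& intersection,
                          const WarpChain& warps, double amount) {
    Vec3 warped = warps(intersection);
    Vec3 delta = warped - intersection;   // zero when the warps change nothing
    Vec3 perturbed = rawNormal + amount * delta;
    double len = length(perturbed);
    if (len == 0.0) {
        return rawNormal;                 // degenerate case: keep the raw normal
    }
    return (1.0 / len) * perturbed;
}

// Example warp for the sketch: wrap x into [0, 1), loosely in the spirit of
// a repeat along x with unit width.
Vec3 repeatX(const Vec3& p) {
    return {p.x - std::floor(p.x), p.y, p.z};
}
```

With an identity warp the difference is zero and the normal comes back unchanged, which matches the "no specific pattern, only what the warps create" description.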

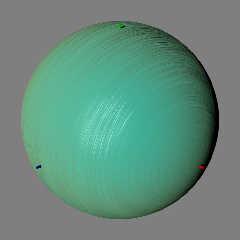

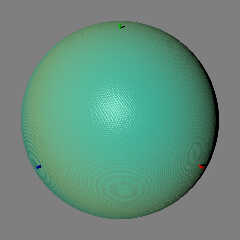

Attached are a couple of images. One uses three repeat warps and a touch

of turbulence. The second a spiral -> turbulence -> spiral combination.

Still playing / thinking, but looks promising.

Bill P.

Attachments: 'nrmlpertbywarps.jpg' (77 KB), 'nrmlpertbywarps1.jpg' (85 KB)

William F Pokorny wrote:

> The warp.cpp source code has long had some unfinished normal related

> warp and unwarp hooks. Those of us working on the code of late haven't

> really understood them - as far as I know.

So, when I was coding some C++ stuff, I had to puzzle out passing things by

pointer, reference, or value. Some of the code in the section we're talking

about I can read just fine - the stuff with the "->", not so much.

> While working of late on normal block patterns it hit me we don't warp

> the incoming normal, but rather the perturbation patterns/methods via

> the unwarped / warped EPoint(1). We cannot today warp the incoming raw

> normals with warp{}s.

I have read that 4 or 5 times, and I think I understand it and agree. :)

> So, I started to play with an idea for a new normal pattern which

> allows/enables this. It's currently working with the difference between

> the intersection location and the warp{} / turbulence affected EPoint.

> In other words, there isn't an actual specific perturbation method /

> pattern - it's whatever your warp{}s create.

So it would be much like doing normal {null warp repeat x turbulence 0.3}

where "null" is just sort of like an identity matrix - no change.

>

> Attached are a couple of images. One uses three repeat warps and a touch

> of turbulence. The second a spiral -> turbulence -> spiral combination.

That second one seems like it has real potential. Given that one of the methods

for arranging things "evenly" on the surface of a sphere is using a spiral /

loxodrome, then accomplishing a very close approximation to that using only a

simple normal statement would be a really powerful and welcome development.

This reminds me of my unsolved problem applying normals to bezier patches:

http://news.povray.org/web.5f6a91889d9b31d91f9dae300%40news.povray.org

regarding

https://news.povray.org/povray.binaries.images/message/%3Cweb.5f45adba5d69181b1f9dae300%40news.povray.org%3E/#%3Cweb.5f45adba5d69181b1f9dae300%40news.povray.org%3E

Do you have any inkling if the uv-mapping coordinates affect the application of

the normal pattern, and where in the code that factors in? Because I see that

as being another thing that's hiding in there that we might not be fully aware

of.

So I guess maybe what I'm asking is, do the uv-mapping vectors override the

global POV-space location vectors, in essence (locally/temporarily) redefining

the EPoint? And with regard to applying a global normal pattern that is

dependent upon an object's location in POV-space, is this desirable, expected,

and can it be overridden?

From: William F Pokorny

Subject: Re: On the long unfinished normal related warps in warp.cpp.

Date: 18 May 2021 06:53:08

Message: <60a39c94$1@news.povray.org>

On 5/17/21 2:26 PM, Bald Eagle wrote:

> William F Pokorny wrote:

...

>

>> So, I started to play with an idea for a new normal pattern which

>> allows/enables this. It's currently working with the difference between

>> the intersection location and the warp{} / turbulence affected EPoint.

>> In other words, there isn't an actual specific perturbation method /

>> pattern - it's whatever your warp{}s create.

>

> So it would be much like doing normal {null warp repeat x turbulence 0.3}

> where "null" is just sort of like an identity matrix - no change.

>

(1) - I forgot to add the planned footnote in my original post. :-) Was

just some ramble about options and complications.

Yes, that's a good way to think about it. Might even add 'null' as the

normal pattern name in the end(2) - it's currently the default option in

the normal bevy "collection."

(2) - I'm playing with two actual methods. "null type n" - don't know.

...

> That second one seems like it has real potential. Given that one of the methods

> for arranging things "evenly" on the surface of a sphere is using a spiral /

> loxodrome, then accomplishing a very close approximation to that using only a

> simple normal statement would be a really powerful and welcome development.

Yes, I've been thinking some about geodesic centered - normal direction

sets where the incoming normal would be matched to closest direction

match creating a frame for normal perturbations. As you suggest, others

possible.

I need at some point to go back and work more on the black hole

'spherically contained' warps. Perhaps adding cylindrical black holes,

etc, with an ability to orient to an arbitrary direction vector. With

warps too there is more possible.

> This reminds me of my unsolved problem applying normals to bezier patches:

>

> http://news.povray.org/web.5f6a91889d9b31d91f9dae300%40news.povray.org

> regarding

>

> https://news.povray.org/povray.binaries.images/message/%3Cweb.5f45adba5d69181b1f9dae300%40news.povray.org%3E/#%3Cweb.5f45adba5d69181b1f9dae300%40news.povray.org%3E

>

> Do you have any inkling if the uv-mapping coordinates affect the application of

> the normal pattern, and where in the code that factors in? Because I see that

> as being another thing that's hiding in there that we might not be fully aware

> of.

I've not spent any time looking at it, but there is a normal block uv

mapping in normal.cpp. I don't use uv mapping much, but when active it

should map the intersection location on the uv map-able shape/object to

the appropriate 2d uv map position in the normal pattern in the 0-1, xy

square in the z=0 plane. Said another way, when uv mapping normals you

are explicitly setting the perturbed normal for the 3d surface

intersection from a 2d map. This is the normal pattern method if uv

mapping is used for the normal block of the texture.
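
As a rough illustration of what "map the intersection location to a 2d uv position in the 0-1 square in the z=0 plane" means, the C++ sketch below uses a generic latitude/longitude parameterization of a unit sphere. This is an assumption chosen for the example. It is not taken from normal.cpp or from POV-Ray's sphere code, whose actual mapping may differ in orientation and seam placement. The names `UV`, `sphereToUV`, and `patternLookupPoint` are hypothetical.

```cpp
#include <algorithm>
#include <cmath>

// Minimal types for this sketch (hypothetical, not POV-Ray's own).
struct Vec3 {
    double x, y, z;
};

struct UV {
    double u, v;
};

// Map a point on the unit sphere centered at the origin to (u, v),
// each in [0, 1]. Assumption: u follows longitude around the y axis,
// v follows latitude from the -y pole (0) to the +y pole (1).
UV sphereToUV(const Vec3& p) {
    const double pi = std::acos(-1.0);
    // Clamp y so rounding error cannot push asin outside its domain.
    double y = std::max(-1.0, std::min(1.0, p.y));
    double u = 0.5 + std::atan2(p.z, p.x) / (2.0 * pi);
    double v = 0.5 + std::asin(y) / pi;
    return {u, v};
}

// The point at which a uv-mapped pattern would be evaluated:
// the (u, v) pair placed in the z = 0 plane instead of the 3D
// intersection point.
Vec3 patternLookupPoint(const Vec3& surfacePoint) {
    UV uv = sphereToUV(surfacePoint);
    return {uv.u, uv.v, 0.0};
}
```

The point of the sketch is only that the pattern sees `<u, v, 0>`, so where the sphere sits in scene space no longer influences the lookup.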

A point on your first link, given the questions asked there. There is

only one normal per material/texture for an object. That normal can

itself be an average, normal list or normal map of other normals, but

it's one normal as included in the texture. When you add a normal block

after an existing texture on an object as follows:

sphere { ... texture { T_wNormal } normal { granite } }

The normal in the sphere's texture is now 'normal { granite }' with

everything else the same; the normal originally in the texture

T_wNormal is gone for this sphere's texture.

> So I guess maybe what I'm asking is, do the uv-mapping vectors override the

> global POV-space location vectors, in essence (locally/temporarily) redefining

> the EPoint?

If I understand your question, yes, in that you are not using a warped

EPoint when uv mapping. The 'EPoint' is the local shape intersection

point.

> And with regard to applying a global normal pattern that is

> dependent upon an object's location in POV-space, is this desirable, expected,

> and can it be overridden?

>

Again not quite sure I follow. You can delay defining all shapes'

textures until you are at the global scene level. Say a set of 50

spheres in a union where the (not uv mapped) texture including the

normal is defined at the union definition or later. There the position

of the 50 spheres within the texture eventually applied will result in

differences sphere to sphere.

Bill P.

William F Pokorny wrote:

> If I understand your question, yes, in that you are not using a warped

> EPoint when uv mapping. The 'EPoint' is the local shape intersection

> point.

OK

> > And with regard to applying a global normal pattern that is

> > dependent upon an object's location in POV-space, is this desirable, expected,

> > and can it be overridden?

> >

>

> Again not quite sure I follow. You can delay defining all shapes'

> textures until you are at the global scene level. Say a set of 50

> spheres in a union where the (not uv mapped) texture including the

> normal is defined at the union definition or later. There the position

> of the 50 spheres within the texture eventually applied will result in

> differences sphere to sphere.

What I'm asking about is the separability of the texture and the normal.

Can I have a distinct uv-mapped texture for each object (which is bounded /

defined by the uv vectors), but have them all share a "regular" normal that

extends throughout all of POV-space and is calculated based on the POV-space

<x,y,z> location vector?

So: can I apply a POV-space normal to an object but then also apply the local

shape intersection point uv-mapped texture. Or some other way of accomplishing

the same end result.

From: William F Pokorny

Subject: Re: On the long unfinished normal related warps in warp.cpp.

Date: 18 May 2021 14:56:24

Message: <60a40dd8$1@news.povray.org>

On 5/18/21 1:34 PM, Bald Eagle wrote:

> What I'm asking about is the separability of the texture and the normal.

>

> Can I have a distinct uv-mapped texture for each object (which is bounded /

> defined by the uv vectors), but have them all share a "regular" normal that

> extends throughout all of POV-space and is calculated based on the POV-space

> <x,y,z> location vector?

>

> So: can I apply a POV-space normal to an object but then also apply the local

> shape intersection point uv-mapped texture. Or some other way of accomplishing

> the same end result.

OK. Thanks. I believe I better understand your question.

With the repeated disclaimer I'm not a regular uv map user, I think the

answer is no, but...

---

The reason is uv mapping works with primitives(1). It's a mechanism

which associates the ray intersection on the surface of that primitive

with a 2d map position. This mapping comes from the primitive

shape/surface code and is the same for pigments and normals.

(1) You can use the uv_mapping keyword for csg entities, but I believe

it simply does a default (planar?) map. Somewhere in recent years there

are posts related to someone trying this.

Because uv mapping works at the primitive level you must define a

texture's pigment and normal mapping at the base shape/surface - and

once (a) texture(s) are defined for the surfaces of something - they are

set - even if the object is included in later CSG. You cannot change

them or add to them.

--- but

What you should be able to do - since you can define pigments and

normals independently ahead of inclusion in a texture for each

shape/surface - is use different maps for the pigment and normal

uv_mapping for 'each' low level uv map-able thingy. Pigments, normals or

both could use maps created for the object as they need to be when

placed in global space. No automation for this as far as I know - you'd

have to work out how to create the thingy by thingy map(s) cut from

global space on your own.

All as far as I know - maybe I've got something wrong, or someone has done

something clever(2).

Bill P.

(2) - A very rough idea would be to do something with _map values based

on passing global space values to a function, but I don't see all of how

it would work at the moment - and the _map would either be huge to

encompass all the global positions or limited. Another idea might be to

make use of povr like normal chaining within average maps, but I have no

idea, if, or how uv mapped normals might work/act within a normal {

average ... } alongside other types of normals. Never tried to mix uv

mapped types with others for pigments or normals.

William F Pokorny wrote:

> --- but

> What you should be able to do - since you can define pigments and

> normals independently ahead of inclusion in a texture for each

> shape/surface - is use different maps for the pigment and normal

> uv_mapping for 'each' low level uv map-able thingy. Pigments, normals or

> both could use maps created for the object as they need to be when

> placed in global space. No automation for this as far as I know - you'd

> have to work out how to create the thingy by thingy map(s) cut from

> global space on your own.

So, I could define a function that translated an <x, y, z> coordinate (that is

used internally to calculate a uv coordinate) to a projected <x, y, z>

coordinate that is outside the range of the 0-1 uv vectors of the local space...

<insert headache here>

Then do normal {function {f_translate (x, y, z)}}, which should remap the

local <x, y, z> mapping of the uv vectors to a projected set of uv vectors that

in turn correspond to the actual spatial positions of the bezier patch...

Egads.

I'm a _little_ surprised that no one has ever brought this up before, but then

again, I'm always going off into the weeds when doing what seem to be perfectly

ordinary things...

I'm wondering if you were to implement this in povr source, if it would be as

simple as defining a "no_uv" normal type that simply uses the native EPoint

instead of the uv_vector coordinate.

I will have to think a bit more on this and see when I can puzzle out something

functional [sic].

Thanks for the analysis and ideas for a solution :)
