
So, I am using this macro to generate chromadepth textures:

// this script assumes the depth effect is *linear* from near to far,

// which may not be the case

// red, white, blue

#macro MakeChromadepthTextureCameraRWB(CameraLocation, CameraLookAt)

#local iMax = 240;

pigment

{

spherical

color_map

{

[0.0 color srgb <0,0,1>]

[0.5 color srgb <1,1,1>]

[1.0 color srgb <1,0,0>]

}

}

// finish

// {

// ambient 1

// diffuse 0

// }

scale vlength(CameraLocation - CameraLookAt) * 2

translate CameraLocation

#end

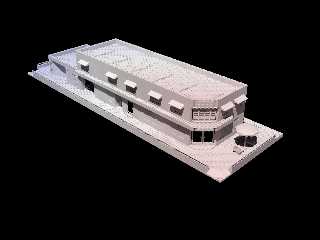

The texture is centered on the camera location, and stretches to a point

opposite the model. The problem is that the model ends up mostly white,

with only touches of blue and red, as you can see in the attached image.

I think the texture should hug the model a bit closer, so that more red

and blue are visible. The problem is that the texture is centered on the

camera instead of the model, or I could just scale the texture a bit

smaller.

What method can I use to fix the texture? I know what the model's center

point and bounding box are.

Thanks.

Mike

Attachments: 'wrapper_showcase.jpg' (225 KB)

On 17/02/2018 12:29, Mike Horvath wrote:

> or I could just scale the texture a bit smaller.

I would experiment with that to start with. The results might give you

an idea.

It could be that you just need to "calibrate".

--

Regards

Stephen


Mike Horvath <mik### [at] gmail com> wrote:

>

> scale vlength(CameraLocation - CameraLookAt) * 2

> translate CameraLocation

Just from sketching some graphical ideas on paper, I think there are probably

two problems involved-- although I hope that I'm understanding the intended use

of your macro!

The spherical pattern by default occupies a sphere space of radius 1.0. The

macro is finding the distance from the *center* of that sphere to the center of

your model (I'm guessing that's what you have in mind, anyway). Then the center

of the scaled-up pattern is translated to the camera location. So far, the idea

sounds OK. But the spherical pattern's outer 'surface' is already 1 unit away

from the camera location to start with-- so I'm wondering how far that 'new'

outer surface extends to. And it looks like the X2 multiplier is making it much

larger than it needs to be (?)

If that's the case, then the color_map entries are also getting 'stretched out'.

Maybe they need pre-compressing, so to speak.

Instead of this...

[0.0 color srgb <0,0,1>]

[0.5 color srgb <1,1,1>]

[1.0 color srgb <1,0,0>]

... maybe *something* like this...

[0.4 color srgb <0,0,1>]

[0.5 color srgb <1,1,1>]

[0.6 color srgb <1,0,0>]

Or maybe the vlength formula should be

scale vlength(CameraLocation - CameraLookAt) - 1

???
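To see why the first macro leaves the model mostly white, here is a small Python sketch (not POV-Ray SDL) of the spherical pattern's value along the line of sight. It assumes the documented behavior of `spherical` (1.0 at the center, falling linearly to 0.0 at radius 1, and 0.0 beyond), a made-up camera distance of 100 units, and a made-up model depth of 10 units; `spherical_value` is a hypothetical helper, not a POV-Ray function. It needs Python 3.8+ for `math.dist`.

```python
import math


def spherical_value(distance, radius):
    """Assumed POV-Ray spherical pattern value: 1.0 at the center, falling
    linearly to 0.0 at `radius`, and 0.0 beyond it."""
    return max(0.0, 1.0 - distance / radius)


# Hypothetical scene: the camera is 100 units from the model center (look_at).
camera = (0.0, 0.0, -100.0)
look_at = (0.0, 0.0, 0.0)
d = math.dist(camera, look_at)
radius = 2 * d  # mirrors "scale vlength(CameraLocation - CameraLookAt) * 2"

# Near face, center and far face of a hypothetical model about 10 units deep.
for depth in (d - 5, d, d + 5):
    print(f"distance {depth:5.1f} -> pattern value {spherical_value(depth, radius):.3f}")
```

With these hypothetical numbers the model only spans pattern values of about 0.475 to 0.525, right around the white entry at 0.5, which matches the mostly white render. A smaller scale spreads the model over a wider range of values (roughly model depth divided by the radius), but it also moves that range away from 0.5, so the color_map indices may need to move as well.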


Mike Horvath <mik### [at] gmail com> wrote:

> The texture is centered on the camera location, and stretches to a point

> opposite the model. The problem is that the model ends up mostly white,

> with only touches of blue and red, as you can see in the attached image.

I think what you want to do is center the texture on the model, and scale it

according to the bounding box. That way you get the full range of colors on

the model.

If you're actually trying to somehow encode the distance from the camera into

the texture, then clipka posted a way to do that recently in response to

someone's query.

In any event, include a plane in the scene, and texture that with your color_map

so you can see the whole pattern and how it behaves in relation to where your

object is.
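A minimal Python sketch of the "center on the model, scale by the bounding box" suggestion, assuming the bounding box is known as its min and max corners (what `min_extent()` and `max_extent()` give in SDL) and that the unscaled spherical pattern has radius 1. The helper names and the box coordinates are hypothetical; `math.dist` needs Python 3.8+.

```python
import itertools
import math


def centered_sphere_params(bb_min, bb_max):
    """Center and radius that make a radius-1 spherical pattern just enclose the box."""
    center = tuple((lo + hi) / 2 for lo, hi in zip(bb_min, bb_max))
    radius = math.dist(bb_min, bb_max) / 2  # half the box diagonal
    return center, radius


def spherical_value(point, center, radius):
    """Assumed spherical pattern value: 1.0 at `center`, 0.0 at `radius` and beyond."""
    return max(0.0, 1.0 - math.dist(point, center) / radius)


# Hypothetical bounding box (min corner, max corner) of a model.
bb_min, bb_max = (0.0, 0.0, 0.0), (2.0, 4.0, 4.0)
center, radius = centered_sphere_params(bb_min, bb_max)
corners = list(itertools.product(*zip(bb_min, bb_max)))  # all 8 box corners

print("center:", center, "radius:", radius)
print("value at center:", spherical_value(center, center, radius))
print("values at corners:", [round(spherical_value(c, center, radius), 9) for c in corners])
```

In SDL the two results would become `scale` and `translate` on the texture. Note that this colors the model by distance from its own center rather than from the camera; what it does guarantee is that the model sees the full 0.0 to 1.0 range of the color_map.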


I ended up using this:

// This script assumes the depth effect is *linear* from near to far,

// which may not be the case.

// red, white, blue

#macro MakeChromadepthTextureCameraRWB(CameraLocation, CameraLookAt,

FudgePercent)

#local FudgeMin = FudgePercent/100/2;

#local FudgeMax = 1 - FudgePercent/100/2;

pigment

{

spherical

color_map

{

[0.0 color srgb <0,0,1>]

[FudgeMin color srgb <0,0,1>]

[0.5 color srgb <1,1,1>]

[FudgeMax color srgb <1,0,0>]

[1.0 color srgb <1,0,0>]

}

}

// finish

// {

// ambient 1

// diffuse 0

// }

scale vlength(CameraLocation - CameraLookAt) * 2

translate CameraLocation

#end

I can shrink and grow the texture using the FudgePercent parameter. I

think this is as close as I'll get.

Mike
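The FudgePercent color_map can be checked outside POV-Ray with a short Python sketch. It mirrors the five color_map entries of the macro and interpolates linearly between neighboring entries; that interpolation is a simplification (it ignores how POV-Ray handles the srgb colors), and the function names are hypothetical.

```python
def fudge_color_map(fudge_percent):
    """Return the (index, rgb) entries the macro builds for a given FudgePercent."""
    fudge_min = fudge_percent / 100 / 2
    fudge_max = 1 - fudge_percent / 100 / 2
    blue, white, red = (0, 0, 1), (1, 1, 1), (1, 0, 0)
    return [(0.0, blue), (fudge_min, blue), (0.5, white), (fudge_max, red), (1.0, red)]


def lookup(entries, v):
    """Linearly interpolate the color at pattern value v; values outside the
    first/last index take the first/last entry's color."""
    if v <= entries[0][0]:
        return entries[0][1]
    for (i0, c0), (i1, c1) in zip(entries, entries[1:]):
        if i0 <= v <= i1:
            t = 0.0 if i1 == i0 else (v - i0) / (i1 - i0)
            return tuple(a + t * (b - a) for a, b in zip(c0, c1))
    return entries[-1][1]


entries = fudge_color_map(80)  # FudgeMin = 0.4, FudgeMax = 0.6
for v in (0.40, 0.45, 0.50, 0.55, 0.60):
    print(v, lookup(entries, v))
```

With FudgePercent = 80 the entries become 0.0, 0.4, 0.5, 0.6 and 1.0, so the whole blue-white-red transition happens between pattern values 0.4 and 0.6, and everything outside that band is solid blue or solid red. That should behave like the "pre-compressed" [0.4 / 0.5 / 0.6] map suggested earlier in the thread.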


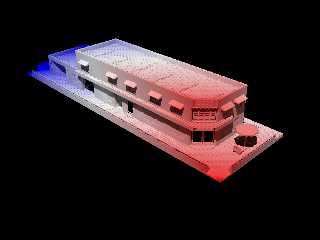

Forgot to post the images.

Mike

Attachments: 'wrapper_showcase_chromadepth_01.png' (582 KB), 'wrapper_showcase_chromadepth_02.png' (511 KB)

Mike Horvath <mik### [at] gmail com> wrote:

> I ended up using this:

>

>

> // This script assumes the depth effect is *linear* from near to far,

> which may not be the case.

>

The idea looks interesting! And your model proves that it works.

In the interim time, I had a brainstorm. I had to work it out graphically on

paper, discarding one idea after another, but finally came up with a solution.

It's more complex than yours, but it doesn't have any fudge factor that I know

of. I have to describe it in words; if you can put it into an equation, kudos

;-) (I'm really tired and the ol' brain is fizzling out...)

1) Choose a camera position; it can be anywhere. (So can the object.)

2) Get the bounding-box coordinates of the object-- the farthest and nearest

corner locations, whatever they happen to be.

3) find vlength from camera to nearest bounding-box corner. Call it L-1

4) find vlength from camera to farthest B-B corner. Call it L-2

5) L-1 / L-2 = S. This will be somewhere between 0.0 and 1.0-- it depends on

how far your object is from the camera. The farther apart, the larger this will

be.

6) Then, (1.0 - S) = T

7) Using T, change your spherical color_map's original 0.0-to-1.0 index values

to a more 'squashed' version, closer to the outer spherical 'surface'. (And don't

scale it up yet.)

[0.0 BLUE] // outer radius of spherical pattern

[.5*T WHITE]

[T RED ] // what used to be the center point of the pattern; now farther

// out toward edge

8) Now scale-up the spherical pattern by L-2. That *should* put the outer

edge of the BLUE at or near the object's farthest bounding-box corner; and RED

should begin at or near the closer corner.


CAVEAT: No guarantees are implied... :-P
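Steps 5 to 8 can be put into equations, as a sketch that assumes the spherical pattern behaves as documented (1.0 at its center, falling linearly to 0.0 at radius 1, and 0.0 beyond) and that the texture is scaled uniformly by L-2 and centered on the camera. Writing $L_1$ and $L_2$ for L-1 and L-2, a point at distance $d$ from the camera gets the pattern value $v(d)$:

$$
\begin{aligned}
v(d) &= \max\!\left(0,\; 1 - \frac{d}{L_2}\right), \qquad S = \frac{L_1}{L_2}, \qquad T = 1 - S,\\
v(L_2) &= 0 && \text{(BLUE, farthest corner)},\\
v\!\left(\frac{L_1 + L_2}{2}\right) &= 1 - \frac{L_1 + L_2}{2L_2} = \frac{1 - S}{2} = \frac{T}{2} && \text{(WHITE, halfway)},\\
v(L_1) &= 1 - \frac{L_1}{L_2} = 1 - S = T && \text{(RED, nearest corner)}.
\end{aligned}
$$

Inverting, a color_map index $i$ lands at distance $d = L_2\,(1 - i)$ from the camera. So, on paper, the recipe should not need a fudge factor: if the rendered colors still stop short of the object's edges, the first suspects are the values used for $L_1$ and $L_2$ themselves.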


"Kenneth" <kdw### [at] gmail com> wrote:

> Mike Horvath <mik### [at] gmail com> wrote:


> > I ended up using this:

> >

> >

> > // This script assumes the depth effect is *linear* from near to far,

> > which may not be the case.

> >

>

> The idea looks interesting! And your model proves that it works.

>

> In the interim time, I had a brainstorm. I had to work it out graphically on

> paper, discarding one idea after another, but finally came up with a solution.

> It's more complex than yours, but it doesn't have any fudge factor that I know

> of. I have to describe it in words; if you can put it into an equation, kudos

> ;-) (I'm really tired and the ol' brain is fizzling out...)

>

> 1) Choose a camera position; it can be anywhere. (So can the object.)

>

> 2) Get the bounding-box coordinates of the object-- the farthest and nearest

> corner locations, whatever they happen to be.

>

> 3) find vlength from camera to nearest bounding-box corner. Call it L-1

>

> 4) find vlength from camera to farthest B-B corner. Call it L-2

>

> 5) L-1 / L-2 = S. This will be somewhere between 0.0 and 1.0-- it depends on

> how far your object is from the camera. The farther apart, the larger this will

> be.

>

> 6) Then, (1.0 - S) = T

>

> 7) Using T, change your spherical color_map's original 0.0-to-1.0 index values

> to a more 'squashed' version, closer to the outer spherical 'surface'. (And don't

> scale it up yet.)

>

> [0.0 BLUE] // outer radius of spherical pattern

> [.5*T WHITE]

> [T RED ] // what used to be the center point of the pattern; now farther

> // out toward edge

>

> 8) Now scale-up the spherical pattern by L-2. That *should* put the outer

> edge of the BLUE at or near the object's farthest bounding-box corner; and RED

> should begin at or near the closer corner.

>

> CAVEAT: No guarantees are implied... :-P

Maybe this is asking a lot, but I believe such features should be hardcoded into

POV, with a pass system where POV could render a z pass, a motion vector pass,

ambient occlusion (proximity pattern), etc., so they would be rendered in the same

process as the main image, stored in the image's secondary layers when using EXR format, and

be usable for compositing.


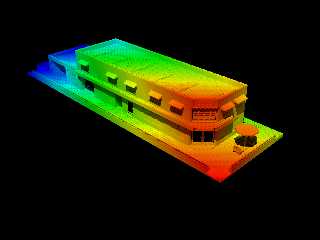

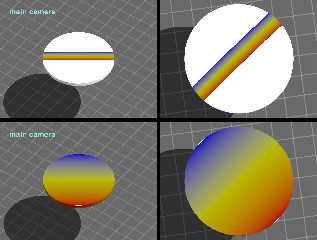

So, I finally coded-up my idea, and it does indeed put the 'center' color of the

color_map across the center of the object. That part works, at least. But the

color pattern's 'limits' on the object have a problem: the color spread doesn't

extend all the way across. So far, after several days of trying to figure out

why, I still don't have a clue. I tried changing *every* parameter in the code,

but no luck-- although there's probably a hidden 'clue' in those trials.

So, I had to resort to some fudge-factors as well :-/ I eventually found one

set of those that actually works consistently... so the overall scheme is

workable after all. Only two 'fudging' values need changing, by eye; they depend

on relative distance between object and camera, and on the scale of the object.

I don't know if those values change linearly, exponentially, or what.

Essentially, they increase the distance between the object's found bounding-box

coordinates, *as if* the object is larger. A clue?

The first image is the result of the initial code idea; the second is with the

fix. The fudge factors indicate that the 'squashed' color_map values depend on

some kind of so-far-unknown variable. There HAS to be an answer; I just haven't

found it yet :-(

The camera and object positions are arbitrary, as is object scale, and can be

anything... within reason; there can be precision issues behind-the-scenes. See

the #debug messages.

//------------------------

#declare CAM_LOCATION = <32, 56, -35>*3; // easily change location using the

// (positive) multiplier (actually changes it between location <0,0,0> and

// farther out)

#declare OBJECT_CENTER_LOCATION = <3,6,22>*1.0; // ditto

#declare OBJECT_SCALE = 2.7;

#declare CAM_ANGLE = 3; // 0.9 Just to easily change the camera angle when

// you change object scale or camera/object distance.

// main camera

camera {

perspective

location CAM_LOCATION

look_at OBJECT_CENTER_LOCATION

right x*image_width/image_height // aspect

angle CAM_ANGLE

}

/*

// OR, separate camera, 'un-coupled' from code, looking from another location

// way above

camera {

perspective

location <0,500,0>

look_at OBJECT_CENTER_LOCATION

right x*image_width/image_height // aspect

angle CAM_ANGLE

}

*/

light_source {

0*x

color rgb .7

translate <40, 80, 30>

}

background{rgb .1}

// stripes are 1-unit apart

plane{y,0

pigment{

average

pigment_map{

[ 1 gradient x

color_map{

[.05 rgb .8]

[.05 rgb .3]

}

]

[ 1 gradient z

color_map{

[.05 rgb .8]

[.05 rgb .3]

}

]

}

scale 1

}

}

#declare OBJECT_1 = // does not need to be centered on origin when made

// box{0,<1,.1,1> scale OBJECT_SCALE

// rotate 40

// translate OBJECT_CENTER_LOCATION

// }

// OR...

cylinder{-.05*y, .05*y, 1

scale OBJECT_SCALE

translate OBJECT_CENTER_LOCATION // yes, PRE-translated -- it's part

// of the idea

}

#declare NEAR_CORNER = min_extent(OBJECT_1);

#declare FAR_CORNER = max_extent(OBJECT_1);

#declare L_1 = vlength(NEAR_CORNER - CAM_LOCATION) - 0; // or - 1.68 FUDGE

// FACTOR

#declare L_2 = vlength(FAR_CORNER - CAM_LOCATION) + 0; // or + 1.44 FUDGE

// FACTOR

#declare S = L_1/L_2;

#declare T = 1.0 - S;

#declare SPHERE_PIG =

pigment{

spherical

color_map{

[0 rgb 3]

[0 rgb <0,0,1>] // BLUE -- outside edge of pattern

[0.5*T rgb <1,1,0>] // YELLOW

[T rgb <1,0,0>] // RED

[T rgb 3]

}

/*

// to experiment with...

color_map{

[0 rgb 3]

[0 rgb <0,0,1>] // BLUE -- outside edge of pattern

[.48*T rgb <0,0,1>]

[.48*T rgb <1,1,0>]

[.52*T rgb <1,1,0>]

[.52*T rgb <1,0,0>]

[T rgb <1,0,0>]

[T rgb 3]

}

*/

scale L_2

translate CAM_LOCATION

}

object{OBJECT_1 // already translated

pigment{SPHERE_PIG} // ditto

}

#debug concat("\n", "object SCALE = ",str(OBJECT_SCALE,0,9),"\n")

#debug concat("\n", "distance to bounding box NEAR corner (L_1) = ",str(vlength(NEAR_CORNER - CAM_LOCATION) - 0,0,9),"\n")

#debug concat("\n", "distance to bounding box FAR corner (L_2) = ",str(vlength(FAR_CORNER - CAM_LOCATION) - 0,0,9),"\n")

#debug concat("\n", "L_1/L_2 = ",str(L_1/L_2,0,9),

" (should never go over 1.0 !)","\n")

#debug concat("\n", "T = ",str(T,0,9),"\n")
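One possible explanation for the fudge factors, offered as a guess: `min_extent()` and `max_extent()` return the bounding-box corners with the smallest and largest x, y and z, which are not necessarily the corners nearest to and farthest from the camera (step 2 of the recipe asks for the latter, "whatever they happen to be"). For a round object such as this disc, the nearest and farthest surface points also lie inside the box. The Python sketch below compares three distance pairs for the scene's numbers; it assumes the cylinder's bounding box is tight, and the function names are hypothetical. It needs Python 3.8+ for `math.dist`.

```python
import itertools
import math

CAM = (32 * 3, 56 * 3, -35 * 3)  # CAM_LOCATION
CENTER = (3.0, 6.0, 22.0)        # OBJECT_CENTER_LOCATION
R = 2.7                          # OBJECT_SCALE = disc radius
H = 0.05 * R                     # half thickness of the scaled cylinder

BB_MIN = (CENTER[0] - R, CENTER[1] - H, CENTER[2] - R)  # assumed min_extent(OBJECT_1)
BB_MAX = (CENTER[0] + R, CENTER[1] + H, CENTER[2] + R)  # assumed max_extent(OBJECT_1)


def extent_distances():
    """What the scene computes: distances to the min and max extent corners."""
    return math.dist(CAM, BB_MIN), math.dist(CAM, BB_MAX)


def corner_distances():
    """Nearest and farthest of all 8 bounding-box corners."""
    d = [math.dist(CAM, c) for c in itertools.product(*zip(BB_MIN, BB_MAX))]
    return min(d), max(d)


def disc_rim_distances():
    """Nearest/farthest points on the disc itself (camera above the disc):
    the top-face rim point toward the camera and the bottom-face rim point
    away from it."""
    horiz = math.hypot(CAM[0] - CENTER[0], CAM[2] - CENTER[2])
    near = math.hypot(horiz - R, CAM[1] - (CENTER[1] + H))
    far = math.hypot(horiz + R, CAM[1] - (CENTER[1] - H))
    return near, far


for name, fn in [("min/max_extent", extent_distances),
                 ("all 8 corners", corner_distances),
                 ("disc rim", disc_rim_distances)]:
    l1, l2 = fn()
    print(f"{name:15s} L_1={l1:9.4f}  L_2={l2:9.4f}  T={1 - l1 / l2:.6f}")
```

Working through those numbers, the disc-rim distances come out roughly 1.69 below the scene's L_1 and 1.64 above its L_2, close to the - 1.68 and + 1.65 adjustments found by eye in this thread. The rim formula only applies to a flat, horizontal disc seen from above; for other objects the eight-corner version is a reasonable first approximation rather than an exact bound.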

Attachments: 'chromadepth_example.jpg' (225 KB)

On 2/19/2018 11:13 AM, Kenneth wrote:

> So, I finally coded-up my idea, and it does indeed put the 'center' color of the

> color_map across the center of the object. That part works, at least. But the

> color pattern's 'limits' on the object have a problem: the color spread doesn't

> extend all the way across. So far, after several days of trying to figure out

> why, I still don't have a clue. I tried changing *every* parameter in the code,

> but no luck-- although there's probably a hidden 'clue' in those trials.

>

> So, I had to resort to some fudge-factors as well :-/ I eventually found one

> set of those that actually works consistently... so the overall scheme is

> workable after all. Only two 'fudging' values need changing, by eye; they depend

> on relative distance between object and camera, and on the scale of the object.

> I don't know if those values change linearly, exponentially, or what.

> Essentially, they increase the distance between the object's found bounding-box

> coordinates, *as if* the object is larger. A clue?

>

> The first image is the result of the initial code idea; the second is with the

> fix. The fudge factors indicate that the 'squashed' color_map values depend on

> some kind of so-far-unknown variable. There HAS to be an answer; I just haven't

> found it yet :-(

>

> The camera and object positions are arbitrary, as is object scale, and can be

> anything... within reason; there can be precision issues behind-the-scenes. See

> the #debug messages.

Awesome! Let us know when you figure out why the fudge factor is needed.

If you flip over the disc you'll see that the second fudge factor is too

small. You need to set it to + 1.65 instead of + 1.44.

Mike
