|

|

On 03/30/2018 08:21 AM, William F Pokorny wrote:

> ....

Sturm / polysove() investigation. Other solvers. Root polishing.

I've continue to look at the root solvers over the past month. At a good

place to write another post about findings and changes.

A couple preliminaries before the main topic.

First, while most results are better, the polynomialsolverAccuracy

branch still lacks planned updates. For example, scenes with lathes and

sphere_sweeps using orthographic cameras currently render with more

artifacts, not less.

Second, this set of commits updates the solve_quadratic function in a

way both more accurate and faster.

---

The main topic is root finding tolerance and scaling.

All the solvers find roots / surface intersections to some tolerance.

They do this in a coordinate space which has been normalized with

respect all scene transforms. Additionally, objects like blobs

internally normalized to internal 'unit' coordinate spaces.

Suppose in the solver coordinate space a ray/surface equation has a root

essentially at the ray origin - at distance 0.0 - as happens with self

shadowing rays. All solvers due their tolerances have the potential to

allow such near-zero roots to drift into the >0.0 value space as

ray-surface intersections. This numerical reality is why objects filter

roots to a minimum returned intersection depth larger than zero(1).

Further, this minimum returned intersection depth must account for the

translation of every root and its tolerance back into the original

coordinate space. A wrongly positive 1e-8 root in solver space is a much

more positive 1e-4 root after a scale up by 10000. The solvers each have

different tolerances. There are many shape custom 'solvers' in use

beyond those I've been working upon and differing internal

normalizations. Why we've got many differing returned depth values. Why

blob's have long used a minimum returned intersection depth of 1e-2

while a sphere, for example, uses 1e-6.

It's the case that even blob's large 1e-2 filtering value is inadequate

given solve_quartic()'s tolerance is relatively large. Continuing to use

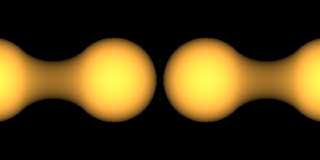

Gail Shaw's blob scene from 2005 to demonstrate, the attached image was

rendered with the current 3.8 master branch without sturm - so using

solve_quartic(). The original scene render is on the left. On the right

is the same scene scaled up by 10000. The vertical bars showing up on

the right happen due slightly ~=0.0 roots drifting positive in

solve_quartic(). The wrongly positive roots are then scaled up to a

value greater than the current 1e-2 small root/intersection depth

filter. Not being filtered, the roots corrupt results.

While zero crossing roots due tolerance are the most serious tolerance

multiplied by scale issue, roots well positive can drift quite a lot too

in the global space where the scale up is large.

The improvement adopted for the solve_quartic() and polysolve()/sturm

solvers - where 'scaled tolerance' issues have been seen - was to add a

Newton-Raphson root polishing step to each. With blobs this looks to

allow a returned depth on the order of 4e-8 over 1e-2 for a 1e-7 to 1e7

global working range at DBL = 'double'.

Aside for thought: Might we be able to determine when we are evaluating

a self-shadowing or same-shape-terminating ray-surface equation? If so,

it should be we can use the knowledge we have a root 'at 0.0' to always

deflate / reduce the order of these polynomials prior to finding roots.

Updates at:

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

Performance and specific commit details below.

Bill P.

(1) - The idea of going as small as >0.0 in each shape's implementation

fly is not presently possible. Trying instead a new much smaller

MIN_ISECT_DEPTH_RETURNED value - see commit comments.

Performance info:

------------ /usr/bin/time povray -j -wt1 -fn -p -d -c lemonSturm.pov

0) master 30.22user 0.04system 0:30.89elapsed

19) 4e16623 14.94user 0.01system 0:15.56elapsed -50.56%

20) 0d66160 15.22user 0.03system 0:15.80elapsed +1.87%

(hilbert_curve linear sphere_sweep

scene with new solve_quadratic()) (-7.5%)

21) 761dd0b 15.05user 0.04system 0:15.71elapsed -1.12%

22) 552b625 (quartic polish non-sturm lemon scene) (+0.82%)

23) 63e0456 15.16user 0.02system 0:15.76elapsed +1.20%

24) ec28851 NA Code documentation only.

25) 8962513 15.43user 0.02system 0:16.05elapsed +1.78% -48.94%

(quartic polish non-sturm lemon scene) (+1.02% +2.70%)

19) Removing leading, near zero coefficient reduction in Solve_Polynomial().

Leading coefficients of < SMALL_ENOUGH (1e-10) were being dropped

reducing the order of the incoming polynomial when the near zero values

had meaning with respect to roots. Led to both false roots and missed roots.

20) New zero coefficient stripping and solve_quadratic implementation.

General effort to implement better 'effective zero' coefficient

handling. Created new POV_DBL_EPSILON macro value which is 2x the C++

standards <float type>_EPSILON value and updated PRECISE_EPSILON to be

2x the single bit epsilon as well.

Zero filtering in polysolve now looks at all polynomial coefficients and

either sets 'effective zeros' to exactly 0.0 or strips them if they are

leading coefficients.

The much more accurate near zero coefficients drove the need for a

better solve_quadratic implementation included with this commit. Note it

supports the PRECISE_FLOAT options like polysolve.

Zero filtering in solve_quadratic, solve_cubic and solve_quartic now

standardized both in implementation and use of POV_DBL_EPSILON.

21) Created new constexpr DBL variable MIN_ISECT_DEPTH_RETURNED.

Near term need to use a value not MIN_ISECT_DEPTH in blob.cpp to test

Jérôme's github pull request #358. Longer term aim is to drive all

returned intersection depths from shape code to

MIN_ISECT_DEPTH_RETURNED. The value is automatically derived from DBL

setting and at double resolves to about 4.44089e-08. On the order of the

square root of POV_DBL_EPSILON.

Moving blob.cpp's inside test value INSIDE_TOLERANCE to POV_DBL_EPSILON

over previous recent re-calculation. Over time plan is to move EPSILONs

best nearer a double's step to POV_DBL_EPSILON.

Cleaning up doxygen documentation added during recent solver related

updates.

22) Adding root polishing step to solve_quartic function.

Newton-Raphson step added to polish initial roots found by the

solve_quartic function. The core solve_quartic tolerance allows roots to

drift from <=0.0 to >0.0 values with the latter causing artifacts and

additional root filtering. This the reason for the long too large 1e-2

intersection depth value in blob.cpp now reduced to

MIN_ISECT_DEPTH_RETURNED.

As part of this change created a new FUDGE_FACTOR4(1e-8) constant DBL

value to replace previous use of SMALL_ENOUGH(1e-10) within

solve_quartic. Looks like the value had been smaller to make roots more

accurate, but at the cost of missing roots in some difficult equation

cases. With the root polishing can move back to a larger value so as to

always get roots. Yes, this better addresses most of what the already

remove difficult_coeffs() function and bump into polysolve was trying to do.

23) Adding root polishing step to polysolve function.

24) Cleaning up a few comments and doxygen documentation.

25) Moving to more conservative root polishing implementations.

Post a reply to this message

Attachments:

Download 'tolerancestory.png' (97 KB)

Preview of image 'tolerancestory.png'

|

|

![]()