
"Norbert Kern" <nor### [at] t-online de> wrote:

>

> The figures are excellent - i love them...

Thanks Norbert!

> I dont like the structure and the material - you made materials much more

> elaborate than this...

Well, ancient aliens apparently have a very different design aesthetic!

No, seriously, I'm thinking of doing another version with something else there

instead. I've been working on a simple spaceship...


hi,

"Kenneth" <kdw### [at] gmail com> wrote:

> ... I can't even get

> a *basic* experiment with a matrix to work in v3.8xx for Windows:

>

> #declare MY_MATRIX =

> transform{

> matrix

> <1,1,1,

> 1,1,1,

> 1,1,1,

> 0,0,1>

> }

>

> .... with or without a semi-colon at the end. "Fatal error in parser:

> uncategorized error"

>

> So I must be doing something basically wrong-- or else my syntax is incorrect,

> although I don't see where.

The problem must be elsewhere; the syntax is correct. I tried it on (Linux,
self-compiled) alpha.10064268.

regards, jr.


On 20/02/2021 at 02:41, Robert McGregor wrote:

> Thomas de Groot <tho### [at] degroot org> wrote:

>

>> Excellent! And Thanks for the detailed explanation; I shall note that

>> carefully down for future study and use. The pose rig is particularly

>> interesting for me. I have done some of this in Poser and there it is -

>> not difficult - but demanding a close attention. How is this in Blender?

>> more straightforward? Somehow, I guess it needs a close attention there too.

>

> Thanks Thomas. The posing in Blender is pretty straightforward, it just takes

> time to get all the bones in situated properly within the mesh. I spent about 3

> hours building the rig and trying various poses.

>

Yes, that is pretty close to what Poser needs too. A good incentive to
explore that direction again.

--

Thomas


"jr" <cre### [at] gmail com> wrote:

>

> "Kenneth" <kdw### [at] gmail com> wrote:


> > ... I can't even get a *basic* experiment with a matrix to work

> > in v3.8xx for Windows:

> > ...

> > So I must be doing something basically wrong-- or else my syntax is

> > incorrect, although I don't see where.

>

> the problem must be elsewhere, the syntax is correct, tried it on (Linux,

> self-compiled) alpha.10064268.

>

Thanks.

That's strange, though; I just ran my matrix code snippet in v3.7.1 beta 9

(Windows), and it fails there as well, same error.

But I found the cause (at least for Windows builds): It's the matrix vector
values themselves that I used-- which I chose blindly, as just some combination
of 0's and 1's! I had assumed that any combination would work for a simple syntax
test; apparently a bad idea. :-[

So I substituted the example values given in POV-Ray's "matrix" docs...

matrix < 1, 1, 0,

0, 1, 0,

0, 0, 1,

0, 0, 0 >

....and the #declared transform now runs without an error.

I guess it's not good to play around with things that I don't yet fully

understand ;-)
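A likely explanation for the error, not confirmed in the thread: POV-Ray needs the inverse of every transformation matrix (it keeps one alongside the matrix for its internal calculations), and a matrix whose upper 3x3 part has a zero determinant cannot be inverted. In the all-ones experiment every row of that 3x3 part is identical, so its determinant is zero, while the shear example from the docs has determinant 1. The Python sketch below checks a candidate matrix before it goes into a scene; the 12-value ordering (three rows of the 3x3 part, then the translation row, as written in SDL) and the helper names are assumptions made for illustration.

```python
# Hypothetical helpers: check whether the linear (upper 3x3) part of a
# 12-value POV-Ray-style "matrix <...>" can be inverted. The translation
# row (the last three values) does not affect invertibility.

def linear_determinant(m):
    """Determinant of the 3x3 part of a 12-value matrix list."""
    a, b, c, d, e, f, g, h, i = m[:9]   # m[9:12] is the translation row
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def is_invertible(m, eps=1e-10):
    """True if the 3x3 part is (numerically) non-singular."""
    return abs(linear_determinant(m)) > eps

kenneth_matrix = [1, 1, 1,
                  1, 1, 1,
                  1, 1, 1,
                  0, 0, 1]
docs_shear = [1, 1, 0,
              0, 1, 0,
              0, 0, 1,
              0, 0, 0]

print(linear_determinant(kenneth_matrix), is_invertible(kenneth_matrix))  # 0 False
print(linear_determinant(docs_shear), is_invertible(docs_shear))          # 1 True
```

Any matrix with two identical (or proportional) rows in its 3x3 part fails this check, so "random" combinations of 0's and 1's are quite likely to hit it.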



"Kenneth" <kdw### [at] gmail com> wrote:

> That's a wonderful scene, very mysterious and evocative. And thanks for sharing

> the details of your techniques, plus the image of the various assets you used.

Thanks Kenneth, I appreciate that.

> That idea gave me some thoughts about my recent post regarding AA problems with

> very bright objects, where AA fails for those bright pixels (Thanks!) So I put

> together something similar(?) to your idea: Rendering a scene the normal way,

> then bringing the image back into POV-ray and doing an

> image-to-function-to-pigments conversion on it (all 3 color channels). Then

> re-combining (using 'average') those 3 pigment functions-- with simple but

> proper r-g-b color_maps-- to re-create the image as before. At this point, I

> discovered that I could change the color_maps' index values to effectively

> mask-off (as black) any pixels over a certain 0-1 brightness threshold... like

> your idea. Great, so far!

Yes, this sounds very much like what I did (which was originally inspired by
reading your post about AA problems with bright pixels and pondering ways to fix that!)

> This is the 2nd part of my task. I looked up 'gaussian blur', and I see that it

> involves matrix use in some way. I'm still a rank beginner when it comes to

> manipulating matrices, but aside from that small problem (ha), I can't even get

> a *basic* experiment with a matrix to work in v3.8xx for Windows

Okay, I didn't use a matrix in that sense, i.e., the matrix keyword as used for

transformations. I just built my own Gaussian smoothing matrix using a 2d array,

like this (note the symmetry):

#declare ConvolutionKernel = array[5][5] { // Smoothing matrix

{1, 4, 7, 4, 1},

{4, 16, 26, 16, 4},

{7, 26, 41, 26, 7},

{4, 16, 26, 16, 4},

{1, 4, 7, 4, 1}

}

This small kernel (matrix) holds pixel weights. The central element (with the
highest value) sits over the calculation target: the pixel whose new color will be
a weighted average of itself and all the surrounding pixel colors. In a nutshell
it works like this:

Place the center weight of the kernel (41 in this case) on an image pixel. The
colors of all pixels under the matrix (from the original image, as sampled from
the function pigment) are multiplied by the overlapping kernel weights, and all
of those products are added together into a final summed color. Each kernel
weight that is multiplied against a pixel color is also added to an overall
weight sum (at image borders not all weights will have pixels under them). The
averaged color for the central pixel is then the summed color divided by that
weight sum.

Move the center of the kernel to the next pixel and iterate the process until

all pixels have been assigned averaged colors. At that point the averaging has

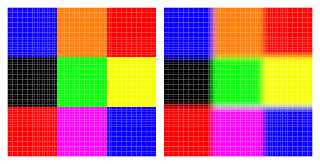

blurred the image, in this case the 5x5 matrix gives a 3-pixel blur (see

attached sample image, left is a magnified 60x60 test image and right is the

blurred convolution result).
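The weighted-average steps above can be sketched in a few lines of Python. This is not Robert's SDL code: the image is assumed to be a single channel stored as a list of rows (run it once per color channel), and kernel weights that fall off the image are simply left out of the normalizing sum, as described above.

```python
# Sketch of kernel-weighted averaging (convolution) with border handling:
# only weights that land on an existing pixel count toward weight_sum.

KERNEL = [
    [1,  4,  7,  4, 1],
    [4, 16, 26, 16, 4],
    [7, 26, 41, 26, 7],
    [4, 16, 26, 16, 4],
    [1,  4,  7,  4, 1],
]

def convolve(image, kernel=KERNEL):
    height, width = len(image), len(image[0])
    r = len(kernel) // 2                      # kernel radius (2 for a 5x5)
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            color_sum = 0.0
            weight_sum = 0.0
            for ky in range(-r, r + 1):
                for kx in range(-r, r + 1):
                    sy, sx = y + ky, x + kx
                    if 0 <= sy < height and 0 <= sx < width:  # skip off-image taps
                        w = kernel[ky + r][kx + r]
                        color_sum += w * image[sy][sx]
                        weight_sum += w
            out[y][x] = color_sum / weight_sum
    return out

# A single bright pixel in a 7x7 black image spreads into its neighbors.
img = [[0.0] * 7 for _ in range(7)]
img[3][3] = 1.0
blurred = convolve(img)
print(round(blurred[3][3], 4))   # 0.1502 (41/273)
```

The four nested loops also make the cost discussed below easy to see: every output pixel reads up to 25 input pixels.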

Doing it this way is a *slow* process, especially in SDL, because a
1920x1080 pixel image has 2 million+ pixels, and each needs to be averaged
with (for the most part, except at the edges) 24 neighboring pixels, for an
estimated 48 million samples and calculations.


Attachments: 'convolveexample.png' (1219 KB)

"Robert McGregor" <rob### [at] mcgregorfineart com> wrote:

> "Kenneth" <kdw### [at] gmail com> wrote:


> > This is the 2nd part of my task. I looked up 'gaussian blur', and I see that it

> > involves matrix use in some way. I'm still a rank beginner when it comes to

> > manipulating matrices, but aside from that small problem (ha), I can't even get

> > a *basic* experiment with a matrix to work in v3.8xx for Windows

>

> Okay, I didn't use a matrix in that sense, i.e., the matrix keyword as used for

> transformations. I just built my own Gaussian smoothing matrix using a 2d array,

> like this (note the symmetry):

>

> #declare ConvolutionKernel = array[5][5] { // Smoothing matrix

> {1, 4, 7, 4, 1},

> {4, 16, 26, 16, 4},

> {7, 26, 41, 26, 7},

> {4, 16, 26, 16, 4},

> {1, 4, 7, 4, 1}

> }

Yes, it's a mathematical "matrix" - but with what we're doing with it, it's
implemented as an _array_ in code. There isn't much under the hood for doing
matrix math in SDL at the moment.

So I guess "array processing algorithm" would most clearly describe the

operation.

I did similar here:

http://news.povray.org/povray.binaries.images/thread/%3Cweb.5e9e3e93521c7ab7fb0b41570%40news.povray.org%3E/?ttop=432310&toff=50

And probably for the Fast Fourier Transform and other places where I needed the

matrix structure to accomplish what I wanted.


"Robert McGregor" <rob### [at] mcgregorfineart com> wrote:

> "Kenneth" <kdw### [at] gmail com> wrote:


>

> > ...So I put together something similar(?) to your idea: Rendering a scene

> > the normal way, then bringing the image back into POV-ray and doing an

> > image-to-function-to-pigments conversion on it (all 3 color channels)...

>

> Yes, this sounds very much like what I did (which was orginally inspired by

> reading your bright pixels AA problems post and pondering ways to fix that!)

Ha! This is what I love about the newsgroups here: similar ideas feeding off of

each other, to find a common solution.

> > I looked up 'gaussian blur', and I see that it

> > involves matrix use in some way...

>

> Okay, I didn't use a matrix in that sense, i.e., the matrix keyword as

> used for transformations.

Yes, I had a half-formed idea that this might be the case. Honestly, I had no

real idea as to how I was going to proceed with a 'typical' matrix, if I could

even get the darn thing to run ;-)

> I just built my own Gaussian smoothing matrix using a 2d array,

> like this (note the symmetry):

>

> #declare ConvolutionKernel = array[5][5] { // Smoothing matrix

> {1, 4, 7, 4, 1},

> {4, 16, 26, 16, 4},

> {7, 26, 41, 26, 7},

> {4, 16, 26, 16, 4},

> {1, 4, 7, 4, 1}

> }

[clip]

Ah, the key! That's brilliant and elegantly simple. I shall experiment with it.

Thanks again for your willingness to share, and for your clear comments.

Here's an alternate 'brute force' idea for finding / masking off pixels in an

image that go over a certain brightness limit: Years ago, I wrote some code

using eval_pigment to go over each and every pixel of a pre-rendered image, then

storing all of those found color values in a 2-D array. (Or alternately, just

the brightest pixel values according to a particular threshold, and their x/y

positions in the image). The final step was to 're-create' the image by

assigning each original pixel's color-- or black, as the case may be-- to a tiny

flat box or polygon in an x/y grid. For example, an 800x600 re-created image
would be made of 480,000 tiny box shapes! Crude but effective.
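The per-pixel masking pass can be sketched outside POV-Ray like this. In SDL the colors would come from eval_pigment; here the pre-rendered image is assumed to be a nested list of (r, g, b) tuples in the 0-1 range, and "brightness" is assumed to be the plain average of the three channels (a luminance-weighted sum would work too). The function name is hypothetical.

```python
# Sketch: replace every pixel brighter than `threshold` with black, and
# record the (x, y) positions of the pixels that were masked off.

def mask_bright(pixels, threshold):
    masked = []
    bright_positions = []
    for y, row in enumerate(pixels):
        new_row = []
        for x, (r, g, b) in enumerate(row):
            if (r + g + b) / 3.0 > threshold:   # brightness = channel average
                new_row.append((0.0, 0.0, 0.0))
                bright_positions.append((x, y))
            else:
                new_row.append((r, g, b))
        masked.append(new_row)
    return masked, bright_positions

image = [
    [(0.2, 0.2, 0.2), (1.0, 1.0, 0.9)],
    [(0.5, 0.4, 0.3), (0.95, 0.9, 1.0)],
]
masked, hits = mask_bright(image, threshold=0.8)
print(hits)   # [(1, 0), (1, 1)]
```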

But a more elegant solution to this 'many boxes' idea would be to take all of
the array's eval_pigment color values (and positions in the image), and somehow
shove them into a FUNCTION of some sort-- to ultimately be applied as a *single*
pigment function to a single flat box. But HOW to do this has always eluded me.

In other words, how can a single function be 'built' using a repetitive

iteration scheme-- other than by assigning each and every pixel color to its own

initial pigment function, then combining/adding(?) all of the 480,000 functions

into 1 for the final image output?? (Which by itself leaves out the *positions*

of the individual pixels, another major problem.)

Can such a pigment function be built piece-by-piece-- in a #for loop, for

example-- AND in correct final order of the pixels? I was wondering if you have

ever devised a scheme to do something like this.


"Kenneth" my namesake, wrote:

> ...take all of

> the arrays' eval_pigment color values (and positions in the image), and somehow

> shove them into a FUNCTION of some sort-- to ultimately be applied as a *single*

> pigment function to a single flat box. But HOW to do this has always eluded me.

Because it's hiding under your nose - under the hood.

It's the image_map "function", all shoved into ... one pigment pattern.

Throughout all of my experiments over the years, I've used POV-Ray to help teach

myself math, and computer graphics, which has helped me learn about POV-Ray, and

what actually goes on (or is supposed to go on) under the hood.

I dabble in ShaderToy because there is no (or very little) "under the hood".

You have to write it all yourself. And that dispels the illusions of things

like light source, shadows, camera, 3D space.... it's all just "one function"

that evaluates to a certain rgb value at every x,y position of the screen, or

image file.

So how does a function work?

We take <x, y, z> and plug those into the function, which computes a color

value.

But you could also pre-calculate the color values, and store them - in a file -

to reference when you're looping through all of the position values - and that's

called an image file. That gets implemented via the pigment {image_map} syntax.
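The "pre-calculate and store" idea can be illustrated with a small Python sketch: evaluate a function once per pixel into a table, then answer later queries by looking the value up. The pattern function, the 0-1 coordinate range, and nearest-pixel lookup are all assumptions for illustration; POV-Ray's image_map offers interpolation and several mapping types that this ignores.

```python
import math

def pattern(u, v):
    """Hypothetical stand-in pattern: any function of position would do."""
    return 0.5 + 0.5 * math.sin(6.0 * u) * math.cos(6.0 * v)

def bake(func, width, height):
    """Evaluate func once at every pixel center and store the results."""
    return [[func((col + 0.5) / width, (row + 0.5) / height)
             for col in range(width)]
            for row in range(height)]

def lookup(table, u, v):
    """Nearest-pixel lookup for 0-1 coordinates: no math, just indexing."""
    height, width = len(table), len(table[0])
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return table[row][col]

table = bake(pattern, 64, 64)                 # the "image file"
u, v = (10 + 0.5) / 64, (20 + 0.5) / 64       # center of column 10, row 20
print(lookup(table, u, v) == pattern(u, v))   # True: the stored value matches
```

Between pixel centers the lookup returns the nearest stored value rather than the exact function value; that is the trade-off of storing data instead of math.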

IIRC, POV-Ray even has a mechanism whereby you can "render" something in
memory and use it as an image_map-style thing, without rendering it out or
saving it to an image file first.

Now, you could manually construct a function as a giant polynomial that

intersects all the right rgb values given the right xyz values --- but that's

just encoding the image file data into an equation - which is probably wasteful

of effort, time, and storage. But it's also likely the kind of thing that _I'd_

be likely to do, in order to learn how to do it, prove that it's possible, and

possibly learn another dozen things and raise 34 more questions in the process.

You likely don't have to store ALL of the image data, if a lot of it is the same

- and then you can write a function, or an algorithm that encodes a lot of data

with - less data. That is image compression.

In the 80's, I was making a world map on an Atari 800 XL, and it seemed silly to

store EVERY "pixel" of the map, when I was only dealing with 2 colors, and I had

long stretches of same-color pixels. So I defined them as "lines" (or "boxes")

and just saved the starting point and the length. Like assembling a hardwood

floor.
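Saving a starting point and a length for each stretch of same-color pixels is what is usually called run-length encoding. A minimal Python sketch for one row of a two-color map; the (start, length, value) tuple layout is just one possible choice, and the function names are hypothetical.

```python
# Run-length encoding of one row: each run of identical pixels becomes a
# (start, length, value) tuple instead of `length` separate pixels.

def encode_row(row):
    runs = []
    start = 0
    for i in range(1, len(row) + 1):
        # Close the current run at the end of the row or when the value changes.
        if i == len(row) or row[i] != row[start]:
            runs.append((start, i - start, row[start]))
            start = i
    return runs

def decode_row(runs):
    row = []
    for _start, length, value in runs:
        row.extend([value] * length)
    return row

row = [0, 0, 0, 0, 1, 1, 1, 0, 0, 1]
runs = encode_row(row)
print(runs)   # [(0, 4, 0), (4, 3, 1), (7, 2, 0), (9, 1, 1)]
```

The longer the same-color stretches, the fewer tuples are needed, which is why this pays off for a two-color map with large areas of land and sea.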

So how does one take 480,000 boxes and color them all with one function?

union {

.... all your boxes ...

pigment {image_map}

}

All those boxes get immersed into the region where the image_map correlates to

the 3D space coordinates. It creates a 1:1 correspondence - a look-up table - a

function - that does what you'd essentially be doing from scratch, or through a

roundabout way with a hand-written function.

At least that's how it all works in my head at this point in time. There may

be some nuances and POV-Ray specific source code things that don't quite work in

exactly that way... but I hope this helps you "wrap your head around it"?

- Bill


"Bald Eagle" <cre### [at] netscape net> wrote:

>

> Because it's hiding under you nose - under the hood.

> It the image_map "function" that is all shoved into ... one pigment pattern.

> [snip]

> So how does a function work?

> We take <x, y, z> and plug those into the function, which computes a color

> value.

>

> But you could also pre-calculate the color values, and store them - in a

> file-- to reference when you're looping through all of the position values -

> and that's called an image file. That gets implemented via the pigment

> {image_map} syntax.

Yeah, I guess that my 'dream scheme' of...

trace --> eval_pigment --> a SINGLE function{pigment...} [somehow?]

....is kind of an attempt to mimic what goes on 'under the hood'... a sort of

'reverse engineering' scheme, devised in SDL.

The seemingly effortless way that POV-ray itself can take an image_map and turn

it into a single function{pigment...}-- with all of the original pixels in the

correct order-- is like Black Magic to me.

>

> Now, you could manually construct a function as a giant polynomial that

> intersects all the right rgb values given the right xyz values --- but that's

> just encoding the image file data into an equation - which is probably

> wasteful of effort, time, and storage.

Yeah, those little caveats did occur to me. :-[

I agree: My hoped-for scheme seems to be not-so-elegant after all. Compared to

that, my older scheme of...

trace --> eval_pigment --> 480,000 colored boxes

.... suddenly looks like an elegant and simple stroke of genius! :-P

>

> So how does one take 48,000 boxes and color them all with one function?

>

> union {

> .... all your boxes ...

> pigment {image_map}

> }

>

Yes, very straightforward-- once my single and way-too-elaborate function itself

is created! But I guess I need to discard my eval_pigment idea itself as

unworkable (i.e., initially evaluating every *individual* pixel of an input

image, to ultimately create some sort of single function to represent all the

colors and pixel positions.)


"Kenneth" <kdw### [at] gmail com> wrote:


> "Bald Eagle" <cre### [at] netscape net> wrote:

> >

> > Because it's hiding under you nose - under the hood.

> > It the image_map "function" that is all shoved into ... one pigment pattern.

> > [snip]

> > So how does a function work?

> > We take <x, y, z> and plug those into the function, which computes a color

> > value.

> >

> > But you could also pre-calculate the color values, and store them - in a

> > file-- to reference when you're looping through all of the position values -

> > and that's called an image file. That gets implemented via the pigment

> > {image_map} syntax.

>

> Yeah, I guess that my 'dream scheme' of...

> trace --> eval_pigment --> a SINGLE function{pigment...} [somehow?]

> ....is kind of an attempt to mimic what goes on 'under the hood'... a sort of

> 'reverse engineering' scheme, devised in SDL.

But what you're essentially doing is exactly that. Because there is no

"function" - it's a look-up table - reading in the color values for each x,y

pixel in the image. It's simply the fact that it's done under the hood, via

compiled source code, that makes it seem "different".

povray-3.7-stable/povray-3.7-stable/source/backend/support/imageutil.cpp

bool image_map(const VECTOR EPoint, const PIGMENT *Pigment, Colour& colour)
{
    int reg_number;
    DBL xcoor = 0.0, ycoor = 0.0;

    // If outside map coverage area, return clear
    if(map_pos(EPoint, ((const TPATTERN *) Pigment), &xcoor, &ycoor))
    {
        colour = Colour(1.0, 1.0, 1.0, 0.0, 1.0);
        return false;
    }
    else
        image_colour_at(Pigment->Vals.image, xcoor, ycoor, colour, &reg_number, false);

    return true;
}

"If the x,y position is outside of the image area, return a clear value, else

return the color of the image at that x,y value."

No function. Just an algorithm in a loop.
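For comparison, here is a simplified Python analog of the image_map() logic quoted above. It is a sketch, not POV-Ray's map_pos: it assumes planar mapping of the image onto the unit square of the x-y plane with row 0 at the top, nearest-pixel lookup, and a `once` flag modeled on the SDL keyword of the same name (without `once`, POV-Ray tiles the image across the plane instead of returning clear). The clear color copies the (r, g, b, filter, transmit) values from the C++ snippet.

```python
# Simplified, hypothetical analog of the C++ image_map() above.

CLEAR = (1.0, 1.0, 1.0, 0.0, 1.0)  # white, fully transmitting

def map_pos(point, width, height, once=True):
    """Return (col, row) for a 3D point, or None if outside the covered area."""
    x, y, _z = point                  # planar mapping ignores z
    if once:
        if not (0.0 <= x < 1.0 and 0.0 <= y < 1.0):
            return None               # outside map coverage area
    else:
        x, y = x % 1.0, y % 1.0       # tile the image over the whole plane
    col = min(int(x * width), width - 1)
    row = min(int((1.0 - y) * height), height - 1)  # rows run top to bottom
    return col, row

def image_map(point, pixels, once=True):
    """Return (color, hit), like the C++ version's colour/bool pair."""
    height, width = len(pixels), len(pixels[0])
    pos = map_pos(point, width, height, once)
    if pos is None:
        return CLEAR, False
    col, row = pos
    r, g, b = pixels[row][col]
    return (r, g, b, 0.0, 0.0), True

# A 2x2 test image: red, green on the top row; blue, white on the bottom row.
pixels = [[(1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
          [(0.0, 0.0, 1.0), (1.0, 1.0, 1.0)]]
print(image_map((0.25, 0.75, 0.0), pixels))              # top-left pixel: red, hit
print(image_map((1.5, 0.5, 0.0), pixels))                # outside: clear, no hit
print(image_map((1.25, 0.75, 0.0), pixels, once=False))  # tiled copy: red again
```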

> The seemingly effortless way that POV-ray itself can take an image_map and turn

> it into a single function{pigment...}-- with all of the original pixels in the

> correct order-- is like Black Magic to me.

Why? Can we even count the number of software packages that can do that?

What about a movie player that can read them in fast enough to give you greater

than 15 fps?

> Yeah, those little caveats did occur to me. :-[

> I agree: My hoped-for scheme seems to be not-so-elegant after all. Compared to

> that, my older scheme of...

> trace --> eval_pigment --> 480,000 colored boxes

> .... suddenly looks like an elegant and simple stroke of genius! :-P

I've done it kind of the opposite way - tracing objects and converting the
height to a color value - but I realized it was just as easy to do with a
gradient pigment pattern...

Now, what I have wanted, and suggested in the past, is for there to be a

mechanism by which the compiled source code stores all the color data for an

image file in an SDL-accessible 2D array. Which would kinda give you what you

want without all of the mucking around.

> > So how does one take 480,000 boxes and color them all with one function?
> >
> > union {
> > ... all your boxes ...
> > pigment {image_map}
> > }
> >

> Yes, very straightforward-- once my single and way-too-elaborate function itself
> is created! But I guess I need to discard my eval_pigment idea itself as
> unworkable (i.e., initially evaluating every *individual* pixel of an input
> image, to ultimately create some sort of single function to represent all the
> colors and pixel positions.)

What about function {pigment {image_map}}?
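A pigment function is, in effect, that same table lookup wrapped so it can be called with any <x, y, z>. A C++ sketch of the wrapping, with hypothetical names (Rgb, PigmentFn, make_image_function()); it assumes nearest-pixel lookup, ignores z as the default planar image_map projection does, and wraps x and y into [0, 1) so the image tiles.

```cpp
#include <cmath>
#include <cstddef>
#include <functional>
#include <memory>
#include <utility>
#include <vector>

// Hypothetical plain RGB colour.
struct Rgb {
    double r, g, b;
};

// Hypothetical "pigment function" type: position in, colour out.
using PigmentFn = std::function<Rgb(double, double, double)>;

// Wrap a row-major pixel table (row 0 = top) in a callable of <x, y, z>.
PigmentFn make_image_function(std::vector<Rgb> pixels,
                              std::size_t width, std::size_t height) {
    // Shared so that copies of the returned function don't copy the pixels.
    auto table = std::make_shared<const std::vector<Rgb>>(std::move(pixels));
    return [table, width, height](double x, double y, double /*z*/) -> Rgb {
        // Wrap into [0, 1) so the image repeats, like an image_map without `once`.
        double u = x - std::floor(x);
        double v = y - std::floor(y);
        // Nearest pixel; y flipped so the top row sits at v near 1.
        std::size_t col = static_cast<std::size_t>(u * width);
        std::size_t row = static_cast<std::size_t>((1.0 - v) * height);
        if (col >= width) col = width - 1;    // guard rounding at the edges
        if (row >= height) row = height - 1;
        return (*table)[row * width + col];
    };
}
```

Calling f(x, y, z) here is roughly what F(x, y, z) does for a #declare'd function { pigment { image_map {...} } } in SDL: the returned colour comes from indexing the stored pixels, not from evaluating a formula.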

And I guess I should point out that _form_ follows _function_.

What do you want to do with the function once you've got it, that you need to have it in function form?