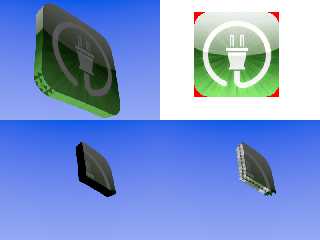

I'm having an issue which I'm hoping someone can give me some insight into.

I created a macro that builds a spherical segment as a mesh (the original 'mesh'
object, not 'mesh2') from the specified parameters (i.e. theta angle, sigma
angle, radius of inner shell, radius of outer shell).

In a nutshell, it is created by first constructing a tiny closed mesh object
(thereby enabling use of the 'inside_vector' modifier), composed of 6 sides and
12 'triangle' objects, with UV parameters supplied by the calling macro.

The calling macro creates a union of the smaller mesh objects based on a set

number of divisions in the theta and sigma (angles), and radius directions.

My problem is that no matter how I apply the 'uv_mapping' and 'texture'
statements, POV always seems to apply the 'texture' first and the
'inside_vector' afterwards. With transparent 'image_map' images (such as the
icon image in the upper right quadrant of the attached image), this yields
unintended results such as those in the corners of the mapped image (upper left
quadrant).

I've tried changing the order so that the texture is applied both to the 'mesh'
objects individually and to the 'union' of the individual meshes, but with the
same result. I would have thought that, since the 'inside_vector' is applied to
the individual mesh objects, applying the texture to the 'union' object would do
the trick, but this does not appear to be the case.

Since the documentation says it was designed for CSG, I've tried encasing the

mesh in other "normal" objects (such as a 'sphere' or 'box') hoping this would

resolve the issue. The 'inside_vector' does appear to be working correctly as

indicated by the two bottom pictures (left with the 'inside_vector' parameter

added to an intersected object, right without the 'inside_vector' parameter

added).

It seems to be an issue of determining which surface needs to have the
'inside_vector'. Ideally, POV would calculate the surface first (thereby making
it solid), then apply the texture with the transparency. But as indicated, it
seems to apply the texture incorporating the transparency first, thereby making
parts of the mesh faces transparent, and only then apply the 'inside_vector'
(making the result solid).

Anybody have any recommendations for a fix?

I know this is an unintended consequence (at least for me), but is this a bug in
general? Or just some limitation?

Is it something that can be corrected in future versions of POV-Ray so as not to
require a fix? That is, can POV be programmed so the user can change the order in
which the 'inside_vector' and transparent 'image_map' are applied?

-Jeff

Attachment: 'inside_vector.png' (135 KB)

From: clipka

Subject: Re: Image_map, transparent, mesh, and inside_vector

Date: 14 Jan 2013 11:54:45

Message: <50f43855@news.povray.org>

On 14.01.2013 01:55, Woody wrote:

> I'm having an issue which I'm hoping someone can give me some insight into.

You seem to have some misconceptions about how POV-Ray's CSG and

handling of transparent textures cooperate. Because as a matter of fact

they don't.

First it is crucial to understand that POV-Ray renders /surfaces/. This

is true even for "solid" objects: The concept of being "solid" only has

a meaning in the context of "Constructive Solid Geometry" (CSG)

intersections and differences, where the volumetric definitions of

objects are used to mutually determine which sections of the other

objects' surfaces are to be rendered; the only reason why this doesn't

lead to visible holes in the geometry is because the holes that are

ripped open in the surface of one object are precisely filled with the

remaining surfaces of the other objects.

When applying partially transparent textures to an object, other rules

apply: Textures define the colors (and transparency values) to use when

an object's surface is rendered, but they don't define any surfaces

themselves. Textures can be used to suppress all or part of an object's

surface (by using a transparent pigment), but they can't define new

surfaces: They are unable to fill the holes they rip open.

You /can/ define a shape based on a transparent texture, but this

requires the use of the slow isosurface object.
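The difference between the two mechanisms can be seen in a small test scene. The following is only an illustrative sketch; all sizes, colours and positions are arbitrary choices and not taken from the original poster's scene. The left box is cut by a CSG difference, so the hole is closed by the remaining surface of the sphere. The right box merely carries a partly transparent pigment, so the transparent checks let you look into an empty shell.

```povray
#version 3.7;
global_settings { assumed_gamma 1.0 }

camera { location <0, 2, -6> look_at <0, 0, 0> }
light_source { <10, 20, -20> color rgb 1 }
plane { y, -1.2 pigment { color rgb 0.7 } }

// CSG: the sphere's volume decides which part of the box surface is removed,
// and the part of the sphere's surface inside the box closes the hole.
difference {
  box { -1, 1 }
  sphere { <1, 1, -1>, 1 }
  pigment { color rgb <1, 0.3, 0.3> }
  translate -1.5*x
}

// Texture only: the fully transparent checks suppress parts of the surface,
// but nothing fills them, so the inner side of the far faces becomes visible.
box { -1, 1
  pigment {
    checker color rgbt <0.3, 0.3, 1, 0>, color rgbt <1, 1, 1, 1>
    scale 0.5
    translate 0.13   // keep checker cell boundaries off the box faces
  }
  translate 1.5*x
}
```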

clipka <ano### [at] anonymous org> wrote:

> On 14.01.2013 01:55, Woody wrote:

> > I'm having an issue which I'm hoping someone can give me some insight into.

>

> You seem to have some misconceptions about how POV-Ray's CSG and

> handling of transparent textures cooperate. Because as a matter of fact

> they don't.

>

> First it is crucial to understand that POV-Ray renders /surfaces/. This

> is true even for "solid" objects: The concept of being "solid" only has

> a meaning in the context of "Constructive Solid Geometry" (CSG)

> intersections and differences, where the volumetric definitions of

> objects are used to mutually determine which sections of the other

> objects' surfaces are to be rendered; the only reason why this doesn't

> lead to visible holes in the geometry is because the holes that are

> ripped open in the surface of one object are precisely filled with the

> remaining surfaces of the other objects.

>

> When applying partially transparent textures to an object, other rules

> apply: Textures define the colors (and transparency values) to use when

> an object's surface is rendered, but they don't define any surfaces

> themselves. Textures can be used to suppress all or part of an object's

> surface (by using a transparent pigment), but they can't define new

> surfaces: They are unable to fill the holes they rip open.

>

> You /can/ define a shape based on a transparent texture, but this

> requires the use of the slow isosurface object.

I stand corrected. Thanks for clarifying.

Wouldn't there be a way, though, to program the ray so that, given that it has
intersected a surface with transparency turned on but has not yet hit a surface
with transparency turned off, it would be able to calculate which pigment would
occur at each calculation point in between, based on the supplied UV values?

-Jeff

From: Alain

Subject: Re: Image_map, transparent, mesh, and inside_vector

Date: 14 Jan 2013 17:51:46

Message: <50f48c02@news.povray.org>

> clipka <ano### [at] anonymous org> wrote:

>> On 14.01.2013 01:55, Woody wrote:

>>> I'm having an issue which I'm hoping someone can give me some insight into.

>>

>> You seem to have some misconceptions about how POV-Ray's CSG and

>> handling of transparent textures cooperate. Because as a matter of fact

>> they don't.

>>

>> First it is crucial to understand that POV-Ray renders /surfaces/. This

>> is true even for "solid" objects: The concept of being "solid" only has

>> a meaning in the context of "Constructive Solid Geometry" (CSG)

>> intersections and differences, where the volumetric definitions of

>> objects are used to mutually determine which sections of the other

>> objects' surfaces are to be rendered; the only reason why this doesn't

>> lead to visible holes in the geometry is because the holes that are

>> ripped open in the surface of one object are precisely filled with the

>> remaining surfaces of the other objects.

>>

>> When applying partially transparent textures to an object, other rules

>> apply: Textures define the colors (and transparency values) to use when

>> an object's surface is rendered, but they don't define any surfaces

>> themselves. Textures can be used to suppress all or part of an object's

>> surface (by using a transparent pigment), but they can't define new

>> surfaces: They are unable to fill the holes they rip open.

>>

>> You /can/ define a shape based on a transparent texture, but this

>> requires the use of the slow isosurface object.

>

> I stand corrected. Thanks for clarifying.

>

> Wouldn't there be a way, though, to program the ray so that, given that it has
> intersected a surface with transparency turned on but has not yet hit a surface
> with transparency turned off, it would be able to calculate which pigment would
> occur at each calculation point in between, based on the supplied UV values?

>

> -Jeff

>

Unless your object is filled with some media, or uses colour fading, there is no
colour evaluation done between the surfaces.

Colour fading can only deal with uniform tints, making it unsuitable for your
intended use.

You can use media. The media can't exist inside your object unless you add the
"hollow" attribute to the object.

You can use your image to modulate the media density, but can't UV map it for
that purpose. The media will need to have a high density and will make the
object slower to render, but only in areas where you can see inside due to the
transparency. You need to use scattering media.

Please read the section about media in the documentation.
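A rough sketch of the media approach might look like the following. It is untested and rests on several assumptions: the file name "icon.png" is hypothetical, the image is projected flat onto the unit square in the x-y plane (not UV mapped), the density value of 200 is a guess at "high", and whether the alpha channel yields 1 for opaque or for transparent pixels may require swapping the colour map entries.

```povray
#version 3.7;
global_settings { assumed_gamma 1.0 }

camera { location <0.5, 0.5, -2.5> look_at <0.5, 0.5, 0> }
light_source { <5, 10, -10> color rgb 1 }
background { color rgb <0.1, 0.1, 0.3> }

// Hypothetical image file; any PNG with an alpha channel would do.
#declare F_Icon = function {
  pigment {
    image_pattern { png "icon.png" use_alpha interpolate 2 }
    color_map { [0 color rgb 0] [1 color rgb 1] }
  }
}

box { <0, 0, 0>, <1, 1, 0.1>
  pigment { color rgbt 1 }   // fully transparent container surface
  hollow                     // needed so that media can exist inside
  interior {
    media {
      scattering { 1, color rgb 1 }   // type 1: isotropic scattering
      density {
        // Planar lookup of the image; z is ignored.
        function { F_Icon(x, y, 0).gray }
        color_map { [0 color rgb 0] [1 color rgb 200] }   // 200: guessed
      }
    }
  }
}
```

With densities this high, the media sampling settings would probably need tuning to avoid noise.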

Alain

On 14.01.2013 19:28, Woody wrote:

> Wouldn't there be a way, though, to program the ray so that, given that it has
> intersected a surface with transparency turned on but has not yet hit a surface
> with transparency turned off, it would be able to calculate which pigment would
> occur at each calculation point in between, based on the supplied UV values?

While that /is/ indeed possible with scattering media (by using extreme

density values), there is one big problem: You can only simulate diffuse

surfaces with this. No specular or phong highlights.

My recommendation to you would be to use an isosurface, based on a

pigment function, which in turn is based on an image pattern with

use_alpha and bicubic interpolation.

clipka <ano### [at] anonymous org> wrote:

> On 14.01.2013 19:28, Woody wrote:

>

> > Wouldn't there be a way, though, to program the ray so that, given that it has
> > intersected a surface with transparency turned on but has not yet hit a surface
> > with transparency turned off, it would be able to calculate which pigment would
> > occur at each calculation point in between, based on the supplied UV values?

>

> While that /is/ indeed possible with scattering media (by using extreme

> density values), there is one big problem: You can only simulate diffuse

> surfaces with this. No specular or phong highlights.

>

> My recommendation to you would be to use an isosurface, based on a

> pigment function, which in turn is based on an image pattern with

> use_alpha and bicubic interpolation.

I'm having a hard time creating the code. Could you possibly provide a starting
point?
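As a possible starting point for the isosurface approach suggested above, a minimal sketch might look like the following. It is not code from the thread and has not been tuned: the file name "icon.png" is hypothetical, `max_gradient` is a guess, and depending on how the alpha channel is read, the sign of the function may need flipping. It builds a flat slab in the shape of the image's opaque area; wrapping it onto a spherical segment would need an additional coordinate transformation inside the function, which is not shown here.

```povray
#version 3.7;
global_settings { assumed_gamma 1.0 }

camera { location <0.5, 0.5, -2.5> look_at <0.5, 0.5, 0> }
light_source { <5, 10, -10> color rgb 1 }

// Hypothetical image file; any PNG with an alpha channel would do.
// The pattern covers the unit square in the x-y plane.
#declare F_Alpha = function {
  pigment {
    image_pattern { png "icon.png" use_alpha interpolate 3 }  // 3: bicubic in 3.7
    color_map { [0 color rgb 0] [1 color rgb 1] }
  }
}

isosurface {
  // "Inside" is where the function is below the threshold,
  // i.e. where the pattern value exceeds 0.5.
  function { 0.5 - F_Alpha(x, y, 0).gray }
  threshold 0
  // The container clips the shape in z and closes it front and back.
  contained_by { box { <0, 0, -0.05>, <1, 1, 0.05> } }
  max_gradient 50    // guessed; raise it if holes or artifacts appear
  accuracy 0.001
  pigment { color rgb <0.9, 0.7, 0.2> }
  finish { specular 0.6 }   // a real surface, so highlights work
}
```

Because the result is an actual surface with volume rather than a transparent texture, it should behave like any other solid in CSG and can carry specular or phong highlights, at the cost of slower rendering.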
