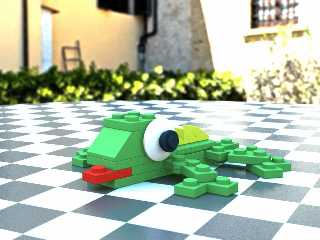

I've been experimenting with POV-Ray's Radiosity and my LEGO bricks. I'm using

Jaime Vives Piqueres' macro to do pseudo-HDRI lighting, mainly because it was

easy and I wanted to use 3.6 or 3.7 to render the scene. Interestingly, I found

3.7 beta's radiosity looked better than 3.6 with my LEGOs. I ran across this

model yesterday and I just "had" to model it. I needed to model a few parts not

in my library, which is always fun. About 12 hours to render using a dual-core

AMD Turion 2.0GHz -- blame the focal blur.


Attachments:

Download '7804 lizard.jpg' (61 KB)


"SafePit" <ste### [at] reids4fun com> wrote:

> 3.7 beta's radiosity looked better than 3.6 with my LEGOs.

Why, yes - of course it does! :P


"clipka" <nomail@nomail> wrote:

> "SafePit" <ste### [at] reids4fun com> wrote:

> > 3.7 beta's radiosity looked better than 3.6 with my LEGOs.

>

> Why, yes - of course it does! :P

LOL -- aren't you a "little" biased? :)


"SafePit" <ste### [at] reids4fun com> wrote:

> "clipka" <nomail@nomail> wrote:

> > "SafePit" <ste### [at] reids4fun com> wrote:

> > > 3.7 beta's radiosity looked better than 3.6 with my LEGOs.

> >

> > Why, yes - of course it does! :P

>

> LOL -- aren't you a "little" biased? :)

Speaking of "biased"... ;) how hard would it be to get path tracing going with

the 3.7 codebase? (think mc-pov 3.7)

My sincere apologies if this has been discussed elsewhere - but if so, could

someone please point me to it?


"sooperFoX" <bon### [at] gmail com> wrote:

>

> Speaking of "biased"... ;) how hard would it be to get path tracing going with

> the 3.7 codebase? (think mc-pov 3.7)

I've already pondered the idea. And found no reason why it shouldn't work.

Well, except for one thing: That "render until I like it" feature with interim

result output would currently be an issue. Nothing that couldn't be solved

though. For starters, high-quality anti-aliasing settings might do instead of

rendering the shot over and over again.

Eliminating classic lighting would be easy: Just don't use it.

Reflection and transmission could be handled by existing code without any change

at all. Adding blur, like in MCPov, would be an extra gimmick that in my opinion

might be of interest for standard POV-Ray scenes anyway.

The "diffuse job" could be fully covered by radiosity code, by just bypassing

the sample cache, using a low sample ray count and high recursion depth, and

some fairly small changes to a few internal parameters. That, plus another

thing that has already made its way onto the radiosity agenda: The radiosity

sample ray pattern would have to be able to use certain hints in the scene

about bright areas (MCPov's "portals"); and for monte-carlo tracing it would

have to be truly random.

So there would be some work to do, but basically nothing that wouldn't fit into

the 3.7 framework.

Ah, and SSLT should nicely fit into the thing as well, as soon as it properly

works with radiosity.

Scattering media would remain a problem, unless one would go for full volumetric

monte-carlo scattering simulation. Which, for highly scattering media, might be

veeeeeery slow.


"clipka" <nomail@nomail> wrote:

> I've already pondered the idea. And found no reason why it shouldn't work.

>

> Well, except for one thing: That "render until I like it" feature with interim

> result output would currently be an issue. Nothing that couldn't be solved

> though. For starters, high-quality anti-aliasing settings might do instead of

> rendering the shot over and over again.

As I understand it, the idea of rendering the shot over and over again is the

only way that it *could* work. Each pixel of a 'pass' follows a diffuse bounce

in a 'random' direction (which could have a small bias, eg portals) and the

more times you repeat those random passes the more 'coverage' of the total

solution you get. Kind of like having an infinite radiosity count, spread out

over time. That's why it starts out so noisy...

> Adding blur, like in MCPov, would be an extra gimmick that in my opinion

> might be of interest for standard POV-Ray scenes anyway.

That is just a natural side effect of the multiple passes - each pass takes a

randomly different reflection or refraction direction, and when you average

them all together you get a blurred result. This is also why you get perfect

anti-aliasing.

> The "diffuse job" could be fully covered by radiosity code, by just bypassing

> the sample cache, using a low sample ray count and high recursion depth, and

> some fairly small changes to a few internal parameters. That, plus another

Yes, I think so. You probably don't even need that high a recursion depth, maybe

5 or so. And it's not so much recursion as it is iteration, right? You don't

shoot [count] rays again for each bounce of a single path?

> thing that has already made its way onto the radiosity agenda: The radiosity

> sample ray pattern would have to be able to use certain hints in the scene

> about bright areas (MCPov's "portals"); and for monte-carlo tracing it would

> have to be truly random.

Could it just be any object with finish ambient > 0? There are also

bi-directional path tracing and Metropolis Light Transport systems, but I think

they are significantly more complicated to implement.

> So there would be some work to do, but basically nothing that wouldn't fit into

> the 3.7 framework.

I wish I understood enough of it to be able to help in implementing! Perhaps one

day I will find the time to look at the POV source. Or maybe fidos can help? :)

> Scattering media would remain a problem, unless one would go for full volumetric

> monte-carlo scattering simulation. Which, for highly scattering media, might be

> veeeeeery slow.

Yes, but it would be *correct*, and the results would be unbeatable. It could be

turned on/off though for people who don't want to wait weeks for a decent result

;)

Well, just a pipe dream. For now, there's Indigo Renderer and Blender...


"sooperFoX" <bon### [at] gmail com> wrote:

> > Well, except for one thing: That "render until I like it" feature with interim

> > result output would currently be an issue. Nothing that couldn't be solved

> > though. For starters, high-quality anti-aliasing settings might do instead of

> > rendering the shot over and over again.

>

> As I understand it, the idea of rendering the shot over and over again is the

> only way that it *could* work. Each pixel of a 'pass' follows a diffuse bounce

> in a 'random' direction (which could have a small bias, eg portals) and the

> more times you repeat those random passes the more 'coverage' of the total

> solution you get. Kind of like having an infinite radiosity count, spread out

> over time. That's why it starts out so noisy...

No, *basically* the only important ingredient for montecarlo raytracing to work

is that each *pixel* is sampled over and over again. That this is done in

multiple passes is "only" a matter of convenience. A single-pass "render each

pixel 1000 times" rule, which madly high non-adaptive anti-aliasing settings

would roughly be equivalent to, would qualify just as well.

I do agree of course that doing multiple passes, giving each pixel just one shot

per pass, and saving an interim result image after each pass, is much more

convenient than doing it all in one pass, giving each pixel 1000 shots before

proceeding to the next. No argument here.
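
(A minimal sketch of the point above, in Python rather than POV-Ray SDL. `sample_pixel` is a made-up stand-in for tracing one random path through a pixel; it is not part of POV-Ray or MCPov. Each sample depends only on the pixel and the sample index, so the order of the loops does not matter.)

```python
import random


def sample_pixel(x, y, k):
    """Hypothetical stand-in for one noisy sample: a 'true' value plus noise."""
    rng = random.Random(x * 1_000_003 + y * 1_009 + k)  # deterministic per (x, y, k)
    true_value = (x + y) / 10.0
    return true_value + rng.uniform(-0.5, 0.5)


def render_multipass(width, height, passes):
    """One sample per pixel per pass; an interim image could be saved after each pass."""
    sums = [[0.0] * width for _ in range(height)]
    for k in range(passes):
        for y in range(height):
            for x in range(width):
                sums[y][x] += sample_pixel(x, y, k)
    return [[s / passes for s in row] for row in sums]


def render_singlepass(width, height, samples):
    """All samples for a pixel are taken before moving on to the next pixel."""
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            total = 0.0
            for k in range(samples):
                total += sample_pixel(x, y, k)
            row.append(total / samples)
        image.append(row)
    return image
```

Both functions return the same image for the same sample count; the multi-pass version only differs in that a usable (noisy) picture exists after every pass.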

> > Adding blur, like in MCPov, would be an extra gimmick that in my opinion

> > might be of interest for standard POV-Ray scenes anyway.

>

> That is just a natural side effect of the multiple passes - each pass takes a

> randomly different reflection or refraction direction, and when you average

> them all together you get a blurred result. This is also why you get perfect

> anti-aliasing.

Well, it is not a natural side effect of the multiple passes - it is a natural

side effect of multiple rays being shot per pixel *and* some jittering applied

to the reflection.

You can achieve the same effect with standard POV by using anti-aliasing (the

multiple-ray-shooting component) with micronormals (exploiting the "jitter" in

the initial rays to pick a more or less random normal from a bump map).

That's why I don't consider this an integral trait of a montecarlo extension to

POV-Ray.

> > The "diffuse job" could be fully covered by radiosity code, by just bypassing

> > the sample cache, using a low sample ray count and high recursion depth, and

> > some fairly small changes to a few internal parameters. That, plus another

>

> Yes, I think so. You probably don't even need that high a recursion depth, maybe

> 5 or so. And it's not so much recursion as it is iteration, right? You don't

> shoot [count] rays again for each bounce of a single path?

Well, if using the existing radiosity mechanism (just bypassing the sample

cache), it would actually be recursion indeed: The radiosity algorithm is

*designed* to shoot N rays per location.

(If I'm not mistaken, it is recursion anyway all throughout POV-Ray, whether it

is reflection or refraction (or diffusion, in which case the radiosity code may

add some recursive element); the only exceptions are straight rays through

(semi-) transparent objects. Maybe not a wise thing to do, but what the

heck...)

I guess it is the same with MCPov, too - after all, it allows you to specify the

number of rays to shoot per diffuse bounce as well. Which is not bad because it

reduces the need to trace the ray from the eye to (almost) here as often.
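
(To put rough numbers on the recursion-versus-iteration question, here is a small Python sketch. It assumes every diffuse hit spawns `count` new sample rays down to `depth` bounces and ignores the sample cache, reflection and refraction; the function name is made up for illustration.)

```python
def diffuse_rays_per_eye_ray(count, depth):
    """Total diffuse sample rays spawned by one eye ray.

    Level 1 shoots `count` rays, each of those shoots `count` more, and so on,
    so the total is count + count**2 + ... + count**depth.
    """
    return sum(count ** level for level in range(1, depth + 1))
```

With `count = 1` this is plain path tracing: 5 bounces cost 5 rays. With `count = 4` the same 5 bounces already cost 1364 rays per eye ray, which is why a low count matters once the cache is bypassed.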

>

>

> > thing that has already made its way onto the radiosity agenda: The radiosity

> > sample ray pattern would have to be able to use certain hints in the scene

> > about bright areas (MCPov's "portals"); and for monte-carlo tracing it would

> > have to be truly random.

>

> Could it just be any object with finish ambient > 0? There is also

> bi-directional path tracing and Metropolis Light Transport systems, but I think

> they are significantly more complicated to implement..

Bi-directional path tracing only works if you have point lights, or can

approximate the light sources with point lights - maybe by picking random

points on glowing objects' surfaces for each path. This can be done with

meshes, and some other primitives as well, because it is easy to pick a random

point on their surface with a uniform random distribution; but when it comes to

isosurfaces or some such, getting the random distribution right or modulating it

with the proper factor to compensate for non-uniform distribution will be

difficult at best.
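
(For the mesh case, a sketch of the usual approach in Python: pick a triangle with probability proportional to its area, then pick a uniformly distributed point inside it. The function names and the plain-tuple mesh format are assumptions for illustration, not POV-Ray internals.)

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3D triangle: half the length of the cross product of two edges."""
    ab = [b[i] - a[i] for i in range(3)]
    ac = [c[i] - a[i] for i in range(3)]
    cross = (
        ab[1] * ac[2] - ab[2] * ac[1],
        ab[2] * ac[0] - ab[0] * ac[2],
        ab[0] * ac[1] - ab[1] * ac[0],
    )
    return 0.5 * math.sqrt(sum(v * v for v in cross))


def random_point_on_mesh(triangles, rng):
    """Return a point uniformly distributed over the surface of a triangle mesh."""
    # Larger triangles must be chosen proportionally more often.
    areas = [triangle_area(*tri) for tri in triangles]
    a, b, c = rng.choices(triangles, weights=areas, k=1)[0]
    # The square root keeps the barycentric sample uniform over the triangle.
    s = math.sqrt(rng.random())
    r = rng.random()
    u, v, w = 1.0 - s, s * (1.0 - r), s * r
    return tuple(u * a[i] + v * b[i] + w * c[i] for i in range(3))
```

Nothing comparable exists for an isosurface, since there is no list of pieces with known areas to weight the choice by.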

What *can* be done is bi-directional path tracing using classic light sources.

This would in fact be the result if the classic diffuse lighting term were

left activated, and the specular highlighting "synchronized" with (diffuse)

reflection - or if photon mapping were used for the whole scene.

Of Metropolis Light Transport I know nothing.

> > Scattering media would remain a problem, unless one would go for full volumetric

> > monte-carlo scattering simulation. Which, for highly scattering media, might be

> > veeeeeery slow.

>

> Yes, but it would be *correct*, and the results would be unbeatable. It could be

> turned on/off though for people who don't want to wait weeks for a decent result

> ;)

Yep. I already mused that such a thing - integrated into POV-Ray - could provide

me with reference shots for the SSLT code, to verify that it's doing just as

well in less time ;)

Same goes for monte-carlo tracing in general, which would provide me with

reference shots for radiosity. MCPov is unsuited in this respect because it

cannot co-operate with conventional lighting.


"clipka" <nomail@nomail> wrote:

Right on all counts. Thanks for the clarifications; I think I had

over-simplified it in my head and lost some in the translation but I think we

agree.

> Of Metropolis Light Transport I know nothing.

Basically it is an algorithm which uses path tracing (or bi-di path tracing) as

a basis, and once it has found a path it mutates or tweaks it slightly looking

for other, similar paths. In this way it can improve indirect light such as in

that scene with the door almost fully closed. I think it's meant to be good for

indoor scenes which are mostly lit indirectly, or to improve caustics.

There are better explanations around, which I suggest you read if you can,

'cause I haven't done it justice :)
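
(A toy illustration of the "mutate a path you already found" idea, in Python. This is only the Metropolis acceptance rule applied to a made-up one-dimensional `brightness` function, not a light transport implementation: a "path" here is just a number between 0 and 1, and nearly all the light arrives through a narrow gap around 0.8, a bit like the nearly closed door.)

```python
import math
import random


def brightness(x):
    """Toy path contribution: a narrow peak of light around x = 0.8."""
    if not 0.0 <= x <= 1.0:
        return 0.0
    return math.exp(-((x - 0.8) / 0.05) ** 2)


def metropolis_samples(steps, rng, start=0.5, max_step=0.05):
    """Random walk that spends time in each region in proportion to its brightness."""
    x = start
    fx = brightness(x)
    samples = []
    for _ in range(steps):
        # Mutate the current "path" slightly.
        candidate = x + rng.uniform(-max_step, max_step)
        f_candidate = brightness(candidate)
        # Accept with probability min(1, f_candidate / fx).
        if f_candidate >= fx or rng.random() * fx < f_candidate:
            x, fx = candidate, f_candidate
        samples.append(x)
    return samples
```

Once the walk has stumbled into the bright region it stays near it, so most samples land where they contribute, whereas independent random samples would mostly miss the gap.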

> Same goes for monte-carlo tracing in general, which would provide me with

> reference shots for radiosity. MCPov is unsuited in this respect because it

> cannot co-operate with conventional lighting.

It could be used for one half of a bi-directional path tracer, with the

conventional lights being used for the forward-paths like you mentioned before?

Or perhaps it could be modified to use a point light source as a portal or some

such. In all likelihood if POV were serious about becoming a path-tracer it

might have to be re-imagined but I think it's at least a good beginning.


From: Kyle

Subject: Re: LEGO 7804 Lizard - 3.7 beta 32 with Radiosity

Date: 14 Apr 2009 15:33:18

Message: <49e4e4fe@news.povray.org>


That lizard cracks me up! I like it.


"clipka" <nomail@nomail> wrote:

> "sooperFoX" <bon### [at] gmail com> wrote:

> > > Well, except for one thing: That "render until I like it" feature with interim

> > > result output would currently be an issue. Nothing that couldn't be solved

> > > though. For starters, high-quality anti-aliasing settings might do instead of

> > > rendering the shot over and over again.

> >

> > As I understand it, the idea of rendering the shot over and over again is the

> > only way that it *could* work. Each pixel of a 'pass' follows a diffuse bounce

> > in a 'random' direction (which could have a small bias, eg portals) and the

> > more times you repeat those random passes the more 'coverage' of the total

> > solution you get. Kind of like having an infinite radiosity count, spread out

> > over time. That's why it starts out so noisy...

>

> No, *basically* the only important ingredient for montecarlo raytracing to work

> is that each *pixel* is sampled over and over again. That this is done in

> multiple passes is "only" a matter of convenience. A single-pass "render each

> pixel 1000 times" rule, which madly high non-adaptive anti-aliasing settings

> would roughly be equivalent to, would qualify just as well.

>

> I do agree of course that doing multiple passes, giving each pixel just one shot

> per pass, and saving an interim result image after each pass, is much more

> convenient than doing it all in one pass, giving each pixel 1000 shots before

> proceeding to the next. No argument here.

This is more or less what my stochastic render rig does currently in POV. If I

render 400 passes (which means full frames via animation), then I'm sampling

each pixel 400 times. I combine the 400 HDR images afterwards. Or after 40

passes to get a feel for how it's going. And if 400 passes still looks a little

noisy, then I fire up another render to get some more frames.

Stuff I currently can do this way:

- Blurred reflection, blurred refraction (a tiny bit of blurring on everything

adds a lot to realism)

- Anti-aliasing

- Depth of Field (with custom bokeh maps)

- High quality Media effects (by doing randomised low quality media each pass)

- Distributed light sources (i.e. studio bank lighting)

- High density lightdomes (up to 1000 lights)

- Soft shadowing (by using a different jitter 2x2 area light each pass)

- Fake SSS (via a low quality jittered FastSS() on each pass)

Things I haven't done (or can't do) yet:

- Radiosity - haven't tried it yet (but I could do a pre-pass and use

save/load)

- Photons (same as radiosity above)

- Dispersion (filter greater than 1 just plain doesn't work in POV, even when I

patch the obvious underflow condition in the code)

- SSLT! (There's no ability to jitter for each pass)

Most of the randomisation is driven by a single

    #declare stochastic_seed = seed(frame_number);

at the start of the code.

e.g. Blurry reflections are simply micronormals randomized on the

stochastic_seed

    // tiny bumps, shifted by a fresh random offset every frame
    normal {
        bumps
        scale 0.0001
        translate <rand(stochastic_seed), rand(stochastic_seed), rand(stochastic_seed)>
    }

or averaging in the "real" normal

    // same micronormal, averaged 1:1 with the surface's own pattern
    normal {
        average
        normal_map {
            [ 1 crackle ]
            [ 1
                bumps
                scale 0.0001
                translate <rand(stochastic_seed), rand(stochastic_seed), rand(stochastic_seed)>
            ]
        }
    }

I've also started using a lot of Halton sequences based on the frame_number, as

this seems even better than pure randomisation for an evenly distributed series

of samples.
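
(For readers who haven't met them: a Halton value is the index written in some base with its digits mirrored around the decimal point. The sketch below is Python, not the SDL macro used in the rig, and the name `halton` is just illustrative.)

```python
def halton(index, base):
    """Radical inverse of `index` in `base`: a value in [0, 1)."""
    result = 0.0
    fraction = 1.0 / base
    i = index
    while i > 0:
        result += fraction * (i % base)  # lowest digit becomes the most significant
        i //= base
        fraction /= base
    return result


def jitter_for_frame(frame_number):
    """2D sample offset for a frame, using bases 2 and 3 for the two axes."""
    return halton(frame_number, 2), halton(frame_number, 3)
```

In base 2 the first values are 0.5, 0.25, 0.75, 0.125, ..., so any run of consecutive frames covers the interval fairly evenly, which a plain random sequence does not guarantee.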

My 35mm camera macros, that got posted here a month or so ago, have the

Anti-aliasing and DoF implementation of this in them.

The thing I'd really like to do is to identify pixels that haven't stabilized

after N passes, and only render those some more. It's a waste to render all the

pixels in a frame when most of them have arrived at their final value +/- some

minute percentage. It's the problem pixels that need more work.
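
(One possible way to find the problem pixels, sketched in Python under the assumption that the per-pass values of each pixel are available to an external tool: keep a running mean and variance per pixel and flag those whose standard error is still above a tolerance. The class, the function and the tolerance value are all made up for illustration.)

```python
import math


class PixelStats:
    """Running mean and variance of one pixel's samples (Welford's method)."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # sum of squared deviations from the running mean

    def add(self, value):
        self.n += 1
        delta = value - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (value - self.mean)

    def standard_error(self):
        """Estimated uncertainty of the mean; infinite until two samples exist."""
        if self.n < 2:
            return math.inf
        variance = self.m2 / (self.n - 1)
        return math.sqrt(variance / self.n)


def unstable_pixels(stats, tolerance):
    """Indices of pixels whose mean has not yet settled to within `tolerance`."""
    return [i for i, s in enumerate(stats) if s.standard_error() > tolerance]
```

Only the flagged pixels would then need further passes; how to make POV-Ray render just those is a separate problem.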

> > > The "diffuse job" could be fully covered by radiosity code, by just bypassing

> > > the sample cache, using a low sample ray count and high recursion depth, and

> > > some fairly small changes to a few internal parameters. That, plus another

> >

> > Yes, I think so. You probably don't even need that high a recursion depth, maybe

> > 5 or so. And it's not so much recursion as it is iteration, right? You don't

> > shoot [count] rays again for each bounce of a single path?

>

> Well, if using the existing radiosity mechanism (just bypassing the sample

> cache), it would actually be recursion indeed: The radiosity algorithm is

> *designed* to shoot N rays per location.

Are they randomised, or would they be the same each pass?

> Of Metropolis Light Transport I know nothing.

It's just a statistical method applied to bi-directional path tracing to allow

speed-ups while preserving the unbiased results.

Cheers,

Edouard.
