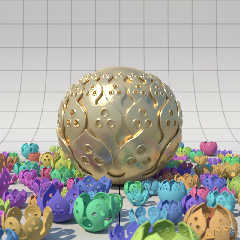

Anti-aliasing, area light + blurred reflection + depth of field + photons

+ radiosity + lots of 4th order polynomials (tori) make for long render

times.

To reduce the slowdown caused by the object itself, I clipped (intersected) some

of the tori using boxes instead of planes. The revised code was posted in

p.b.s-f on September 23. I also experimented with slicing up the base of

the object, but that led to equivocal results, so that is not included in

the revised code.

I did the photon mapping and radiosity in separate preliminary passes,

then added the anti-aliasing, area light, blurred reflection and DOF for

the final pass. Alas, after 33 hours, I looked at how much had been

rendered, and estimated that it would take about 3 weeks to complete.

(Later testing revealed that the bottom of the "sphere" renders more

slowly than the top, so 3 weeks was likely an underestimate!) A drastic

change of plan was in order.

After testing various options, I decided on 19 third-pass renders, each

without DOF or micronormal averaging, but with the camera and the

micronormal jittered for each run. The 19 frames were then averaged.

Here's a lesson learned: When averaging several frames in which an object

has strong metallic highlights, it is best to use one of the HDR formats

for the frames. With traditional formats, hyper-white highlights get

clipped before averaging (analogous to POV 3.5 anti-aliasing), resulting

in an anemic final highlight.
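
The effect can be illustrated with a small Python sketch. It is not the tool used to combine the frames; the sample values, the `clip` helper and the 1.0 white point are all hypothetical, chosen only to show why clipping each frame before averaging dims a highlight.

```python
# Hypothetical linear brightness of one pixel in four jittered frames.
# 1.0 is display white; the first frame catches a hyper-white highlight.
samples = [4.0, 0.25, 0.125, 0.125]


def average(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


def clip(value, white=1.0):
    """Clamp a value to the displayable range [0, white]."""
    return min(max(value, 0.0), white)


# Traditional formats: every frame is clipped when saved, then averaged.
ldr_average = average([clip(v) for v in samples])

# HDR formats: frames keep their full range, and clipping happens
# only once, after averaging, for display.
hdr_average = clip(average(samples))

print("clipped before averaging:", ldr_average)
print("clipped after averaging: ", hdr_average)
```

With these made-up numbers the clip-first average comes out at 0.375, while the HDR average stays at full white (1.0).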

sams_shay.20131009.jpg is my submission to CGSphere. The render time was

21h 19m 18s over 19 frames, plus 25 seconds to combine them.

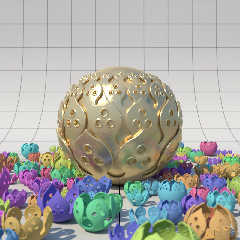

sams_shay.single.jpg is one of my test runs, with DOF but no micronormal

averaging. Surprisingly, there isn't much difference in appearance. The

render time was 20h 25m 15s.

In addition to the above render times, the photons pass took 1h 24m 21s

and the radiosity pass took 2h 7m 26s. All renders were done on 8 cores

with nice -n 19.
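
For anyone who wants the totals, the arithmetic can be sketched in Python as follows. The only inputs are the durations quoted in this post; the variable names are illustrative.

```python
from datetime import timedelta

# Durations quoted in the post.
frames = timedelta(hours=21, minutes=19, seconds=18)     # 19 jittered frames
combine = timedelta(seconds=25)                          # averaging the frames
photons = timedelta(hours=1, minutes=24, seconds=21)     # photons pass
radiosity = timedelta(hours=2, minutes=7, seconds=26)    # radiosity pass
single_dof = timedelta(hours=20, minutes=25, seconds=15) # single DOF test run

# Total for the submitted image, including the preliminary passes.
total = frames + combine + photons + radiosity

# Average time per jittered frame.
per_frame = frames / 19

# Extra time of the 19-frame approach over the single DOF run (final pass only).
extra = (frames + combine) - single_dof

print("total:", total)
print("per frame:", per_frame)
print("extra over single DOF run:", extra)
```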

There is something that puzzles me, which perhaps CLipka could clear up.

For DOF I used an aperture which translates to 7 mm. For the 19 frames I

initially jittered the camera within a 3.5 mm radius, but that ended up

way blurrier than the DOF run! I eventually cut the jitter radius by half

(and also moved the focal point closer to the surface of the "sphere").

Why does DOF give different results than manually jittering the camera

within the same aperture?

Credits:

- CGSphere environment by Trevor G. Quayle.

- Blurred reflection by RC3Metal.

- Sky (apart from the clouds) by Scott Boham, although it doesn't show

well with the blurred reflection.

- Inspiration, of course, by Samuel T. Benge and Shay.

--

<Insert witty .sig here>


On 10.10.2013 14:52, Cousin Ricky wrote:

> There is something that puzzles me, which perhaps CLipka could clear

> up. For DOF I used an aperture which translates to 7 mm. For the 19

> frames I initially jittered the camera within a 3.5 mm radius, but that

> ended up way blurrier than the DOF run! I eventually cut the jitter

> radius by half (and also moved the focal point closer to the surface of

> the "sphere"). Why does DOF give different results than manually

> jittering the camera within the same aperture?

That's because...

... um...

... damn, I haven't the slightest clue.


On 10.11.2013 22:02, clipka wrote:

> On 10.10.2013 14:52, Cousin Ricky wrote:

>

>> There is something that puzzles me, which perhaps CLipka could clear

>> up. For DOF I used an aperture which translates to 7 mm. For the 19

>> frames I initially jittered the camera within a 3.5 mm radius, but that

>> ended up way blurrier than the DOF run! I eventually cut the jitter

>> radius by half (and also moved the focal point closer to the surface of

>> the "sphere"). Why does DOF give different results than manually

>> jittering the camera within the same aperture?

>

> That's because...

>

> .... um...

>

> .... damn, I haven't the slightest clue.

There does indeed seem to be a factor of 0.5 buried /somewhere/ in the

code that I never noticed.

Thus, I have been wrong all the time: The aperture value doesn't give

the diameter of the camera lens. Instead, it is an entirely artificial

value that /happens/ to be /twice/ the diameter of the lens.

(That still doesn't mean that jittering the camera position to simulate

focal blur will get you exactly the same results; one effect that is to

be considered especially when using strong focal blur is that jittering

the camera also tilts the image plane, while the jittering used in the

focal blur code leaves the image plane unchanged. But that shouldn't be

relevant for the amount of focal blur you used.)
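
As a rough illustration of that factor, here is a Python sketch that picks camera jitter offsets for a given aperture value. It assumes that the effective lens diameter is half the aperture value (so the jitter radius is a quarter of it), and that offsets should be uniform over the lens disc; neither the helper names nor the uniform-disc sampling come from the POV-Ray source.

```python
import math
import random


def jitter_radius(aperture):
    """Jitter radius, assuming effective lens diameter = aperture / 2."""
    return aperture / 4.0


def sample_disc(radius, rng):
    """Return an (x, y) offset drawn uniformly from a disc of the given radius."""
    # The square root keeps the samples uniform in area rather than
    # bunching them towards the centre of the disc.
    r = radius * math.sqrt(rng.random())
    theta = 2.0 * math.pi * rng.random()
    return (r * math.cos(theta), r * math.sin(theta))


rng = random.Random(2013)    # fixed seed so the offsets are repeatable
radius = jitter_radius(7.0)  # aperture equivalent to 7 mm, as in the question
offsets = [sample_disc(radius, rng) for _ in range(19)]  # one offset per frame

for x, y in offsets:
    print(f"{x:+.3f} mm, {y:+.3f} mm")
```

For the 7 mm aperture this gives a jitter radius of 1.75 mm, which matches the halved radius that ended up working in the 19-frame render.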
