From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 21 May 2018 10:39:20

Message: <5b02da18$1@news.povray.org>


On 05/20/2018 05:09 PM, clipka wrote:

> On 20.05.2018 at 18:10, William F Pokorny wrote:

>

>> My position is still the difference isn't large enough to be of concern,

>> but if so, maybe we should more strongly consider going to >0.0 or some

>> very small values for all shapes. Doing it only in blob.cpp doesn't make

>> sense to me.

>

> I guess the reason I changed it only for blobs was because there I

> identified it as a problem - and I might not have been familiar enough

> with the shapes stuff to know that the other shapes have a similar

> mechanism.

>

> After all, why would one expect such a mechanism if there's the "global"

> MIN_ISECT_DEPTH?

Understood. Only recently have the root filtering and bounding mechanisms been coming into some focus for me, & I'm certain I still don't understand it all. There are, too, the two higher-level bounding mechanisms, which are tangled into the root/intersection handling. I'm starting to get the brain-itch that the current bounding is not always optimal for 'root finding.'

>

> Technically, I'm pretty sure the mechanism should be disabled for SSLT

> rays in all shapes.

>

OK. Agree from what I see. Work toward >0.0 I guess - though I have this

feeling there is likely an effective good performance 'limit' ahead of

>0.0.

>

>> Aside: MIN_ISECT_DEPTH doesn't exist before 3.7. Is its creation

>> related to the SSLT implementation?

>

> No, it was already there before I joined the team. The only relation to

> SSLT is that it gets in the way there.

>

> My best guess would be that someone tried to pull the DEPTH_TOLERANCE

> mechanism out of all the shapes, and stopped with the work half-finished.

>

>

> BTW, one thing that has been bugging me all along about the

> DEPTH_TOLERANCE and MIN_ISECT_DEPTH mechanisms (and other near-zero

> tests in POV-Ray) is that they're using absolute values, rather than

> adapting to the overall scale of stuff. That should be possible, right?

>

Sure, but... Not presently in POV-Ray is my 'anytime soon' answer. Too

many magic values or defined values like EPSILON used for differing -or

effectively differing - purposes. Remember, for example, one of the

recent changes I made to the regula-falsi method used within polysolve()

was to use universally the ray value domain instead of a mix of

polynomial values and ray values for relative & absolute error stops.

Root / intersection work is sometimes done in a normalized space(1) and

sometimes not, etc. The pool of issues, questions and possibilities in

which I'm mentally drowning is already deep - and I'm far from seeing to

the bottom. We can often scale scenes up for better results because POV-Ray code has long been numerically biased to 0+ (1e-3 to 1e7) (2). Near term, I think we should work toward something zero centered (1e-7 to 1e7).

Would like to first get to where we've got a more accurate polysolve()

against which any changes to other solvers / specialized shape solvers

can be tested. After which we can perhaps work to get all the solvers &

solver variant code buried in shape code to double / 'DBL' accuracy.

Underneath everything solver / intersection wise is double accuracy

which is only 15 decimal digits. The best double value step

(DBL_EPSILON) off 1.0 is 2.22045e-16. Plus we use fastmath which

degrades that accuracy.

Aside: I coded up a 128bit polysolve() but it was very slow (+10x) and

the compiler feature doesn't look to be supported broadly enough as a

standard to be part of POV-Ray. A near term interesting idea to me - one

Dick Balaska, I think, touched upon recently in another thread - is

coding up a version of polysolve() we'd ship using 'long double'. Long

double is guaranteed at only 64bits (double), but in practice today

folks would mostly get 80bits over 64bits - and sometimes more. It would

need to be an option to the default 64 bit double as it would certainly

be slower - but being generally hardware backed, performance should be

<< the +10x degrade I see for 128bits on my Intel processor. Would be -

for most - an improvement to 18 decimal digits and an epsilon step of

1.0842e-19 off 1.0. Worthwhile...?
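The digit counts and epsilon steps quoted above can be read straight from the standard library. A minimal sketch follows; the helper names `decimalDigits` and `epsilonStep` are made up for illustration and are not POV-Ray code.

```cpp
#include <limits>

// Decimal digits that survive a round trip through type T.
template <typename T>
constexpr int decimalDigits() { return std::numeric_limits<T>::digits10; }

// Step from 1.0 to the next representable value of type T.
template <typename T>
constexpr T epsilonStep() { return std::numeric_limits<T>::epsilon(); }
```

For `double` these give 15 digits and 2.22045e-16. For `long double` the result depends on the platform: with an 80-bit x87 format it is 18 digits and about 1.0842e-19, while some compilers make `long double` the same as `double`.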

Bill P.

(1) Normalizing & the inverse, transforms & the inverse all degrade

accuracy too.

(2) From the days of single floats - where the scale up would have

worked less well at hiding numerical issues (ie coincident surface

noise) because the non-normalized / global accuracy was then something

like 6 decimal digits or 1e-3 to + 1e3.


From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 21 May 2018 16:35:08

Message: <5b032d7c@news.povray.org>


On 21.05.2018 at 16:39, William F Pokorny wrote:

> Underneath everything solver / intersection wise is double accuracy

> which is only 15 decimal digits. The best double value step

> (DBL_EPSILON) off 1.0 is 2.22045e-16. Plus we use fastmath which

> degrades that accuracy.

>

> Aside: I coded up a 128bit polysolve() but it was very slow (+10x) and

> the compiler feature doesn't look to be supported broadly enough as a

> standard to be part of POV-Ray. A near term interesting idea to me - one

> Dick Balaska, I think, touched upon recently in another thread - is

> coding up a version of polysolve() we'd ship using 'long double'. Long

> double is guaranteed at only 64bits (double), but in practice today

> folks would mostly get 80bits over 64bits - and sometimes more. It would

> need to be an option to the default 64 bit double as it would certainly

> be slower - but being generally hardware backed, performance should be

> << the +10x degrade I see for 128bits on my Intel processor. Would be -

> for most - an improvement to 18 decimal digits and an epsilon step of

> 1.0842e-19 off 1.0. Worthwhile...?

I think that's a path worthwhile exploring further. My suggestion would

be to use a macro - say "PRECISION_FLOAT" - to define a type to be used

in places where precision matters even more than usual, so that we can

get binaries with different performance characteristics out of the same

source code: That macro could simply be defined as `double` for the

status quo, `long double` for a portable attempt at achieving more

precision without losing too much speed, a compiler-specific type such

as `__float128` on gcc, or even a type provided by a 3rd party library

such as GMP or MPFR.

> (2) From the days of single floats - where the scale up would have

> worked less well at hiding numerical issues (ie coincident surface

> noise) because the non-normalized / global accuracy was then something

> like 6 decimal digits or 1e-3 to + 1e3.

POV-Ray has never used single-precision floats. I doubt even DKBTrace

ever did: At least as of version 2.01, even on the Atari it already used

double-precision floats.

As a matter of fact, the IBM-PC configuration for DKBTrace 2.01 (*)

actually defined DBL as `long double` when a coprocessor was present.

DKBTrace 2.12 (**) additionally set EPSILON to 1.0e-15 instead of 1.0e-5

in that case (provided the compiler used wasn't Turbo-C, presumably

because it would define `long double` as 64-bit). This was carried on at

least until POV-Ray v2.2, but must have gotten lost between v2.2

for DOS and v3.6 for Windows. (My guess would be that the Windows

version never had it.)

(* See here:

https://groups.google.com/forum/#!topic/comp.sources.amiga/icNQTp_txHE)

(** See here: http://cd.textfiles.com/somuch/smsharew/CPROG/)


From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 21 May 2018 17:13:30

Message: <5b03367a$1@news.povray.org>


On 21.05.2018 at 22:35, clipka wrote:

> I think that's a path worthwhile exploring further. My suggestion would

> be to use a macro - say "PRECISION_FLOAT" - to define a type to be used

Make that `PRECISE_FLOAT`; fits better with a series of similar types

already in use, `PreciseColourChannel`, `PreciseRGBColour` etc., which

are used for colour-ish data where `float` is not enough, most notably

the SSLT code. (Obviously, there the data type of choice is `double`,

and does suffice.)


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 22 May 2018 07:37:01

Message: <5b0400dd@news.povray.org>


On 05/21/2018 04:35 PM, clipka wrote:

> On 21.05.2018 at 16:39, William F Pokorny wrote:

>

> POV-Ray has never used single-precision floats.

Taking you to mean never in the 'solvers'.

> I doubt even DKBTrace

> ever did: At least as of version 2.01, even on the Atari it already used

> double-precision floats.

>

> As a matter of fact, the IBM-PC configuration for DKBTrace 2.01 (*)

> actually defined DBL as `long double` when a coprocessor was present.

> DKBTrace 2.12 (**) additionally set EPSILON to 1.0e-15 instead of 1.0e-5

> in that case (provided the compiler used wasn't Turbo-C, presumably

> because it would define `long double` as 64-bit). This was carried on at

> least until POV-Ray v2.2, but must have gotten lost between v2.2

> for DOS and v3.6 for Windows. (My guess would be that the Windows

> version never had it.)

>

> (* See here:

> https://groups.google.com/forum/#!topic/comp.sources.amiga/icNQTp_txHE)

>

> (** See here: http://cd.textfiles.com/somuch/smsharew/CPROG/)

>

Thanks. I've never dug back that far. True too, I was speculating about what many might actually have been running with early POV-Ray. I took the "#define DBL double" present as early as v1.0 to be a mechanism by which those without FPUs, and with the small bus sizes of the time, were running with "#define DBL float" instead. Not that I was running POV-Ray, or even aware of POV-Ray, that early.

Don't remember exactly, but I didn't have a personal machine with an

actual FPU until the late 90s. First 64 bit machine early 2000s I guess.

My very first personally owned machine was an 8bit z80 based custom

built thing...

Bill P.


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 22 May 2018 07:45:30

Message: <5b0402da$1@news.povray.org>


On 05/21/2018 05:13 PM, clipka wrote:

> On 21.05.2018 at 22:35, clipka wrote:

>

>> I think that's a path worthwhile exploring further. My suggestion would

>> be to use a macro - say "PRECISION_FLOAT" - to define a type to be used

>

> Make that `PRECISE_FLOAT`; fits better with a series of similar types

> already in use, `PreciseColourChannel`, `PreciseRGBColour` etc., which

> are used for colour-ish data where `float` is not enough, most notably

> the SSLT code. (Obviously, there the data type of choice is `double`,

> and does suffice.)

>

OK. Will go for PRECISE_FLOAT and plan to define it in

./base/configbase.h along with DBL and SNGL.

Further - for now - I plan to use it only in polysolve() and its

sub-functions with conversions from and to DBL on entry and exit as I

implemented the 128bit polysolve().
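A minimal sketch of what such a mechanism could look like, under stated assumptions: `DBL` is taken to be `double`, the default for `PRECISE_FLOAT` is chosen arbitrarily here, and `evalPolyPrecise` is a hypothetical helper written only to show the convert-on-entry, convert-on-exit pattern. It is not the code in the branch.

```cpp
#include <cstddef>

typedef double DBL;                 // assumption: DBL is double, the status quo

#ifndef PRECISE_FLOAT
#define PRECISE_FLOAT long double   // could instead be double, __float128 (g++), ...
#endif

// Hypothetical helper, not POV-Ray code: evaluate
// c[0]*x^n + c[1]*x^(n-1) + ... + c[n] by Horner's rule, converting from
// DBL on entry, working in PRECISE_FLOAT, and converting back on exit.
DBL evalPolyPrecise(const DBL* c, std::size_t n, DBL x)
{
    PRECISE_FLOAT px  = static_cast<PRECISE_FLOAT>(x);
    PRECISE_FLOAT acc = static_cast<PRECISE_FLOAT>(c[0]);
    for (std::size_t i = 1; i <= n; ++i)
        acc = acc * px + static_cast<PRECISE_FLOAT>(c[i]);
    return static_cast<DBL>(acc);
}
```

Building with something like `-DPRECISE_FLOAT=double` would then give the status quo from the same source, which is the point of the macro approach clipka suggested.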

Bill P.


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 27 May 2018 12:19:14

Message: <5b0ada82@news.povray.org>


On 03/30/2018 08:21 AM, William F Pokorny wrote:

>...

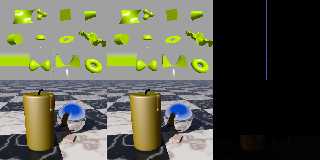

Sturm / polysolve() investigation. Coefficients wrongly seen as zeroes.

In this chapter we venture up into the Solve_Polynomial() function, which calls polysolve(), solve_quadratic(), solve_cubic() and solve_quartic().

Consider this code, added back in 1994 and part of POV-Ray v3.0.

    /*
     * Determine the "real" order of the polynomial, i.e.
     * eliminate small leading coefficients.
     */
    i = 0;
    while ((i < n) && (fabs(c0[i]) < SMALL_ENOUGH))
    {
        i++;
    }
    n -= i;
    c = &c0[i];

My last commit deletes it! Having so large a SMALL_ENOUGH (1e-10), or any fixed value, is a bad idea(1) given that equation order varies, as does distance along the ray, and the equations are not normalized.

Perhaps reason enough: when any even order polynomial with no roots is reduced to an odd order polynomial, it turns into a polynomial with roots. All odd order polynomials have at least one real root.
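To make the even-to-odd problem concrete, here is a small sketch. The stripping loop is the one quoted above; the quartic coefficients and the helper names (`strippedLeading`, `evalPoly`, `kQuartic`) are invented for illustration and do not come from any POV-Ray scene.

```cpp
#include <cmath>

const double SMALL_ENOUGH = 1e-10;   // value quoted in the post

// Same loop as the removed code: returns how many leading coefficients
// of c0[0..n] would be stripped.
int strippedLeading(const double* c0, int n)
{
    int i = 0;
    while ((i < n) && (std::fabs(c0[i]) < SMALL_ENOUGH))
        i++;
    return i;
}

// Horner evaluation of c[0]*x^n + ... + c[n].
double evalPoly(const double* c, int n, double x)
{
    double acc = c[0];
    for (int i = 1; i <= n; ++i)
        acc = acc * x + c[i];
    return acc;
}

// Illustrative quartic: x^2*(1e-11*x^2 + 1e-6*x + 1) + 1.
// The inner quadratic has a negative discriminant (1e-12 - 4e-11), so it is
// positive everywhere and the quartic has no real roots.
const double kQuartic[5] = {1e-11, 1e-6, 1.0, 0.0, 1.0};
```

Here `strippedLeading(kQuartic, 4)` drops the 1e-11 coefficient, leaving the cubic 1e-6*x^3 + x^2 + 1. That cubic is negative at x = -2e6 and positive at x = 0, so it has a real root in between, although the original quartic has none.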

In the attached image we have two examples - neither using sturm /

polysolve - where the code above goes bad.

In the top row we've got the shipped primativ.pov scene and specifically

a poly shape which is not explicitly bounded. It creates 4th order

equations so should use solve_quartic(). However the code up top

sometimes strips the leading coefficient and in this case we go from a

quartic which has no roots to an odd third order cubic which has one.

This is the vertical bar artifact in the upper left.

In the bottom row we have the shipped subsurface.pov scene. The differences on the bottom right (at 4x actual difference values) happen again where the code at top drops a single leading coefficient. In this case, for pixels and rays where I've examined actual roots, subsurface related rays go from 4th order equations with >=2 roots, with one of those roots inside the blob interval being kept, to a 3rd order equation with a single root outside the active blob interval. In other words, we are missing subsurface roots/intersections where we see differences.

Notes:

- The subsurface effect here is visible with no difference multiplier

and many times larger than the returned root threshold differences

recently discussed.

- Because the ray-surface equations are based upon scene geometry the

'zeroes-not-zeroes-dropped' issue here is often scene-object or axis

aligned in some fashion. I'm reminded of a rounded box canyon subsurface

scene Robert McGregor posted where I noticed one box face having a

strange look. Someone joked it was perhaps a door to Narnia or similar

after nobody had an idea what caused the effect. I'm thinking perhaps

the code above - or the still present order reduction by ratio code -

played a part...

NOTE! This update corrupts some previous scenes while fixing some

previous bad ones. Some planned but not yet defined or implemented

equation tuning on ray DX,DY,DZ values is to come.

Updates at:

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

Performance and specific commit details below.

Bill P.

(1) - Yes, this strongly suggests order reduction based upon the largest

two coefficients is also a bad idea. However leaving that feature in

place for now as some primitives require this type of reduction to

function at all. Perhaps in these cases push the reductions out of the

solvers and into the shape code.

Performance info:

------------ /usr/bin/time povray -j -wt1 -fn -p -d -c lemonSturm.pov

0) master 30.22user 0.04system 0:30.89elapsed

16) 21387e2 14.88user 0.02system 0:15.51elapsed -50.76%

17) 80860cd --- NA --- (1e-15 constant calc fix)

(PRECISE_FLOAT)

18) aa6a0a6 --- Bad Result(a) --- (float)

14.95user 0.02system 0:15.56elapsed +0.47% (double)

25.12user 0.02system 0:25.73elapsed +68.82% (long double)

282.97user 0.13system 4:44.13elapsed +1801.68% (__float128)

19) 4e16623 14.94user 0.01system 0:15.56elapsed ---- -50.56%

(a) - Not exhaustively evaluated, but some shapes render OK at 'float'

though those which did were slower (+150% ballpark). Unsure why so slow,

but don't plan to dig further. Sturm / polysolve is for those looking to

be more accurate, not less.

17) Correcting two double 1e-15 constant calculations.

In recent commits I started setting two 1e-15 values with the calculation:

    = 1/std::numeric_limits<DBL>::digits10;

when I meant to code:

    = 1/pow(10,std::numeric_limits<DBL>::digits10);

Both forms are bad practice in not being specific as to the types of the explicit values. The first is an integer division that returns 0, which is later cast to double, and I got away with it in all but my full set of test cases. Corrected:

    = (DBL)1.0/pow((DBL)10.0,std::numeric_limits<DBL>::digits10);
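The difference between the first and the corrected form can be checked in isolation. A minimal sketch, assuming `DBL` is `double`; the constant names `kWrong` and `kFixed` are made up for this example.

```cpp
#include <cmath>
#include <limits>

typedef double DBL;   // assumption: DBL is double

// Integer division: 1 / 15 == 0, and only then converted to DBL.
const DBL kWrong = 1 / std::numeric_limits<DBL>::digits10;

// Typed floating-point form, as in the corrected line: 1.0 / 10^15.
const DBL kFixed = (DBL)1.0 / std::pow((DBL)10.0, std::numeric_limits<DBL>::digits10);
```

`kWrong` ends up as exactly 0.0, which silently turns a small tolerance into no tolerance at all, while `kFixed` is the intended value of about 1e-15.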

18) Implementing suggested PRECISE_FLOAT macro mechanism for polysolve().

Primarily enabling 'long double' support for the polysolve() / 'sturm' solver, given 'long double' is part of the C++11 standard and gets most users 80 bits or more as opposed to 64. Further, the additional bits are almost always hardware backed, making for reasonable performance.

With GNU g++ 64 bit environments '__float128' is a valid setting with no additional library required - though it's very slow. Other extended accuracy floats such as 'double double' are enabled via the PRECISE_ mechanisms, but they involve additional libraries and settings. See code.

Note! With this update the polysolve sturm chain is no longer pruned

with a default 1e-10 value, but rather a value appropriate for the

floating point type. Means better accuracy for some previously bad root

results and less accuracy for others. Coming updates will better tune

the chain equation pruning.

19) Removing leading, near zero coefficient reduction in Solve_Polynomial().

Leading coefficients of < SMALL_ENOUGH (1e-10) were being dropped

reducing the order of the incoming polynomial when the near zero values

had meaning with respect to roots. Led to both false roots and missed roots.


Attachments:

Download 'notzeroesdropped.png' (326 KB)


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 12 Jul 2018 07:34:56

Message: <5b473ce0$1@news.povray.org>


On 03/30/2018 08:21 AM, William F Pokorny wrote:

> ....

Sturm / polysolve() investigation. Other solvers. Root polishing.

I've continued to look at the root solvers over the past month. I'm at a good place to write another post about findings and changes.

A couple preliminaries before the main topic.

First, while most results are better, the polynomialsolverAccuracy

branch still lacks planned updates. For example, scenes with lathes and

sphere_sweeps using orthographic cameras currently render with more

artifacts, not less.

Second, this set of commits updates the solve_quadratic function in a

way both more accurate and faster.

---

The main topic is root finding tolerance and scaling.

All the solvers find roots / surface intersections to some tolerance. They do this in a coordinate space which has been normalized with respect to all scene transforms. Additionally, objects like blobs are internally normalized to internal 'unit' coordinate spaces.

Suppose in the solver coordinate space a ray/surface equation has a root essentially at the ray origin - at distance 0.0 - as happens with self shadowing rays. All solvers, due to their tolerances, have the potential to allow such near-zero roots to drift into the >0.0 value space as ray-surface intersections. This numerical reality is why objects filter roots to a minimum returned intersection depth larger than zero(1).

Further, this minimum returned intersection depth must account for the translation of every root and its tolerance back into the original coordinate space. A wrongly positive 1e-8 root in solver space is a much more positive 1e-4 root after a scale up by 10000. The solvers each have different tolerances. There are many shape custom 'solvers' in use beyond those I've been working upon, and differing internal normalizations. This is why we've got many differing returned depth values, and why blobs have long used a minimum returned intersection depth of 1e-2 while a sphere, for example, uses 1e-6.
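The arithmetic in that paragraph can be written out as a tiny check. This is a deliberately simplified model, assuming the drifted root is simply multiplied by the scene scale and then compared against the shape's minimum depth; `survivesDepthFilter` is a hypothetical name, not a POV-Ray function.

```cpp
// Hypothetical helper: does a root that drifted positive in solver space
// survive the shape's minimum-depth filter once scaled to global space?
bool survivesDepthFilter(double solverRoot, double scale, double minDepth)
{
    return solverRoot * scale > minDepth;
}
```

With the numbers from the text, a 1e-8 drift scaled by 10000 becomes about 1e-4. That passes a 1e-6 filter but not a 1e-2 one, and at 1x scale the same drift is removed by either filter.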

It's the case that even blob's large 1e-2 filtering value is inadequate

given solve_quartic()'s tolerance is relatively large. Continuing to use

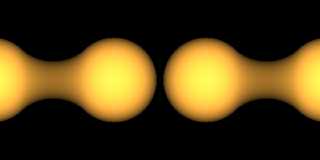

Gail Shaw's blob scene from 2005 to demonstrate, the attached image was

rendered with the current 3.8 master branch without sturm - so using

solve_quartic(). The original scene render is on the left. On the right

is the same scene scaled up by 10000. The vertical bars showing up on

the right happen due slightly ~=0.0 roots drifting positive in

solve_quartic(). The wrongly positive roots are then scaled up to a

value greater than the current 1e-2 small root/intersection depth

filter. Not being filtered, the roots corrupt results.

While roots crossing zero due to tolerance are the most serious tolerance-multiplied-by-scale issue, roots well positive can drift quite a lot too in the global space where the scale up is large.

The improvement adopted for the solve_quartic() and polysolve()/sturm

solvers - where 'scaled tolerance' issues have been seen - was to add a

Newton-Raphson root polishing step to each. With blobs this looks to

allow a returned depth on the order of 4e-8 over 1e-2 for a 1e-7 to 1e7

global working range at DBL = 'double'.
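For readers unfamiliar with root polishing, a generic Newton-Raphson polish of one polynomial root looks roughly like the sketch below. It is a textbook version under my own assumptions (coefficient order, step limit, stopping rule) with a made-up name `polishRoot`; it is not the implementation committed to the branch.

```cpp
#include <cmath>

// Polish one approximate root x of c[0]*x^n + ... + c[n] with a few
// Newton-Raphson steps. p and p' are evaluated together by Horner's rule.
double polishRoot(const double* c, int n, double x, int maxSteps = 8)
{
    for (int step = 0; step < maxSteps; ++step)
    {
        double p  = c[0];   // running value of the polynomial
        double dp = 0.0;    // running value of its derivative
        for (int i = 1; i <= n; ++i)
        {
            dp = dp * x + p;
            p  = p * x + c[i];
        }
        if (dp == 0.0)
            break;                       // flat spot: keep the current estimate
        double next = x - p / dp;
        if (!std::isfinite(next))
            break;
        if (std::fabs(next - x) <= 1e-15 * std::fabs(next))
            return next;                 // converged to roughly double precision
        x = next;
    }
    return x;
}
```

Starting from 1.41 for x^2 - 2, a few steps land on sqrt(2) to about double precision. The idea in the post is the same: the solver supplies a rough root, and the polish step pulls it back toward the true root so that roots really at 0.0 do not drift positive.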

Aside for thought: Might we be able to determine when we are evaluating

a self-shadowing or same-shape-terminating ray-surface equation? If so,

it should be we can use the knowledge we have a root 'at 0.0' to always

deflate / reduce the order of these polynomials prior to finding roots.

Updates at:

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

Performance and specific commit details below.

Bill P.

(1) - The idea of going as small as >0.0 in each shape's implementation

fly is not presently possible. Trying instead a new much smaller

MIN_ISECT_DEPTH_RETURNED value - see commit comments.

Performance info:

------------ /usr/bin/time povray -j -wt1 -fn -p -d -c lemonSturm.pov

0) master 30.22user 0.04system 0:30.89elapsed

19) 4e16623 14.94user 0.01system 0:15.56elapsed -50.56%

20) 0d66160 15.22user 0.03system 0:15.80elapsed +1.87%

(hilbert_curve linear sphere_sweep

scene with new solve_quadratic()) (-7.5%)

21) 761dd0b 15.05user 0.04system 0:15.71elapsed -1.12%

22) 552b625 (quartic polish non-sturm lemon scene) (+0.82%)

23) 63e0456 15.16user 0.02system 0:15.76elapsed +1.20%

24) ec28851 NA Code documentation only.

25) 8962513 15.43user 0.02system 0:16.05elapsed +1.78% -48.94%

(quartic polish non-sturm lemon scene) (+1.02% +2.70%)

19) Removing leading, near zero coefficient reduction in Solve_Polynomial().

Leading coefficients of < SMALL_ENOUGH (1e-10) were being dropped

reducing the order of the incoming polynomial when the near zero values

had meaning with respect to roots. Led to both false roots and missed roots.

20) New zero coefficient stripping and solve_quadratic implementation.

General effort to implement better 'effective zero' coefficient

handling. Created new POV_DBL_EPSILON macro value which is 2x the C++

standards <float type>_EPSILON value and updated PRECISE_EPSILON to be

2x the single bit epsilon as well.

Zero filtering in polysolve now looks at all polynomial coefficients and

either sets 'effective zeros' to exactly 0.0 or strips them if they are

leading coefficients.

The much more accurate near zero coefficients drove the need for a

better solve_quadratic implementation included with this commit. Note it

supports the PRECISE_FLOAT options like polysolve.

Zero filtering in solve_quadratic, solve_cubic and solve_quartic now

standardized both in implementation and use of POV_DBL_EPSILON.

21) Created new constexpr DBL variable MIN_ISECT_DEPTH_RETURNED.

Near term need to use a value not MIN_ISECT_DEPTH in blob.cpp to test

Jérôme's github pull request #358. Longer term aim is to drive all

returned intersection depths from shape code to

MIN_ISECT_DEPTH_RETURNED. The value is automatically derived from DBL

setting and at double resolves to about 4.44089e-08. On the order of the

square root of POV_DBL_EPSILON.
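As a rough check of the magnitudes, the sketch below computes twice the standard double epsilon (how the previous commit describes POV_DBL_EPSILON) and its square root. The constant names are made up, and the actual formula used to derive MIN_ISECT_DEPTH_RETURNED is not given in this post, so this only illustrates the order of magnitude.

```cpp
#include <cmath>
#include <limits>

// Assumption from the commit text: POV_DBL_EPSILON is twice the standard
// epsilon of the DBL type (here double).
const double kPovDblEpsilon = 2.0 * std::numeric_limits<double>::epsilon();

// Square root of that value, for an order-of-magnitude comparison only.
const double kSqrtPovDblEpsilon = std::sqrt(kPovDblEpsilon);
```

These come out at about 4.44089e-16 and 2.1e-8, the latter being the same order of magnitude as the quoted 4.44089e-08.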

Moving blob.cpp's inside test value INSIDE_TOLERANCE to POV_DBL_EPSILON

over previous recent re-calculation. Over time plan is to move EPSILONs

best nearer a double's step to POV_DBL_EPSILON.

Cleaning up doxygen documentation added during recent solver related

updates.

22) Adding root polishing step to solve_quartic function.

Newton-Raphson step added to polish initial roots found by the solve_quartic function. The core solve_quartic tolerance allows roots to drift from <=0.0 to >0.0 values, with the latter causing artifacts and additional root filtering. This is the reason for the long-standing, too large 1e-2 intersection depth value in blob.cpp, now reduced to MIN_ISECT_DEPTH_RETURNED.

As part of this change created a new FUDGE_FACTOR4(1e-8) constant DBL value to replace previous use of SMALL_ENOUGH(1e-10) within solve_quartic. Looks like the value had been smaller to make roots more accurate, but at the cost of missing roots in some difficult equation cases. With the root polishing we can move back to a larger value so as to always get roots. Yes, this better addresses most of what the already removed difficult_coeffs() function and bump into polysolve was trying to do.

23) Adding root polishing step to polysolve function.

24) Cleaning up a few comments and doxygen documentation.

25) Moving to more conservative root polishing implementations.


Attachments:

Download 'tolerancestory.png' (97 KB)


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 25 Nov 2018 11:18:25

Message: <5bfacb51$1@news.povray.org>


On 3/30/18 8:21 AM, William F Pokorny wrote:

> In continuing to look at media I returned to a 'blob-media' issue Gail

> Shaw originally posted back in 2005.

A long time since I posted here on the progress I guess... Still hacking

- just proving hard to get everything dependent on polysolve to

something mostly 'fixed.'

Yesterday, Johannes (j13r) posted in newusers a scene where he was

trying to create a scene using torus shapes and media to create

something like solar flares on the surface of a disk. A slightly

modified version without lights makes for a pretty good poster for

current media issues compared to my current working branch with better

solvers/shape code, my current version of the change proposed in:

https://github.com/POV-Ray/povray/pull/358 and the internal small

tolerance at 1e-6 instead of 1e-3.

The current v3.8 on the left, my patched branch in the middle and the

differences (artifacts) shown on the right. The scene was modified for

no lights and run with the flags: +w600 +h300 Corona.pov -j +p +a in

both cases.

Bill P.


Attachments:

Download 'coronaa_to_coronab.jpg' (21 KB)


From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 28 Nov 2018 08:23:45

Message: <5bfe96e1@news.povray.org>


On 25.11.2018 at 17:18, William F Pokorny wrote:

> A long time since I posted here on the progress I guess... Still hacking

> - just proving hard to get everything dependent on polysolve to

> something mostly 'fixed.'

I admire and appreciate your perseverance in this matter.


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 11 Mar 2019 10:41:20

Message: <5c867390@news.povray.org>


On 3/30/18 8:21 AM, William F Pokorny wrote:

> In continuing to look at media I returned to a 'blob-media' issue Gail

> Shaw originally posted back in 2005. The bright spots at the very edge

> are indeed caused by the 'intersection depth < small_tolerance' or

> missed intersection issue as Slime guessed at the time.

>

Sturm / polysolve(), solvers investigation. End of this thread.

Almost exactly a year ago started to dig into numerical issues. It's a

good time to take a break. Before I do, five more commits to close out

this thread with updates representing a reasonably complete set of

improvements. I have in mind still many possible solver improvements and

code improvements beyond these. I could spend the rest of my lifetime at

it. I'm instead going to get back to some scene work near term.

First commit a set of changes I've been sitting on since last fall which

to my testing makes most everything better solver-side wise. The one

user oriented change was adding a sturm option to sphere_sweep where

that sturm means a two pass sort of sturm. The sturm control tests a fix

for a class of issues most often seen with orthogonal cameras to an in

plane sweep as might be done for human signatures or presentation like

images.

Second commit is my version of Jerome's pull request #358 which needs

some of the changes in the first commit. Note this update causes scenes

with photons to be somewhat slower due more photons being deposited.

Third commit addresses what might be called 2.5 issues with the shadow

cache for both artifacts and performance. The update causes a shift in

certain shadow artifacts. I suspect the state of the shadow cache might

have come about as a way to hide (it didn't completely and causes other

issues) certain shadowing issues - but guessing. The commit also cleans up

SMALL_TOLERANCE and SHADOW_TOLERANCE uses in the shadow cache / trace

shadow code. The values have been equivalent for 25+ years so the

tangling had not mattered to result.

Fourth commit mostly a completion of the second and third eliminating

SMALL_TOLERANCE in addition to MIN_ISECT_DIST. This partly follows up on

a question Jerome asked either in #358 or some related issue. Namely,

why not zero or near it for the Intersect_BBox_Dir calls in object.cpp.

Larger values do indeed cause artifacts with secondary rays as

especially noticeable in scenes with media. It's one of many causes for

media speckles. Pull #358 as originally submitted moved from a

MIN_ISECT_DIST value of 1e-4 to SMALL_TOLERANCE of 1e-3 making the

inherent problem worse. SMALL_TOLERANCE had also been adopted a few

other places in the code. In those cases moved to gkDBL_epsilon or

gkMinIsectDepthReturned as appropriate.

Fifth commit changes Cone_Tolerance(1e-9) in cone.cpp to

gkMinIsectDepthReturned(4.4e-8). Found to be necessary during testing

due pull #358 changes.

Solvers in much better shape to my testing (2000+ cases now) but, so

long as we run more or less directly on the floating point hardware for

best speed, there will always be floating point accuracy issues cropping up.

All shapes where I made modifications test better and scale better, but I'll mention that sphere_sweeps still have substantial issues with media (even at 1x scale) and scaling. There are several reasons, one being that the necessary updates are not easy. Rays perpendicular to a sweep's direction will sometimes show MORE artifacts due to the tightening up on solver accuracy(1) - this is where the new sturm (double sturm) control must be used.

The two attached images show a kind of media scaling set I've generally adopted, as it seems to be a pretty good way to test the numerical soundness of a shape's code - especially the secondary rays. One image is of blobs using sturm, the other of blobs using the updated solve_quartic code. The middle row is the updated and essentially speckle-less new result. The seventh column over is the 1x scene. Scales run from 1e6x on the left to 1e-7x on the right.

Updates at (2):

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

Performance and specific commit details below.

Bill P.

(1) - As mentioned previously some of the solvers had been de-tuned

(solve_quadratic and sturm) to help lathes and sphere_sweeps - I guess.

The solve_quartic solver was tuned - perhaps by accident given the

previous use of SMALL_ENOUGH - to be more accurate at the expense of

finding roots. In that latter case now tuned to find the most roots

possible with root polishing. Argh! I'd need to write a book to describe

anything close to all the details addressed and still open. For the

record significant solver related conversation can be found in the

github pull request comments at: https://github.com/POV-Ray/povray/pull/358.

(2) - The last set of changes in master forced some branch merges

instead of the usual re-basing. The solver branch, for one, had to be

merged to maintain compile-ability on checkout of previous branch commits.

Performance info:

------------ /usr/bin/time povray -j -wt1 -fn -p -d -cc lemonSturm.pov

0) master 30.22user 0.04system 0:30.89elapsed

25) 8962513 15.43user 0.02system 0:16.05elapsed +1.78% -48.94%

(quartic polish non-sturm lemon scene) (+1.02% +2.70%)

26) 75ddd88 17.53user 0.02system 0:18.11elapsed +13.61%

(quartic non-sturm lemon scene) (+3.10%)

27) 4ea0c37 NA (slowdown due photons. benchmark +3.5%)

28) ff6cd8d 14.41user 0.02system 0:15.01elapsed (-17.80%)

29) ef9538b NA

30) 38b434d NA

...
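
The percentages in the listing above appear to be relative changes in user time. That is an inference from the numbers rather than something stated in the post: master to commit 25 gives -48.94%, and each later figure is consistent with a comparison against the preceding timed commit (commit 25's own +1.78% would then be against commit 24, which is not shown). A minimal sketch of that arithmetic, with the function name being hypothetical:

```cpp
#include <cassert>
#include <cmath>

// Relative change, in percent, of a new timing against a reference timing.
// Negative values mean the new run is faster than the reference.
// (Hypothetical helper; not part of POV-Ray.)
double PercentChange(double referenceSeconds, double newSeconds)
{
    assert(referenceSeconds > 0.0);
    return (newSeconds - referenceSeconds) / referenceSeconds * 100.0;
}
```

With the user times listed, PercentChange(30.22, 15.43) is about -48.94, PercentChange(15.43, 17.53) is about +13.61 and PercentChange(17.53, 14.41) is about -17.80, matching the figures for commits 25, 26 and 28.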

25) Moving to more conservative root polishing implementations.

Further making constant names consistent with recommended coding style. In

polynomialsolver.cpp results in the following name changes.

FUDGE_FACTOR2 now kSolveQuarticV1_Factor2

FUDGE_FACTOR3 now kSolveQuarticV1_Factor3

FUDGE_FACTOR4 now kSolveQuarticV2_Factor4

TWO_M_PI_3 now kSolveCubic_2MultPiDiv3

FOUR_M_PI_3 now kSolveCubic_4MultPiDiv3

MAX_ITERATIONS now kMaxIterations

SBISECT_MULT_ROOT_THRESHOLD now kSbisectMultRootThreshold

REGULA_FALSA_THRESHOLD now kRegulaFalsaThreshold

RELERROR now kRelativeError

SMALL_ENOUGH now kSolveQuadratic_SmallEnough
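
For readers unfamiliar with the term, "root polishing" as used for commit 25 generally means refining an already-found root with a few extra iterations. The sketch below is one conservative way to do that with Newton steps on a quartic. It is an illustration only: the function names, the iteration cap and the "accept only if the residual shrinks" rule are assumptions, not the code in polynomialsolver.cpp.

```cpp
#include <cassert>
#include <cmath>

// Evaluate c[0]*x^4 + c[1]*x^3 + c[2]*x^2 + c[3]*x + c[4] and its first
// derivative together using Horner's scheme. (Hypothetical helper.)
void EvalQuartic(const double c[5], double x, double& value, double& deriv)
{
    value = c[0];
    deriv = 0.0;
    for (int i = 1; i <= 4; ++i)
    {
        deriv = deriv * x + value;
        value = value * x + c[i];
    }
}

// Polish a root estimate with a few Newton steps. "Conservative" here means
// a step is accepted only if it reduces |f(x)|; otherwise the best estimate
// found so far is returned unchanged.
double PolishRoot(const double c[5], double root, int maxIterations = 4)
{
    assert(maxIterations >= 0);
    double best = root, bestValue = 0.0, bestDeriv = 0.0;
    EvalQuartic(c, best, bestValue, bestDeriv);

    for (int i = 0; i < maxIterations; ++i)
    {
        if (bestDeriv == 0.0)
            break; // Newton step undefined at a stationary point.

        const double next = best - bestValue / bestDeriv;
        double nextValue = 0.0, nextDeriv = 0.0;
        EvalQuartic(c, next, nextValue, nextDeriv);

        if (!(std::fabs(nextValue) < std::fabs(bestValue)))
            break; // No improvement (or NaN): keep the previous estimate.

        best = next;
        bestValue = nextValue;
        bestDeriv = nextDeriv;
    }
    return best;
}
```

For example, with the coefficients {1, -10, 35, -50, 24} of (x-1)(x-2)(x-3)(x-4), a rough estimate of 1.01 is pulled back toward the true root at 1.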

26) Initial, reasonably complete, update to common solvers.

Working on possible further improvements, but those likely quite far out

in time. In total dozens of issues addressed. New solver call structure.

Solvers themselves more accurate and aligned better with common

practice. Additional root polishing. Corresponding updates to shape code

while working to extend scaling range for shapes. Changes encompass

updates needed to support a commit to follow which covers pull request

#358 and its associated issues.

Generally the sturm option is now much faster. It is also true that, due to

improvements to the fixed solvers, the sturm option is less often necessary.

The sphere_sweep now supports a sturm option. Here sturm runs the

sturmian solver in a two pass approach which, for certain scenes, will

work where the previous single pass sturmian solver did not. Unlike

other updated shapes, the sphere_sweep's scaling accuracy range was not

much improved due to a decision to leave other parse time optimizations for

run time performance in place.

27) My implementation of Jerome's pull request #358.

Fix for issues #121, #125 and several related newsgroup reports as well.

Mostly it restores 3.6 behavior with respect to intersection depths

filtered.

Note! Scenes using photons will often run slower and look somewhat

different due to additional photons being deposited. This includes our

benchmark scene.

28) Shadow cache fixes. SHADOW_TOLERANCE vs SMALL_TOLERANCE cleanup.

SHADOW_TOLERANCE not used for cached results leading to artifacts though

sometimes hiding others.

Shadow cache not invalidated on misses causing sometimes significant

performance hit.

SMALL_TOLERANCE being used instead of SHADOW_TOLERANCE in some trace

shadow related comparisons. Values had long (25+ years) been cleaned up

ahead of SMALL_TOLERANCE removal.

Note! This is the last commit where SMALL_TOLERANCE will exist.
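
The two shadow cache problems named in commit 28 can be pictured with a toy model. Everything in the sketch below is hypothetical (the types, the names, the tolerance value and the way occluders are tested); it only illustrates the two behaviors described: apply the same tolerance to a cached blocker as to any other object, and drop the cached blocker when it misses.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Stand-in for SHADOW_TOLERANCE. The value is an assumption for illustration.
constexpr double kShadowTolerance = 1.0e-3;

// Toy occluder: returns the hit depth along the shadow ray, negative on a miss.
using OccluderTest = std::function<double()>;

// Remembers the index of the object that last blocked the light, or -1.
struct ShadowCache { int lastBlocker = -1; };

bool IsShadowed(const std::vector<OccluderTest>& occluders,
                double lightDistance, ShadowCache& cache)
{
    assert(lightDistance > 0.0);

    // One tolerance test shared by cached and uncached paths.
    auto blocks = [&](int i) {
        const double depth = occluders[i]();
        return depth > kShadowTolerance &&
               depth < lightDistance - kShadowTolerance;
    };

    if (cache.lastBlocker >= 0 &&
        cache.lastBlocker < static_cast<int>(occluders.size()))
    {
        if (blocks(cache.lastBlocker))
            return true;
        cache.lastBlocker = -1; // Invalidate on a miss.
    }

    for (int i = 0; i < static_cast<int>(occluders.size()); ++i)
    {
        if (blocks(i))
        {
            cache.lastBlocker = i; // Try this object first next time.
            return true;
        }
    }
    return false;
}
```

Without the invalidation step, a stale cached object would keep being tested first on every later shadow ray, which is the kind of wasted work the commit note attributes the performance hit to.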

29) Mostly details completing the previous two commits.

Eliminating SMALL_TOLERANCE in addition to MIN_ISECT_DIST. This partly

follows up on a question Jerome asked either in #358 or some related

issue. Namely, why not zero or near it for the Intersect_BBox_Dir calls

in object.cpp. Larger values do indeed cause artifacts with secondary

rays, which are especially noticeable in scenes with media. It's one of many

causes for media speckles. Pull #358 as originally submitted moved from

a MIN_ISECT_DIST value of 1e-4 to SMALL_TOLERANCE of 1e-3 making the

inherent problem worse. SMALL_TOLERANCE had also been adopted a few

other places in the code. In those cases moved to gkDBL_epsilon or

gkMinIsectDepthReturned as appropriate.

30) In cone.cpp changing Cone_Tolerance to gkMinIsectDepthReturned.

During testing found the Cone_Tolerance in cone.cpp, which had been

changed from 1e-6 to 1e-9 between v3.6 and v3.7, was too small for some photon

scenes. Secondary rays starting on the surface (at zero but numerically

not) were not getting filtered with the pull request #358-like changes

(MIN_ISECT_DIST to 0.0). Moved to new gkMinIsectDepthReturned (4.4e-8)

used now in many other shapes and OK for test scenes I have.
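
The effect described for commit 30 can be sketched as a simple depth filter. The constant below uses the 4.4e-8 value quoted above, but its name, the function and the sample depths are illustrative assumptions and not the cone.cpp code.

```cpp
#include <cassert>
#include <vector>

// Value quoted in the post for gkMinIsectDepthReturned; the name used here
// is a local stand-in, not the POV-Ray constant itself.
constexpr double kMinDepthReturned = 4.4e-8;

// Keep only ray depths strictly greater than minDepth. A secondary ray that
// starts on a surface tends to re-hit that surface at a tiny positive depth
// because of rounding, and this filter is what rejects that self-hit.
std::vector<double> FilterDepths(const std::vector<double>& depths,
                                 double minDepth = kMinDepthReturned)
{
    assert(minDepth >= 0.0);
    std::vector<double> kept;
    for (double t : depths)
    {
        if (t > minDepth)
            kept.push_back(t);
    }
    return kept;
}
```

As an illustration, suppose a self-intersection comes back at a depth of 3e-9 next to a genuine hit at 2.0. A tolerance of 1e-9 lets both through, while 4.4e-8 keeps only the genuine hit.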

Attachments:

Download 'blobsturmstory.png' (78 KB)

Download 'blobnosturmstory.png' (74 KB)
