
From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 18 May 2018 22:16:31

Message: <5aff88ff@news.povray.org>


On 18.05.2018 at 19:00, William F Pokorny wrote:

> Christoph added this bit of code in blob.cpp to avoid visible seams:

>

> DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

>

> I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

> personal version of POV-Ray which fixes all the blob accuracy issues

> except for the subsurface one.

For subsurface rays it's safer to keep it at exactly 0.

The `depthTolerance` mechanism, to the best of my knowledge, is in there to

make sure that transmitted, reflected or shadow rays don't pick up the

original surface again.

This problem is absent in rays earmarked as subsurface rays (presuming

we have high enough precision) because such rays are never shot directly

from the original intersection point, but a point translated slightly

into the object. So subsurface rays already carry an implicit

depthTolerance with them.

> It could be adjusted so as to push more solve_quartic rays into sturm /

> polysolve, but it's already the case that most difficult-coefficient rays are

> unnecessarily run in sturm / polysolve when solve_quartic would work

> just fine. The right thing to do is let users decide when to use 'sturm'

> and this set of commits dumps the difficult_coeffs() code.

Would it make sense to, rather than ditching the test entirely, instead

have it trigger a warning after the render (akin to the isosurface

max_gradient info messages) informing the user that /if/ they are seeing

artifacts with the object they may want to turn on sturm (provided of

course sturm is off)?


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 19 May 2018 09:49:07

Message: <5b002b53@news.povray.org>


On 05/18/2018 10:16 PM, clipka wrote:

> On 18.05.2018 at 19:00, William F Pokorny wrote:

>

>> Christoph added this bit of code in blob.cpp to avoid visible seams:

>>

>> DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

>>

>> I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

>> personal version of POV-Ray which fixes all the blob accuracy issues

>> except for the subsurface one.

Formerly, a blob's 'DEPTH_TOLERANCE' value was used both for the tolerance on intersections returned relative to the ray origin and for the internal determine_influences() 'min_dist' tolerance, though the two control different things. The internal sub-element influence mechanism now uses a value greater than 0.0. I believe it always should have, except that doing so sometimes creates slightly more difficult ray-surface equations, which is my bet for why the original v1.0 code didn't.

>

> For subsurface rays it's safer to keep it at exactly 0.

>

> The `depthTolerance` mechanism, to the best of my knowledge, is in there to

> make sure that transmitted, reflected or shadow rays don't pick up the

> original surface again.

>

It depends on which intersection depth tolerance you mean. There are variations of the mechanism.

a) What you say is close to my understanding of the continued and shadow ray mechanisms, which exist outside the code for each shape. These mechanisms live in the 'ray tracing' code. I'd slightly reword what you wrote to say that these mechanisms prevent the use of ray-surface intersections within some distance of a previous intersection. This distance is today usually SMALL_TOLERANCE, or 1e-3. SHADOW_TOLERANCE has also been 1e-3 for more than 25 years and was only ever something different because Enzmann's initial solvers had a hard-coded shadow ray filter at a value larger than SMALL_TOLERANCE. There is also MIN_ISECT_DEPTH.

b) Root / intersection depth tolerance filters exist in the code for each shape / surface too. These control only whether each root / intersection for the shape lies beyond a minimum intersection depth relative to the incoming ray's origin and below MAX_DISTANCE. They do not control how closely ray-surface intersections are spaced from each other(1)(2). True, the shape depth tolerance can influence continued or shadow ray handling by being 'different' from the tolerances used in (a). However, we should aim for (b) <= (a) depth tolerance alignment.
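The (b) filter described above can be pictured with the minimal C++ sketch below. It is not POV-Ray code: `filterShapeRoots`, `shapeDepthTolerance` and `kMaxDistanceSketch` are hypothetical names, and the 1e7 upper cut-off is only a placeholder standing in for MAX_DISTANCE. It shows that the per-shape test looks only at each root's distance from the ray origin, never at the spacing between roots, and that a tolerance of exactly 0 combined with a strict comparison still means "keep roots > 0.0".

```cpp
#include <cassert>
#include <vector>

// Placeholder upper cut-off standing in for MAX_DISTANCE (assumed value).
constexpr double kMaxDistanceSketch = 1.0e7;

// (b)-style per-shape filter: keep a root t only if it lies strictly beyond
// depthTolerance from the ray origin and strictly below the upper cut-off.
// Nothing here looks at how closely the kept roots are spaced; that is left
// to the (a)-style continued / shadow ray logic.
std::vector<double> filterShapeRoots(const std::vector<double>& roots,
                                     double depthTolerance)
{
    assert(depthTolerance >= 0.0);
    std::vector<double> kept;
    for (double t : roots)
        if (t > depthTolerance && t < kMaxDistanceSketch)
            kept.push_back(t);
    return kept;
}

// Mirrors the blob.cpp conditional quoted in this thread: subsurface rays
// use 0, which with the strict '>' test above means "any root > 0.0".
double shapeDepthTolerance(bool isSubsurfaceRay, double depthTolerance)
{
    return isSubsurfaceRay ? 0.0 : depthTolerance;
}
```

With a tolerance of 1e-4, roots at 0 and 5e-5 are discarded while 2e-4 and 3 survive; for a subsurface ray the 5e-5 root is kept as well.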

On seeing your subsurface code running the (b) variety at >0.0, I had the thought: why not do this with all shapes / surfaces, and let (a) filter the roots for its needs? This would get us closer to being able to make SMALL_TOLERANCE & MIN_ISECT_DEPTH smaller, so people don't so often have to resort to the ugly scale-up-the-scene trick. There is no numerical reason we cannot generally support much smaller scene dimensions than we do.

Well, it turns out that returning all roots >0.0 in each shape's (b) mechanism costs CPU time: about 1% of the 3% increase measured, together with the internal blob influence min_dist change, for a glass blob scene. Time is saved where intersections that (a) would have to filter later are simply never returned by (b). This experiment is why I set blob.cpp's DEPTH_TOLERANCE = MIN_ISECT_DEPTH, which also happens to be the 1e-4 I've long been using. I currently think the (b) depth for each shape / surface should be migrated to MIN_ISECT_DEPTH over time.

(1) - I expect CSG generally needs all the roots regardless of spacing, but this is code I've never reviewed.

(2) - Mostly true for what I've seen. But who knows, other built-in shape / surface implementations might do spatial root filtering for internal reasons. The sphere_sweep code seems to be doing a sort of root correction, with which you are far more familiar than I am.

> This problem is absent in rays earmarked as subsurface rays (presuming

> we have high enough precision) because such rays are never shot directly

> from the original intersection point, but a point translated slightly

> into the object. So subsurface rays already carry an implicit

> depthTolerance with them.

>

I tested subsurface scenes with my updates and believe the changes are compatible without the need for the returned depth to be >0.0. Thinking about it another way: if the 'returned' min depth needed to be >0.0 for subsurface to work, all shapes would need that conditional for >0.0, and this is not the case. I believe we're good, but we can fix things if not.

>> It could be adjusted so as to push more solve_quartic rays into sturm /

>> polysolve, but it's already the case that most difficult-coefficient rays are

>> unnecessarily run in sturm / polysolve when solve_quartic would work

>> just fine. The right thing to do is let users decide when to use 'sturm'

>> and this set of commits dumps the difficult_coeffs() code.

>

> Would it make sense to, rather than ditching the test entirely, instead

> have it trigger a warning after the render (akin to the isosurface

> max_gradient info messages) informing the user that /if/ they are seeing

> artifacts with the object they may want to turn on sturm (provided of

> course sturm is off)?

>

We should think about it, but currently my answer to your specific question is no. The difficult coefficient method is wildly inaccurate in practice, unlike the isosurface max_gradient warning.

A warning would be good if we can figure out something better. Mostly it will be obvious, and more often obvious with this update: if you are running blobs and see speckles / noise, try sturm. That's good advice today, by the way, and advice I should have taken with my own potential glow scene instead of resorting to extreme AA; but the now-dropped code hid much of the solve_quartic issue from me, and I thought media was the cause.

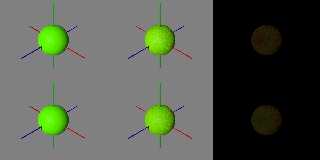

I ran test scenes where it is pretty much impossible to know you need sturm without trying it and comparing images. For example, attached is an image where I was aiming for a fruit-looking thing with a faked subsurface effect, using multiple concentric positive and negative blobs to take advantage of blob texture blending.

In the left column we see the current result for master (3.8) and earlier, where the internal blob surfaces are not accurately resolved. The right column shows the difference between columns 1 and 2 for each row.

The top row, middle column shows the new solve_quartic()-only solution, which in fact has bad roots on a surface internal to the outer ones. Negative blobs, in addition to tiny thresholds, tend to trip solve_quartic failures. We cannot 'see' that we've got bad roots. The bottom row is the accurate, though as it happens less attractive, sturm result.

Reasonably accurate warnings would be good. I wonder if there is a way to pick the issue up in solve_quartic itself, or in blob.cpp knowing sturm is not set.
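One conceivable way to catch bad roots inside solve_quartic() itself, offered only as a hypothetical sketch and not as anything POV-Ray does, is to substitute each returned root back into the quartic and compare the residual against a rounding-error bound for Horner evaluation. The function name, the coefficient ordering (highest power first) and the `slack` factor are assumptions. The limits matter: such a check flags roots that were returned but are wrong; it cannot detect roots that were missed, and near multiple roots a small residual does not guarantee an accurate root.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

// Hypothetical post-check for one root t of c[0]*t^4 + c[1]*t^3 + ... + c[4]
// (coefficient ordering assumed for this sketch). Returns true when |p(t)|
// exceeds slack * epsilon * (Horner evaluation of |c[i]| at |t|), i.e. when
// t does not behave like a root at double precision.
bool quarticRootLooksSuspect(const double c[5], double t, double slack = 64.0)
{
    assert(c != nullptr && slack > 0.0);
    double value = 0.0;     // p(t) by Horner's rule
    double magnitude = 0.0; // same recurrence on |c[i]| and |t|
    const double absT = std::fabs(t);
    for (int i = 0; i < 5; ++i) {
        value = value * t + c[i];
        magnitude = magnitude * absT + std::fabs(c[i]);
    }
    const double bound =
        slack * std::numeric_limits<double>::epsilon() * magnitude;
    return std::fabs(value) > bound;
}
```

For (t-1)(t-2)(t-3)(t-4), a root reported at exactly 2 passes, while one reported at 2.001 leaves a residual near 0.002 and is flagged.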

Bill P.

Attachment: 'diffcoeffhidden.png' (160 KB)

From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 20 May 2018 12:10:08

Message: <5b019de0$1@news.povray.org>


On 05/18/2018 10:16 PM, clipka wrote:

> On 18.05.2018 at 19:00, William F Pokorny wrote:

>

>> Christoph added this bit of code in blob.cpp to avoid visible seams:

>>

>> DBL depthTolerance = (ray.IsSubsurfaceRay()? 0 : DEPTH_TOLERANCE);

>>

>> I've been running with DEPTH_TOLERANCE at 1e-4 for a long time in my

>> personal version of POV-Ray which fixes all the blob accuracy issues

>> except for the subsurface one.

>

> For subsurface rays it's safer to keep it at exactly 0.

>

...

>

> This problem is absent in rays earmarked as subsurface rays (presuming

> we have high enough precision) because such rays are never shot directly

> from the original intersection point, but a point translated slightly

> into the object. So subsurface rays already carry an implicit

> depthTolerance with them.

>

Aside: unless we change the blob.cpp code, 'exactly 0' means >0.0.

I woke up this morning thinking more about your description of SSLT. Though I had picked up no major differences in results, the results were not identical, but I didn't expect them to be. I had not, however, run the returned root depth threshold at both 0.0 and 1e-4 (MIN_ISECT_DEPTH) and compared the results.

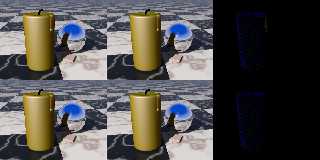

The top row in the attached image is without sturm, the bottom row with. The differences are very subtle, but can be seen after being multiplied by 50.

The left column is without my recent changes, so min_dist and the returned depth are >0.0, with sturm automatically getting used often in the upper left image.

The middle column is the updated code, so the middle top image now uses strictly solve_quartic(), making the differences on the right greater. The bottom, sturm-always row best shows the effect of not returning roots <= 1e-4 from the ray origin.

My position is still that the difference isn't large enough to be of concern, but if it is, maybe we should more strongly consider going to >0.0, or some very small value, for all shapes. Doing it only in blob.cpp doesn't make sense to me.

Aside: MIN_ISECT_DEPTH doesn't exist before 3.7. Is its creation related to the SSLT implementation?

Bill P.

Attachment: 'sslt_rtrnrootquestion.png' (460 KB)

From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 20 May 2018 17:09:35

Message: <5b01e40f$1@news.povray.org>


On 20.05.2018 at 18:10, William F Pokorny wrote:

> My position is still the difference isn't large enough to be of concern,

> but if so, maybe we should more strongly consider going to >0.0 or some

> very small values for all shapes. Doing it only in blob.cpp doesn't make

> sense to me.

I guess the reason I changed it only for blobs was because there I

identified it as a problem - and I might not have been familiar enough

with the shapes stuff to know that the other shapes have a similar

mechanism.

After all, why would one expect such a mechanism if there's the "global"

MIN_ISECT_DEPTH?

Technically, I'm pretty sure the mechanism should be disabled for SSLT

rays in all shapes.

> Aside: MIN_ISECT_DEPTH doesn't exist before 3.7. Is its creation

> related to the SSLT implementation?

No, it was already there before I joined the team. The only relation to

SSLT is that it gets in the way there.

My best guess would be that someone tried to pull the DEPTH_TOLERANCE

mechanism out of all the shapes, and stopped with the work half-finished.

BTW, one thing that has been bugging me all along about the

DEPTH_TOLERANCE and MIN_ISECT_DEPTH mechanisms (and other near-zero

tests in POV-Ray) is that they're using absolute values, rather than

adapting to the overall scale of stuff. That should be possible, right?
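As a purely hypothetical illustration of what "adapting to the overall scale of stuff" could look like, the sketch below derives a depth tolerance from a relative factor times a characteristic length instead of using a fixed absolute value. Nothing here exists in POV-Ray: the function name, the choice of scale (the larger of the ray origin's distance from the world origin and an overall scene extent) and the default factors are all assumptions.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical scale-aware depth tolerance. Instead of a fixed absolute
// value (1e-4, 1e-3, ...), multiply a relative factor by a characteristic
// length: here the larger of the ray origin's distance from the world origin
// and an overall scene extent (both choices are assumptions). A small
// absolute floor keeps the result from collapsing to zero.
double scaledDepthTolerance(double originDistance, double sceneExtent,
                            double relFactor = 1.0e-9,
                            double absFloor = 1.0e-12)
{
    assert(originDistance >= 0.0 && sceneExtent >= 0.0);
    const double scale = std::max(originDistance, sceneExtent);
    return std::max(absFloor, relFactor * scale);
}
```

A scene about 1e3 units across would get a tolerance near 1e-6, while a scene scaled down by 1e6 would get a correspondingly smaller one, instead of both sharing one absolute cut-off.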


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 21 May 2018 10:39:20

Message: <5b02da18$1@news.povray.org>


On 05/20/2018 05:09 PM, clipka wrote:

> On 20.05.2018 at 18:10, William F Pokorny wrote:

>

>> My position is still the difference isn't large enough to be of concern,

>> but if so, maybe we should more strongly consider going to >0.0 or some

>> very small values for all shapes. Doing it only in blob.cpp doesn't make

>> sense to me.

>

> I guess the reason I changed it only for blobs was because there I

> identified it as a problem - and I might not have been familiar enough

> with the shapes stuff to know that the other shapes have a similar

> mechanism.

>

> After all, why would one expect such a mechanism if there's the "global"

> MIN_ISECT_DEPTH?

Understood. Only recently have the root filtering and bounding mechanisms come into some focus for me, and I'm certain I still don't understand it all. There are also the two higher-level bounding mechanisms, which are tangled up in the root / intersection handling. I'm starting to get the brain-itch that the current bounding is not always optimal for 'root finding.'

>

> Technically, I'm pretty sure the mechanism should be disabled for SSLT

> rays in all shapes.

>

OK. I agree from what I see. Work toward >0.0, I guess, though I have a feeling there is likely a practical 'limit' for good performance somewhat above 0.0.

>

>> Aside: MIN_ISECT_DEPTH doesn't exist before 3.7. Is its creation

>> related to the SSLT implementation?

>

> No, it was already there before I joined the team. The only relation to

> SSLT is that it gets in the way there.

>

> My best guess would be that someone tried to pull the DEPTH_TOLERANCE

> mechanism out of all the shapes, and stopped with the work half-finished.

>

>

> BTW, one thing that has been bugging me all along about the

> DEPTH_TOLERANCE and MIN_ISECT_DEPTH mechanisms (and other near-zero

> tests in POV-Ray) is that they're using absolute values, rather than

> adapting to the overall scale of stuff. That should be possible, right?

>

Sure, but my 'anytime soon' answer is: not in POV-Ray as it stands. There are too many magic values, or defined values like EPSILON, used for differing (or effectively differing) purposes. Remember, for example, that one of the recent changes I made to the regula-falsi method used within polysolve() was to use the ray value domain universally for the relative & absolute error stops, instead of a mix of polynomial values and ray values. Root / intersection work is sometimes done in a normalized space(1) and sometimes not, etc. The pool of issues, questions and possibilities in which I'm mentally drowning is already deep, and I'm far from seeing the bottom. We can often scale scenes up for a better result because POV-Ray code has long been numerically biased to 0+ (1e-3 to 1e7)(2). Near term, I think we should work toward something zero-centered (1e-7 to 1e7).
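To illustrate stopping tests expressed purely in the ray value (t) domain, here is a small C++ sketch of a bracketing regula falsi. It is not the polysolve() code: it uses the Illinois modification (a well-known variant that avoids plain regula falsi's one-sided stall), and the name `regulaFalsiInT` and its tolerance parameters are assumptions. Both the relative and the absolute stop compare steps in t, never values of the polynomial.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

// Root of f on [a, b], where f(a) and f(b) have opposite signs, using regula
// falsi with the Illinois modification: when the same end of the bracket is
// kept twice in a row, its stored function value is halved. The stopping
// test is purely in t: stop when |t - tPrev| <= absTol + relTol * |t|.
double regulaFalsiInT(const std::function<double(double)>& f, double a,
                      double b, double relTol, double absTol,
                      int maxIter = 200)
{
    double fa = f(a), fb = f(b);
    assert(fa * fb < 0.0);
    double t = a;
    int lastKept = 0; // +1: a was kept last step, -1: b was kept last step
    for (int i = 0; i < maxIter; ++i) {
        const double tPrev = t;
        t = (a * fb - b * fa) / (fb - fa); // secant through bracket ends
        const double ft = f(t);
        if (ft == 0.0)
            return t;
        if (i > 0 && std::fabs(t - tPrev) <= absTol + relTol * std::fabs(t))
            return t;
        if ((ft > 0.0) == (fb > 0.0)) { // t replaces b; a is kept
            b = t;
            fb = ft;
            if (lastKept == +1)
                fa *= 0.5;
            lastKept = +1;
        } else {                        // t replaces a; b is kept
            a = t;
            fa = ft;
            if (lastKept == -1)
                fb *= 0.5;
            lastKept = -1;
        }
    }
    return t;
}
```

Because the tolerances are in the units of the ray parameter, they mean the same thing whether the polynomial's values happen to be large or tiny along the ray.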

I would like first to get to where we have a more accurate polysolve() against which any changes to other solvers / specialized shape solvers can be tested. After that, we can perhaps work to get all the solvers, and the solver variant code buried in shape code, to double / 'DBL' accuracy.

Underneath everything, solver / intersection-wise, is double precision, which is only about 15 decimal digits. The smallest double step (DBL_EPSILON) off 1.0 is 2.22045e-16. Plus, we use fast math, which degrades that accuracy.

Aside: I coded up a 128-bit polysolve(), but it was very slow (+10x), and the compiler feature doesn't look to be supported broadly enough as a standard to be part of POV-Ray. An interesting near-term idea to me, one Dick Balaska touched upon recently in another thread I think, is coding up a version of polysolve() that we'd ship using 'long double'. Long double is only guaranteed to be as precise as double (64 bits), but in practice today folks would mostly get 80 bits rather than 64, and sometimes more. It would need to be an option alongside the default 64-bit double, as it would certainly be slower; but being generally hardware-backed, its performance should be far better than the +10x degradation I see for 128 bits on my Intel processor. For most, it would be an improvement to 18 decimal digits and an epsilon step of 1.0842e-19 off 1.0. Worthwhile...?
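The precision figures quoted above can be checked on a given compiler with `std::numeric_limits`. The minimal sketch below (the helper names are illustrative, not from POV-Ray) reports decimal digits and machine epsilon for a floating point type.

```cpp
#include <cassert>
#include <limits>

// Decimal digits and machine epsilon (gap between 1.0 and the next larger
// representable value) for a floating point type T.
template <typename T>
struct PrecisionInfo {
    int digits10;
    T epsilon;
};

template <typename T>
constexpr PrecisionInfo<T> precisionOf()
{
    return PrecisionInfo<T>{std::numeric_limits<T>::digits10,
                            std::numeric_limits<T>::epsilon()};
}

// The language only requires long double to be at least as precise as double.
static_assert(std::numeric_limits<long double>::digits10 >=
                  std::numeric_limits<double>::digits10,
              "long double must not be less precise than double");
```

On x86-64 Linux with g++, `long double` is the 80-bit x87 format, so `precisionOf<long double>()` typically reports 18 digits and an epsilon of about 1.0842e-19; with MSVC, `long double` is the same as `double` and reports 15 digits.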

Bill P.

(1) Normalizing & the inverse, and transforms & the inverse, all degrade accuracy too.

(2) From the days of single-precision floats, where scaling up would have worked less well at hiding numerical issues (i.e. coincident surface noise), because the non-normalized / global accuracy was then something like 6 decimal digits, or 1e-3 to 1e3.


From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 21 May 2018 16:35:08

Message: <5b032d7c@news.povray.org>


On 21.05.2018 at 16:39, William F Pokorny wrote:

> Underneath everything solver / intersection wise is double accuracy

> which is only 15 decimal digits. The best double value step

> (DBL_EPSILON) off 1.0 is 2.22045e-16. Plus we use fastmath which

> degrades that accuracy.

>

> Aside: I coded up a 128bit polysolve() but it was very slow (+10x) and

> the compiler feature doesn't look to be supported broadly enough as a

> standard to be part of POV-Ray. A near term interesting idea to me - one

> Dick Balaska, I think, touched upon recently in another thread - is

> coding up a version of polysolve() we'd ship using 'long double'. Long

> double is guaranteed at only 64bits (double), but in practice today

> folks would mostly get 80bits over 64bits - and sometimes more. It would

> need to be an option to the default 64 bit double as it would certainly

> be slower - but being generally hardware backed, performance should be

> << the +10x degrade I see for 128bits on my Intel processor. Would be -

> for most - an improvement to 18 decimal digits and an epsilon step of

> 1.0842e-19 off 1.0. Worthwhile...?

I think that's a path worthwhile exploring further. My suggestion would

be to use a macro - say "PRECISION_FLOAT" - to define a type to be used

in places where precision matters even more than usual, so that we can

get binaries with different performance characteristics out of the same

source code: That macro could simply be defined as `double` for the

status quo, `long double` for a portable attempt at achieving more

precision without losing too much speed, a compiler-specific type such

as `__float128` on gcc, or even a type provided by a 3rd party library

such as GMP or MPFR.

> (2) From the days of single floats - where the scale up would have

> worked less well at hiding numerical issues (ie coincident surface

> noise) because the non-normalized / global accuracy was then something

> like 6 decimal digits or 1e-3 to + 1e3.

POV-Ray has never used single-precision floats. I doubt even DKBTrace

ever did: At least as of version 2.01, even on the Atari it already used

double-precision floats.

As a matter of fact, the IBM-PC configuration for DKBTrace 2.01 (*)

actually defined DBL as `long double` when a coprocessor was present.

DKBTrace 2.12 (**) additionally set EPSILON to 1.0e-15 instead of 1.0e-5

in that case (provided the compiler used wasn't Turbo-C, presumably

because it would define `long double` as 64-bit). This was carried on at

least until POV-Ray v2.2, but must have gotten lost between v2.2

for DOS and v3.6 for Windows. (My guess would be that the Windows

version never had it.)

(* See here:

https://groups.google.com/forum/#!topic/comp.sources.amiga/icNQTp_txHE)

(** See here: http://cd.textfiles.com/somuch/smsharew/CPROG/)


From: clipka

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 21 May 2018 17:13:30

Message: <5b03367a$1@news.povray.org>


On 21.05.2018 at 22:35, clipka wrote:

> I think that's a path worthwhile exploring further. My suggestion would

> be to use a macro - say "PRECISION_FLOAT" - to define a type to be used

Make that `PRECISE_FLOAT`; fits better with a series of similar types

already in use, `PreciseColourChannel`, `PreciseRGBColour` etc., which

are used for colour-ish data where `float` is not enough, most notably

the SSLT code. (Obviously, there the data type of choice is `double`,

and does suffice.)


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 22 May 2018 07:37:01

Message: <5b0400dd@news.povray.org>


On 05/21/2018 04:35 PM, clipka wrote:

> On 21.05.2018 at 16:39, William F Pokorny wrote:

>

> POV-Ray has never used single-precision floats.

Taking you to mean never in the 'solvers'.

> I doubt even DKBTrace

> ever did: At least as of version 2.01, even on the Atari it already used

> double-precision floats.

>

> As a matter of fact, the IBM-PC configuration for DKBTrace 2.01 (*)

> actually defined DBL as `long double` when a coprocessor was present.

> DKBTrace 2.12 (**) additionally set EPSILON to 1.0e-15 instead of 1.0e-5

> in that case (provided the compiler used wasn't Turbo-C, presumably

> because it would define `long double` as 64-bit). This was carried on at

> least until POV-Ray v2.2, but must have gotten lost between v2.2

> for DOS and v3.6 for Windows. (My guess would be that the Windows

> version never had it.)

>

> (* See here:

> https://groups.google.com/forum/#!topic/comp.sources.amiga/icNQTp_txHE)

>

> (** See here: http://cd.textfiles.com/somuch/smsharew/CPROG/)

>

Thanks. I've never dug back that far. It's true, too, that I was speculating about how many people might actually have been running early POV-Ray. I took the early v1.0 "#define DBL double" to be a mechanism whereby those without FPUs and with small early bus sizes were running with "#define DBL float" instead. Not that I was running POV-Ray, or was even aware of it, that early.

I don't remember exactly, but I didn't have a personal machine with an actual FPU until the late 90s, and my first 64-bit machine came in the early 2000s, I guess. My very first personally owned machine was an 8-bit Z80-based custom-built thing...

Bill P.


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 22 May 2018 07:45:30

Message: <5b0402da$1@news.povray.org>


On 05/21/2018 05:13 PM, clipka wrote:

> On 21.05.2018 at 22:35, clipka wrote:

>

>> I think that's a path worthwhile exploring further. My suggestion would

>> be to use a macro - say "PRECISION_FLOAT" - to define a type to be used

>

> Make that `PRECISE_FLOAT`; fits better with a series of similar types

> already in use, `PreciseColourChannel`, `PreciseRGBColour` etc., which

> are used for colour-ish data where `float` is not enough, most notably

> the SSLT code. (Obviously, there the data type of choice is `double`,

> and does suffice.)

>

OK. I'll go with PRECISE_FLOAT and plan to define it in ./base/configbase.h along with DBL and SNGL.

Further, for now, I plan to use it only in polysolve() and its sub-functions, with conversions from and to DBL on entry and exit, as I did when I implemented the 128-bit polysolve().
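A minimal sketch of that arrangement, assuming the macro approach discussed above: PRECISE_FLOAT selects the internal type, and a boundary function converts DBL to PRECISE_FLOAT on entry and back on exit. The `#define`s are placeholders for whatever ends up in base/configbase.h, and `evalPrecise` / `evalPolyPrecise` are hypothetical stand-ins for polysolve() and its sub-functions, not their real signatures.

```cpp
#include <cassert>
#include <vector>

// Placeholders only; the real definitions would live in base/configbase.h.
#ifndef DBL
#define DBL double
#endif
#ifndef PRECISE_FLOAT
#define PRECISE_FLOAT long double // alternatives: double, __float128 (g++)
#endif

// Hypothetical inner routine: all arithmetic is done in PRECISE_FLOAT.
// Coefficients are ordered highest power first.
static PRECISE_FLOAT evalPrecise(const std::vector<PRECISE_FLOAT>& c,
                                 PRECISE_FLOAT x)
{
    PRECISE_FLOAT v = 0;
    for (const PRECISE_FLOAT& ci : c)
        v = v * x + ci; // Horner step
    return v;
}

// Hypothetical boundary function: DBL in, PRECISE_FLOAT inside, DBL out,
// so callers elsewhere are unaffected by the choice of PRECISE_FLOAT.
DBL evalPolyPrecise(const DBL* coeffs, int order, DBL x)
{
    assert(coeffs != nullptr && order >= 0);
    std::vector<PRECISE_FLOAT> c(coeffs, coeffs + order + 1); // widen on entry
    return static_cast<DBL>(
        evalPrecise(c, static_cast<PRECISE_FLOAT>(x))); // narrow on exit
}
```

Switching a build between `double`, `long double` or (with g++) `__float128` then only means changing the one `#define`; callers keep passing and receiving DBL.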

Bill P.


From: William F Pokorny

Subject: Re: Old media blob issue leading to a look at sturm / polysolve.

Date: 27 May 2018 12:19:14

Message: <5b0ada82@news.povray.org>


On 03/30/2018 08:21 AM, William F Pokorny wrote:

>...

Sturm / polysolve() investigation. Coefficients wrongly seen as zeroes.

In this chapter we venture up into the Solve_Polynomial() function, which calls polysolve(), solve_quadratic(), solve_cubic() and solve_quartic(). Consider this code, added back in 1994 and part of POV-Ray v3.0:

    /*
     * Determine the "real" order of the polynomial, i.e.
     * eliminate small leading coefficients.
     */
    i = 0;
    while ((i < n) && (fabs(c0[i]) < SMALL_ENOUGH))
    {
        i++;
    }
    n -= i;
    c = &c0[i];

My last commit deletes it! Having so large a SMALL_ENOUGH (1e-10), or indeed any fixed value, is a bad idea(1), given that equation order varies, as does distance along the ray, and the equations are not normalized.

Perhaps reason enough: when an even order polynomial with no roots is reduced to an odd order polynomial, it turns into a polynomial with roots. All odd order polynomials have at least one real root.
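The false-root case can be reproduced with a small constructed example. The C++ sketch below reimplements the removed loop (the names `reduceOrder`, `hornerEval` and `SMALL_ENOUGH_SKETCH` are hypothetical; only the loop logic and the 1e-10 threshold come from the code quoted above) and feeds it a quartic built so that it has no real roots but a leading coefficient of 1e-12.

```cpp
#include <cassert>
#include <cmath>

// Threshold value quoted above for SMALL_ENOUGH; the name is local to this sketch.
const double SMALL_ENOUGH_SKETCH = 1.0e-10;

// Horner evaluation of c[0]*t^n + ... + c[n] (highest power first, as in c0[]).
double hornerEval(const double* c, int n, double t)
{
    double v = c[0];
    for (int i = 1; i <= n; ++i)
        v = v * t + c[i];
    return v;
}

// Same logic as the removed loop: skip leading coefficients whose magnitude
// is below the absolute threshold, point *reduced at the first kept
// coefficient, and return the reduced order.
int reduceOrder(const double* c0, int n, const double** reduced)
{
    assert(c0 != nullptr && reduced != nullptr && n >= 0);
    int i = 0;
    while ((i < n) && (std::fabs(c0[i]) < SMALL_ENOUGH_SKETCH))
        i++;
    *reduced = &c0[i];
    return n - i;
}

// Constructed case: with u = -t/1000 this quartic equals u^4 + u^3 + u^2 + u + 1,
// which is (u^5 - 1)/(u - 1) for u != 1 and is positive for every real u, so
// the quartic has no real roots. Its leading coefficient 1e-12 is below the
// threshold, leaving u^3 + u^2 + u + 1 = (u + 1)(u^2 + 1), which has a real
// root at u = -1, i.e. t = 1000.
const double quarticNoRealRoots[5] = {1.0e-12, -1.0e-9, 1.0e-6, -1.0e-3, 1.0};
```

After reduction the cubic evaluates to roughly 0 at t = 1000, a false intersection, while the original quartic evaluates to 1 there.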

In the attached image we have two examples - neither using sturm /

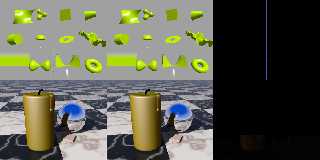

polysolve - where the code above goes bad.

In the top row we've got the shipped primativ.pov scene, specifically a poly shape which is not explicitly bounded. It creates 4th order equations, so it should use solve_quartic(). However, the code above sometimes strips the leading coefficient, and in this case we go from a quartic which has no roots to a third order cubic which has one. This is the vertical bar artifact in the upper left.

In the bottom row we have the shipped subsurface.pov scene. The differences on the bottom right (shown at 4x the actual difference values) again happen where the code above drops a single leading coefficient. In this case, for the pixels and rays where I've examined actual roots, subsurface-related rays go from 4th order equations with >=2 roots, one of which lies inside the blob interval and is kept, to a 3rd order equation with a single root outside the active blob interval. In other words, we are missing subsurface roots / intersections where we see differences.

Notes:

- The subsurface effect here is visible with no difference multiplier, and is many times larger than the returned root threshold differences recently discussed.

- Because the ray-surface equations are based upon scene geometry, the 'zeroes-not-zeroes-dropped' issue here is often scene-object or axis aligned in some fashion. I'm reminded of a rounded box canyon subsurface scene Robert McGregor posted, where I noticed one box face having a strange look. Someone joked it was perhaps a door to Narnia or similar, after nobody had an idea what caused the effect. I'm thinking perhaps the code above, or the still-present order reduction by ratio code, played a part...

NOTE! This update corrupts some previous scenes while fixing some

previous bad ones. Some planned but not yet defined or implemented

equation tuning on ray DX,DY,DZ values is to come.

Updates at:

https://github.com/wfpokorny/povray/tree/fix/polynomialsolverAccuracy

Performance and specific commit details below.

Bill P.

(1) - Yes, this strongly suggests that order reduction based upon the largest two coefficients is also a bad idea. However, I'm leaving that feature in place for now, as some primitives require this type of reduction to function at all. Perhaps in these cases the reductions should be pushed out of the solvers and into the shape code.

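Performance info for `/usr/bin/time povray -j -wt1 -fn -p -d -c lemonSturm.pov` (user / system in seconds):

| # | Commit | Notes | user | system | elapsed | Change |
|---|--------|-------|------|--------|---------|--------|
| 0 | master | | 30.22 | 0.04 | 0:30.89 | |
| 16 | 21387e2 | | 14.88 | 0.02 | 0:15.51 | -50.76% |
| 17 | 80860cd | 1e-15 constant calc fix | NA | NA | NA | |
| 18 | aa6a0a6 | `PRECISE_FLOAT` = `float` | Bad result (a) | | | |
| 18 | aa6a0a6 | `PRECISE_FLOAT` = `double` | 14.95 | 0.02 | 0:15.56 | +0.47% |
| 18 | aa6a0a6 | `PRECISE_FLOAT` = `long double` | 25.12 | 0.02 | 0:25.73 | +68.82% |
| 18 | aa6a0a6 | `PRECISE_FLOAT` = `__float128` | 282.97 | 0.13 | 4:44.13 | +1801.68% |
| 19 | 4e16623 | | 14.94 | 0.01 | 0:15.56 | -50.56% |

Changes for 16 and 19 are relative to master; changes for the 18 variants are relative to 16.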

(a) - Not exhaustively evaluated, but some shapes render OK at 'float', though those which did were slower (+150% ballpark). I'm unsure why it's so slow, but don't plan to dig further. Sturm / polysolve is for those looking to be more accurate, not less.

17) Correcting two double 1e-15 constant calculations.

In recent commits I started setting two 1e-15 values with the calculation:

= 1/std::numeric_limits<DBL>::digits10;

when I meant to code:

= 1/pow(10,std::numeric_limits<DBL>::digits10);

Both forms are bad practice in not being specific about the types of the explicit values. The first evaluates to integer 0, which is later converted to double, and I got away with it in all but my full set of test cases. Corrected:

= (DBL)1.0/pow((DBL)10.0,std::numeric_limits<DBL>::digits10);
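The integer-division pitfall is easy to reproduce in isolation. In this sketch `DBL` is a local stand-in typedef for POV-Ray's type; the corrected expression is the one given above.

```cpp
#include <cassert>
#include <cmath>
#include <limits>

typedef double DBL; // stand-in for POV-Ray's DBL in this sketch

// Intended value: 10^-digits10, i.e. 1e-15 for double.
// Buggy form: int / int, so 1 / 15 is integer division and yields 0,
// which is then converted to 0.0.
const DBL buggyTolerance = 1 / std::numeric_limits<DBL>::digits10;

// Corrected form, with explicit floating point operands.
const DBL fixedTolerance =
    (DBL)1.0 / std::pow((DBL)10.0, std::numeric_limits<DBL>::digits10);
```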

18) Implementing the suggested PRECISE_FLOAT macro mechanism for polysolve().

Primarily, this enables 'long double' support for the polysolve() / 'sturm' solver, given that 'long double' is part of the C++11 standard and gets most users 80 bits or more, as opposed to 64. Further, the additional bits are almost always hardware-backed, making for reasonable performance.

With GNU g++ 64-bit environments, '__float128' is a valid setting with no additional library required, though it's very slow. Other extended-precision floats, such as 'double double', are enabled via the PRECISE_ mechanisms, but they involve additional libraries and settings. See the code.

Note! With this update the polysolve sturm chain is no longer pruned with a default 1e-10 value, but rather with a value appropriate for the floating point type. This means better accuracy for some previously bad root results and less accuracy for others. Coming updates will better tune the chain equation pruning.
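To illustrate what pruning a Sturm chain with a type-dependent value (rather than a fixed 1e-10) might look like, here is a self-contained C++ sketch of Sturm-sequence root counting templated on the floating point type. It is not POV-Ray's polysolve(): the coefficient ordering (lowest power first), the function names, and the pruning rule (drop leading remainder coefficients no larger than 1000 times the epsilon of T times the largest coefficient of the dividend) are all assumptions chosen for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <vector>

// Coefficients lowest power first: c[0] + c[1]*x + ... (sketch convention).
template <typename T> using Poly = std::vector<T>;

template <typename T> T evalPoly(const Poly<T>& c, T x)
{
    T v = 0;
    for (std::size_t i = c.size(); i-- > 0;)
        v = v * x + c[i]; // Horner, from the highest power down
    return v;
}

template <typename T> Poly<T> derivative(const Poly<T>& c)
{
    Poly<T> d;
    for (std::size_t i = 1; i < c.size(); ++i)
        d.push_back(static_cast<T>(i) * c[i]);
    return d;
}

// Remainder of a / b by polynomial long division (b must have degree >= 1).
template <typename T> Poly<T> polyRemainder(Poly<T> a, const Poly<T>& b)
{
    while (a.size() >= b.size()) {
        const T factor = a.back() / b.back();
        const std::size_t shift = a.size() - b.size();
        for (std::size_t i = 0; i < b.size(); ++i)
            a[i + shift] -= factor * b[i];
        a.pop_back(); // the leading term cancels by construction
    }
    return a;
}

// Sturm chain: p0 = p, p1 = p', p(k+1) = -rem(p(k-1), p(k)). Leading
// remainder coefficients with |c| <= 1000 * epsilon(T) * (largest |coef|
// of the dividend) are treated as zero, so the threshold follows T.
template <typename T> std::vector<Poly<T>> sturmChain(const Poly<T>& p)
{
    const T relTol = T(1000) * std::numeric_limits<T>::epsilon();
    std::vector<Poly<T>> chain{p, derivative(p)};
    while (chain.back().size() > 1) {
        const Poly<T>& prev = chain[chain.size() - 2];
        T scale = 0;
        for (const T& v : prev)
            scale = std::max(scale, std::abs(v));
        Poly<T> r = polyRemainder(prev, chain.back());
        while (!r.empty() && std::abs(r.back()) <= relTol * scale)
            r.pop_back();
        if (r.empty())
            break; // remainder vanished: the chain ends here
        for (T& v : r)
            v = -v;
        chain.push_back(r);
    }
    return chain;
}

// Number of sign changes along the chain at x (zero values are skipped).
template <typename T> int signChanges(const std::vector<Poly<T>>& chain, T x)
{
    int changes = 0;
    T prev = 0;
    for (const Poly<T>& q : chain) {
        const T v = evalPoly(q, x);
        if (v == 0)
            continue;
        if (prev != 0 && ((v < 0) != (prev < 0)))
            ++changes;
        prev = v;
    }
    return changes;
}

// Distinct real roots of p in (a, b], by Sturm's theorem (a, b not roots).
template <typename T> int countRoots(const Poly<T>& p, T a, T b)
{
    const std::vector<Poly<T>> chain = sturmChain(p);
    return signChanges(chain, a) - signChanges(chain, b);
}
```

For example, `countRoots<double>({-6.0, 11.0, -6.0, 1.0}, 0.0, 4.0)` counts the three roots of (t-1)(t-2)(t-3), and the same call made with `long double` prunes against that type's smaller epsilon.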

19) Removing leading, near-zero coefficient reduction in Solve_Polynomial().

Leading coefficients smaller than SMALL_ENOUGH (1e-10) were being dropped, reducing the order of the incoming polynomial even when the near-zero values had meaning with respect to the roots. This led to both false roots and missed roots.

Attachment: 'notzeroesdropped.png' (326 KB)