No, I'm not modeling "cross reed" glasses: see post by the same subject

on p.newusers ...

--

Jaime

Attachments:

Download 'plenoptic-camera.jpg' (164 KB)

Jaime Vives Piqueres <jai### [at] ignorancia org> wrote:

> No, I'm not modeling "cross reed" glasses: see post by the same subject

> on p.newusers ...

>

> --

> Jaime

Hmm, not seeing the post. Is this for use with integral imaging?

http://en.wikipedia.org/wiki/Integral_imaging

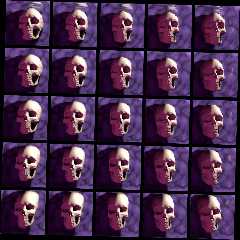

I was playing with integral imaging in POV just last year. It's a cool

technology, better than regular lenticular arrays or even most holograms, as the

cards can be rotated to any angle while still preserving their 3D appearance.

Rendering the source images in POV-Ray is super easy, although the resolution

must be very high.

Attached is one of my tests. You can cross your eyes at any two cards to view

the skull in 3D.

Sam

Attachments:

Download 'iiskull3m_07s.jpg' (198 KB)

> Jaime Vives Piqueres <jai### [at] ignorancia org> wrote:

>> No, I'm not modeling "cross reed" glasses: see post by the same subject

>> on p.newusers ...

>>

>> --

>> Jaime

>

> Hmm, not seeing the post.

Sorry, I changed the subject, but the original is "povray output as a

film/CCD" from 03/07/12 (I forgot that not everyone sets up their news reader

to display posts ordered by date... :):

http://news.povray.org/povray.newusers/thread/%3Cweb.50009d33faf6826b9b0928190%40news.povray.org%3E/

> Is this for use with integral imaging?

> http://en.wikipedia.org/wiki/Integral_imaging

No, that's apparently another interesting usage of light-fields. A

plenoptic camera takes images using a microlenses array, with the

purpose of being able to refocus the image after the fact (among other

things).

http://en.wikipedia.org/wiki/Light-field_camera

--

Jaime

Jaime Vives Piqueres <jai### [at] ignorancia org> wrote:

> No, I'm not modeling "cross reed" glasses: see post by the same subject

> on p.newusers ...

>

> --

> Jaime

Interesting and fine image. I wonder whether a mesh cam is really needed here, but

I have no time for tests myself. Shouldn't a usual cam within your mesh (maybe

extruded into the -z direction by some amount, flattened and then properly

positioned), with a proper ior for the mesh, yield a similar picture?

Best regards,

Michael

> Jaime Vives Piqueres <jai### [at] ignorancia org> wrote:

>> No, I'm not modeling "cross reed" glasses: see post by the same subject

>> on p.newusers ...

>>

>> --

>> Jaime

>

> Interesting and fine image. I wonder whether a mesh cam is really needed here, but

> I have no time for tests myself. Shouldn't a usual cam within your mesh (maybe

> extruded into the -z direction by some amount, flattened and then properly

> positioned), with a proper ior for the mesh, yield a similar picture?

>

Yes, but the mesh_camera is much faster, and the result is much

smoother and resolution-independent. The only pain is that with

distribution #3 you cannot use more than one mesh (to stack some lenses).
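
As a rough illustration of the kind of mesh_camera setup discussed here (not the scene behind the attached image), a minimal sketch might look like the following. The name `SensorMesh` and the two test spheres are made up for the example. The argument order inside `mesh_camera` (rays per pixel, distribution type, then the mesh) and the meaning of `location` and `direction` are taken from the POV-Ray 3.7 documentation as best recalled, so check them against your version. A single flat quad only gives an orthographic view; a plenoptic sensor would need a mesh whose normals and UV layout encode the microlens array.

```pov
#version 3.7;
global_settings { assumed_gamma 1.0 }

// Hypothetical sensor: one UV-mapped quad in the z = 0 plane.
// With distribution 3, each image pixel is mapped to the mesh point
// that has the matching UV coordinate.
#declare SensorMesh = mesh {
  triangle { <-1,-1,0>, <1,-1,0>, <1,1,0>  uv_vectors <0,0>, <1,0>, <1,1> }
  triangle { <-1,-1,0>, <1,1,0>, <-1,1,0>  uv_vectors <0,0>, <1,1>, <0,1> }
}

camera {
  mesh_camera {
    1                     // rays per pixel
    3                     // distribution type (UV based, single mesh only)
    mesh { SensorMesh }
  }
  location <0, 0, 0.001>  // z moves the ray origin off the face, along its normal
  // direction <0, 0, -1> // assumption: a negative z here reverses the face normals
}

// One sphere on each side of the sensor, so the render shows
// which way the face normals actually point.
light_source { <5, 5, -10> rgb 1 }
light_source { <5, 5,  10> rgb 1 }
sphere { <0, 0,  3>, 0.8 pigment { rgb <1, 0.3, 0.2> } }  // red: +z side
sphere { <0, 0, -3>, 0.8 pigment { rgb <0.2, 0.3, 1> } }  // blue: -z side
```

The two spheres are only a diagnostic: whichever colour shows up tells you the side the rays leave from, and the commented-out `direction` line is the documented way to flip that.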

--

Jaime

Jaime Vives Piqueres <jai### [at] ignorancia org> wrote:

> Yes, but the mesh_camera is much faster, and the result is much

> smoother and resolution-independent. The only pain is that with

> distribution #3 you cannot use more than one mesh (to stack some lenses).

>

>

> --

> Jaime

That was my reason for proposing another approach. I couldn't resist testing my

idea, but it yielded only poor results (as you can see). But why not put a model

of a lens in front of the mesh cam?

Best regards,

Michael

Attachments:

Download 'iortest.png' (189 KB)

"MichaelJF" <mi-### [at] t-online de> wrote:

> Jaime Vives Piqueres <jai### [at] ignorancia org> wrote:

> > Yes, but the mesh_camera is much faster, and the result is much

> > smoother and resolution-independent. The only pain is that with

> > distribution #3 you cannot use more than one mesh (to stack some lenses).

> > --

> > Jaime

>

> That was my reason for proposing another approach. I couldn't resist testing my

> idea, but it yielded only poor results (as you can see). But why not put a model

> of a lens in front of the mesh cam?

>

> Best regards,

> Michael

I might be missing something fundamental to the topic at hand (wouldn't be the

first time ;) ), but why not just place the following in front of the camera?

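    #declare ArrayRes = 96;  // lenslets per unit length along x and y

    // Flat, fully transparent plate carrying the lenslet normal pattern
    box{
      <-0.5, -0.5, 0>, <0.5, 0.5, 0>
      pigment{rgb 0 transmit 1}
      normal{
        function{
          (1-(x*x+y*y)/2)  // paraboloid profile of a single lenslet
        } -4*2             // bump size
        translate x+y
        scale 1/2          // fit one lenslet into the unit cell
        warp{repeat x}
        warp{repeat y}     // tile the cell in x and y
        scale 1/ArrayRes
      }
      finish{diffuse 0}
      interior{ior 1.5}
      no_shadow no_reflection
    }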

That's what I used when rendering the light field for my integral imaging sim.

My first attempt for this step used actual geometry, but too many artifacts were

produced, so I settled on this quick-to-parse, fast-to-render solution.

Sam

On 30/12/2012 7:35 PM, Samuel Benge wrote:

> I might be missing something fundamental to the topic at hand (wouldn't be the

> first time;) ), but why not just place the following in front of the camera?

I must be missing it too. My thought was to use camera ray

perturbation with a leopard normal. I am running an animation ATM,

varying the scale.
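
For readers who have not used camera ray perturbation, a minimal sketch of the idea could look like this. The scene contents, the bump size and the scale range are arbitrary choices for illustration, not the values used in the animation mentioned above.

```pov
#version 3.7;
global_settings { assumed_gamma 1.0 }

camera {
  location <0, 1.5, -5>
  look_at  <0, 0.5, 0>
  // Camera ray perturbation: the normal pattern bends each primary ray.
  normal {
    leopard 0.5               // bump size, an arbitrary starting value
    scale 0.02 + 0.2 * clock  // pattern scale grows as the clock runs
  }
}

// Simple test content so the distortion is visible.
light_source { <10, 20, -10> rgb 1 }
plane  { y, 0 pigment { checker rgb 1, rgb 0.2 } }
sphere { <0, 1, 0>, 1 pigment { rgb <1, 0.3, 0.2> } }
```

Rendered as an animation (for example with `+KFF30`), the `clock` value sweeps the pattern scale from fine to coarse.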

--

Regards

Stephen

"Samuel Benge" <stb### [at] hotmail com> wrote:

>

> I might be missing something fundamental to the topic at hand (wouldn't be the

> first time ;) ), but why not just place the following in front of the camera?

>

> #declare ArrayRes = 96;

> box{

> <-0.5, -0.5, 0>, <0.5, 0.5, 0>

> pigment{rgb 0 transmit 1}

> normal{

> function{

> (1-(x*x+y*y)/2)

> } -4*2

> translate x+y

> scale 1/2

> warp{repeat x}

> warp{repeat y}

> scale 1/ArrayRes

> }

> finish{diffuse 0}

> interior{ior 1.5}

> no_shadow no_reflection

> }

>

> That's what I used when rendering the light field for my integral imaging sim.

> My first attempt for this step used actual geometry, but too many artifacts were

> produced, so I settled on this quick-to-parse, fast-to-render solution.

>

> Sam

I don't think you are missing anything here. My idea was simply the first that came

to mind when I saw Jaime's picture, so I gave it a quick test. I think your idea and

Stephen's may work better, but I didn't come up with them.

For me it was just a short and welcome distraction from more urgent RL issues.

Meanwhile I wonder why we simulate this camera at all. I first learned about the

existence of plenoptic cameras from Jaime's post and soon found an article

from the Stanford group. As I understand it, their main goal is to get more

depth of field with this camera than with a usual one. Didn't we have the problem

of getting less DOF using focal blur? If one is interested in photorealistic rendering

of the results of a plenoptic camera, then these pictures must be heavily

postprocessed by analysing every field and composing an image from them. The

maths seems to be non-trivial.

Best regards,

Michael

> For me it was just a short and welcome distraction from more urgent

> RL issues. Meanwhile I wonder why we simulate this camera at all. I

> first learned about the existence of plenoptic cameras from Jaime's

> post and soon found an article from the Stanford group. As I

> understand it, their main goal is to get more depth of field with

> this camera than with a usual one. Didn't we have the problem of getting

> less DOF using focal blur? If one is interested in photorealistic

> rendering of the results of a plenoptic camera, then these pictures

> must be heavily postprocessed by analysing every field and composing

> an image from them. The maths seems to be non-trivial.

I explored this just because a guy asked for help simulating the

output of a plenoptic camera. I didn't get much information, but I guess

he is a researcher trying to validate his reconstruction or refocusing

algorithms in a cheap way, without having a real plenoptic camera at hand.

And of course no, this isn't of much use for us, regular POVers...

--

Jaime
